Spark Tutorial

Apache Spark is one of the largest open-source projects for data processing. It is a fast, general-purpose, unified analytics engine for big data and machine learning, with high-level APIs in Java, Scala, Python, SQL, and R. Spark began in 2009 at a UC Berkeley lab that later became the AMPLab. Because Spark is a processing engine rather than a storage system, it runs on a cluster manager such as Hadoop YARN, Apache Mesos, Kubernetes, or its own standalone mode, on premises or in a cloud like AWS, Azure, or GCP, and it reads its data from external storage.
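As a rough illustration of how one application can target these different cluster managers, the sketch below builds the master URL that Spark expects for each of them. The helper `master_url` and its `target` names are hypothetical and exist only for this example; the URL formats themselves (`local[*]`, `spark://`, `yarn`, `mesos://`, `k8s://`) are the ones Spark accepts for its `--master` option.

```python
def master_url(target, host="localhost", port=None):
    """Return a Spark master URL for a deployment target (hypothetical helper)."""
    if target == "local":
        return "local[*]"                           # use all cores on this machine
    if target == "standalone":
        return f"spark://{host}:{port or 7077}"     # 7077: default standalone port
    if target == "yarn":
        return "yarn"                               # cluster found via Hadoop config
    if target == "mesos":
        return f"mesos://{host}:{port or 5050}"     # Mesos is deprecated in newer Spark
    if target == "kubernetes":
        # Spark requires the API server port to be given explicitly.
        return f"k8s://https://{host}:{port or 443}"
    raise ValueError(f"unknown deployment target: {target}")
```

The returned string would typically be passed to `spark-submit --master` or to `SparkSession.builder.master(...)`; the application code itself stays the same across targets.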

Apache Spark has its own stack of libraries: Spark SQL and DataFrames, MLlib for machine learning, GraphX for graph computation, and Spark Streaming. These libraries can be combined within the same application.

Why Do We Need to Learn Spark?

In today's era, data is the new oil, but it exists in structured, semi-structured, and unstructured forms. Apache Spark achieves high performance for both batch and streaming data. Large internet companies such as Netflix, Amazon, Yahoo, and Facebook use Spark in production, with reported clusters of around 8000 nodes handling petabytes of data. Technology moves ahead daily, and learning Apache Spark is a must to keep up with it. Below are some reasons to learn it:

- Spark can run up to 100 times faster than MapReduce when the data fits in memory, and it integrates easily with the Hadoop ecosystem, so its use is growing in companies large and small. Because it is open source, many organizations have already adopted it.

- Data is generated by mobile apps, websites, IoT devices, sensors, and so on, and this volume of data is difficult to handle and process. Spark can process such data in near real time. It is also fast and easy to use from Python and SQL, which is why many machine learning engineers and data scientists prefer it.

- Spark programs can be written in Java, Python, Scala, and R, and most professionals and college students already know at least one of these languages. That prior knowledge lets learners create Spark applications in a language they know. Also, Java supports the scale at which Spark has developed. Also, 100-200 lines of code written in Java for a single application can be converted to

- Spark professionals are in high demand in today's market, and recruiters are willing to bend some rules and offer high salaries to Spark developers. With high demand and low supply of Apache Spark professionals, it is a good time to get into this technology and earn well.

Applications of Spark

The Apache Spark ecosystem is used across industries to build and run fast big data applications. Here are some applications of Spark:

- In the e-commerce industry: Spark is used to analyze real-time transactions for products, customers, and in-store sales. This information can be passed to machine learning algorithms to build a recommendation model. The model can also draw on customer comments and product reviews, and businesses can use it to spot new trends.

- In the gaming industry: Because Spark processes real-time data, programmers can deploy models within minutes to build the best gaming experience. It is used to analyze players and their behavior to create advertising and offers, and to build real-time mobile game analytics.

- In financial services: Spark can be used to detect fraud and security threats by analyzing large volumes of archived logs and combining them with external sources, such as user accounts, and with internal information. The Spark stack can help get top-notch results from this data and reduce risk in a financial portfolio.

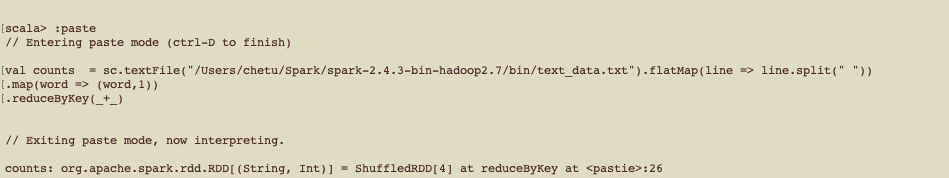

Example (Word Count Example)

In this example, we are counting the number of words in a text file:

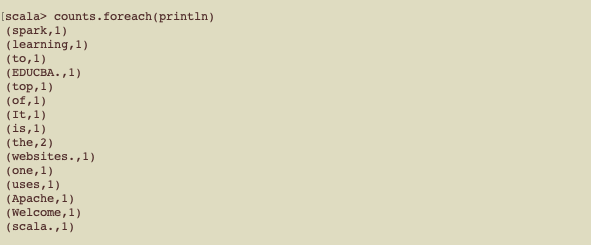
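The listing above is only available as an image, so the following is a minimal PySpark sketch of the same idea, not a transcription of it. It assumes PySpark is installed and that Spark runs locally; the function names `tokenize`, `word_count_local`, and `word_count_spark` are made up for this example. `word_count_local` is a plain-Python reference for the same pipeline and runs without Spark.

```python
import re
from collections import Counter


def tokenize(line):
    """Split one line of text into lowercase words."""
    return re.findall(r"[a-z0-9']+", line.lower())


def word_count_local(lines):
    """Plain-Python reference for the Spark pipeline below."""
    return dict(Counter(word for line in lines for word in tokenize(line)))


def word_count_spark(path):
    """Count words in the text file at `path` using Spark's RDD API."""
    # Imported here so the rest of the module loads without PySpark installed.
    from pyspark.sql import SparkSession

    spark = (SparkSession.builder
             .appName("WordCount")
             .master("local[*]")   # assumption: run locally on all cores
             .getOrCreate())
    try:
        counts = (spark.sparkContext.textFile(path)   # one record per line
                  .flatMap(tokenize)                  # lines -> words
                  .map(lambda word: (word, 1))        # word -> (word, 1)
                  .reduceByKey(lambda a, b: a + b))   # sum the 1s per word
        return dict(counts.collect())
    finally:
        spark.stop()
```

Calling `word_count_spark("input.txt")` (a hypothetical file name) returns a dictionary mapping each word to its count. `collect()` brings every result to the driver, which is fine for a small file but not for a large vocabulary.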

Output:

Pre-requisites

To learn Apache Spark, a programmer should have prior knowledge of functional programming in Scala, the Hadoop framework, Unix shell scripting, RDBMS concepts, and the Linux operating system. Knowledge of Java is also useful. For PySpark, Python knowledge is preferred.

Target Audience

This Apache Spark tutorial is for analytics and data engineering professionals. Professionals from related fields, such as ETL developers and Python developers, who aspire to become Spark developers can also use it to transition into big data.
