Introduction to Spark Submit

Apache Spark Submit is the spark-submit script used to launch Spark applications on a cluster, for example a Hadoop cluster managed by YARN. Once an application is running, Apache Spark provides a suite of web user interfaces (UI) that help in monitoring the status and resource consumption of the Spark cluster and in understanding how the application is executing. The web UI of a Spark application gives information about the list of scheduler stages and tasks, environmental information, a summary of memory usage and RDD sizes, and the running executors.

Let us understand all these one by one in detail.

Syntax

The syntax for Apache Spark Submit (How to submit Spark Applications):

spark-submit [options] <application-jar | python-file> [application-arguments]
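The key point of this syntax is ordering. spark-submit's own options come first, then the application JAR or Python file. Everything after that is passed to the application as its arguments. The sketch below assumes you drive spark-submit from Python (for example with subprocess.run). The helper build_spark_submit_argv is hypothetical and only assembles the argument list without launching anything. The file name my_app.py and the argument input.txt are placeholders.

```python
from typing import Dict, List, Optional, Sequence


def build_spark_submit_argv(
    app: str,
    options: Optional[Dict[str, str]] = None,
    app_args: Optional[Sequence[str]] = None,
) -> List[str]:
    """Hypothetical helper: return the argv for a spark-submit call.

    Everything after the application JAR or Python file is passed to the
    application itself, not to spark-submit.
    """
    argv = ["spark-submit"]
    # spark-submit options, each emitted as "--name value"
    for name, value in (options or {}).items():
        argv += [f"--{name}", value]
    # the application, followed by its own arguments
    argv.append(app)
    argv += list(app_args or [])
    return argv


argv = build_spark_submit_argv(
    "my_app.py",            # placeholder Python application
    {"master": "local[*]"},
    ["input.txt"],          # placeholder application argument
)
print(argv)  # prints ['spark-submit', '--master', 'local[*]', 'my_app.py', 'input.txt']
```

Passing the resulting list to subprocess.run(argv, check=True) would launch the job, assuming spark-submit is on your PATH. Building a list rather than one string also avoids shell quoting problems.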

How Does Spark Submit Work?

Running SparkPi on YARN demonstrates how to run one of the sample applications packaged with Spark. SparkPi computes an approximation of the value of pi.
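SparkPi uses a Monte Carlo method. It samples random points in the square [-1, 1] x [-1, 1] and counts how many fall inside the unit circle. The ratio of the two areas is pi/4. The optional argument passed to SparkPi is the number of slices (partitions), and each slice contributes 100,000 samples. Spark distributes the sampling across the cluster. The sketch below reproduces only the same idea in plain Python, without Spark, so the arithmetic can be checked locally. The seed and sample count are arbitrary choices for this example.

```python
import random


def estimate_pi(num_samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of pi, following the same idea as SparkPi
    but running locally in a single process (no Spark involved)."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(num_samples):
        # random point in the square [-1, 1] x [-1, 1]
        x = rng.random() * 2 - 1
        y = rng.random() * 2 - 1
        if x * x + y * y <= 1:
            inside += 1
    # (points inside circle) / (all points) approximates pi / 4
    return 4.0 * inside / num_samples


# 2 slices x 100,000 samples, matching SparkPi's default slice count
print(estimate_pi(2 * 100_000))  # prints a value close to 3.14
```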

Use the spark-submit script to submit an application, either a Python file or a compiled and packaged Java or Scala (Spark) JAR.

The command options on Spark Submit are as follows:

| Option | Description |
|---|---|
| --class | The fully qualified name of the class containing the main method, for Java and Scala applications. |
| --conf | A Spark configuration property in key=value format. Values containing spaces must be surrounded by quotes, as in "key=value". |
| --driver-class-path | Configuration and classpath entries to pass to the driver. JARs added with --jars are automatically included in the classpath. |
| --driver-cores | The number of cores used by the driver in cluster mode. Default: 1. |
| --driver-memory | The maximum heap size to allocate to the driver, given as a string such as 2g. The spark.driver.memory property can be used instead. |
| --files | A comma-separated list of files to be placed in the working directory of each executor. For client deployment mode, the path must point to a local file. For cluster deployment mode, the path can point to a local file or to a URL globally visible inside the cluster. |
| --jars | Additional JARs to be loaded into the classpath of the driver and executors in cluster mode, or into the executor classpath in client mode. |
| --master | The location where the application runs. |
| --packages | A comma-separated list of Maven coordinates of JARs to include on the driver and executor classpaths. The local Maven repository, Maven Central, and the remote repositories specified in --repositories are searched in that order. |
| --py-files | A comma-separated list of .py, .zip, or .egg files to place on the PYTHONPATH. For client deployment mode, the path must point to a local file. For cluster deployment mode, the path can point to a local file or to a URL globally visible inside the cluster. |
| --repositories | A comma-separated list of remote repositories to search for the Maven coordinates specified in --packages. |
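Here is a sketch, in Python, of how several of these options combine in one SparkPi submission. The JAR paths lib/a.jar and lib/b.jar, the Maven coordinate org.example:demo:1.0, the examples JAR name spark-examples.jar, and the JVM flags in the --conf value are placeholders chosen for illustration. --deploy-mode cluster is included because --driver-cores only applies in cluster mode. The standard-library function shlex.join then shows how the same command would have to be quoted in a POSIX shell, including the quotes around the --conf value that contains a space.

```python
import shlex
from typing import List


def sparkpi_argv(jars: List[str], packages: List[str]) -> List[str]:
    """Assemble a spark-submit argv for SparkPi using options from the table.

    All paths, coordinates and JVM flags are placeholders for this sketch.
    """
    return [
        "spark-submit",
        "--class", "org.apache.spark.examples.SparkPi",  # main class (JAR apps)
        "--master", "yarn",
        "--deploy-mode", "cluster",   # --driver-cores applies in cluster mode
        "--driver-cores", "1",
        "--driver-memory", "2g",      # alternative: spark.driver.memory=2g
        # key=value format; this value contains a space
        "--conf", "spark.executor.extraJavaOptions=-XX:+UseG1GC -verbose:gc",
        "--jars", ",".join(jars),          # comma-separated list of JARs
        "--packages", ",".join(packages),  # comma-separated Maven coordinates
        "spark-examples.jar",  # placeholder application JAR
        "10",                  # SparkPi argument: number of slices
    ]


argv = sparkpi_argv(["lib/a.jar", "lib/b.jar"], ["org.example:demo:1.0"])
# Print the equivalent POSIX shell command, quoted where needed
print(shlex.join(argv))
```

Keeping the arguments as a list means the --conf value reaches spark-submit intact. Only the printed shell form needs quotes.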

Master values:

| Master | Description |
|---|---|
| local | Run Spark locally with one worker thread (no parallelism). |
| local[K] | Run Spark locally with K worker threads. Ideally, set K to the number of cores on the host. |
| local[*] | Run Spark locally with as many worker threads as there are logical cores on the host. |
| yarn | Run using the YARN cluster manager. The cluster location is determined by the Hadoop configuration directory (HADOOP_CONF_DIR) or the YARN configuration directory (YARN_CONF_DIR). |
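The master values in the table above follow a small set of patterns. The sketch below builds the local forms and checks the environment variables that the yarn master depends on. Both helper names are hypothetical. The order in which yarn_conf_dir checks the two variables is an assumption made for this example, not documented Spark behavior.

```python
import os
from typing import Mapping, Optional, Union


def local_master(threads: Optional[Union[int, str]] = None) -> str:
    """Build a local master URL: None -> "local", K -> "local[K]", "*" -> "local[*]"."""
    if threads is None:
        return "local"  # one worker thread, no parallelism
    if threads == "*":
        return "local[*]"  # one worker thread per logical core
    if isinstance(threads, int) and not isinstance(threads, bool) and threads > 0:
        return f"local[{threads}]"  # K worker threads
    raise ValueError(f"invalid thread count: {threads!r}")


def yarn_conf_dir(env: Mapping[str, str] = os.environ) -> str:
    """Return the configuration directory that --master yarn relies on.

    Checks HADOOP_CONF_DIR first, then YARN_CONF_DIR (order assumed for
    this sketch).
    """
    for var in ("HADOOP_CONF_DIR", "YARN_CONF_DIR"):
        if env.get(var):
            return env[var]
    raise RuntimeError("set HADOOP_CONF_DIR or YARN_CONF_DIR to use --master yarn")


print(local_master(), local_master(4), local_master("*"))  # prints local local[4] local[*]
```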

Example to Implement Spark Submit

Below is an example of submitting an application with Spark Submit:

Example #1

Run the spark-submit.sh script from any of your local shells to submit an application. The generated log file lists the steps taken by the spark-submit.sh script and is located in the directory where the script is run. (The same script can also be run with the status parameter, as shown next.)

Code:

./spark-submit.sh \
--vcap ./vcap.json \
--deploy-mode cluster \
--conf spark.service.spark_version=2.1 \
--class org.apache.spark.examples.SparkPi \
sparkpi_2.10-1.0.jar

Run the spark-submit.sh script with the status parameter to check on a submitted application. The status is queried continuously until the job reports that it has finished, failed, or hit an error. Press Ctrl-C to stop the running application or the status polling. You are then asked whether to cancel the job: answer yes to cancel it, or no to keep the job running.

./spark-submit.sh \
--vcap ./vcap.json \
--conf spark.service.spark_version=2.1 \
--status driver-20180117092338-0726-99a44999-f636-46ea-b7f2-b63d530e89e0

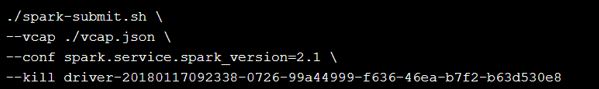
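The status polling described above follows a simple loop: query the status, stop on a terminal state, otherwise wait and query again. The sketch below models only that loop. get_status is a hypothetical callable standing in for whatever the script does to query the driver. The upper-case state names and the 5-second default interval are assumptions. In Python, pressing Ctrl-C inside the loop raises KeyboardInterrupt, and that is where a yes/no cancellation prompt would go.

```python
import time
from typing import Callable

# Terminal states named in the text; the upper-case spelling is an assumption
TERMINAL_STATES = {"FINISHED", "FAILED", "ERROR"}


def poll_status(get_status: Callable[[], str], interval_s: float = 5.0) -> str:
    """Call get_status() until it returns a terminal state, then return it.

    get_status is hypothetical and stands in for the real status query.
    Ctrl-C raises KeyboardInterrupt, which propagates to the caller.
    """
    while True:
        state = get_status()
        if state in TERMINAL_STATES:
            return state
        time.sleep(interval_s)  # wait before querying again


# Usage with a fake sequence of states instead of a real cluster
fake_states = iter(["SUBMITTED", "RUNNING", "RUNNING", "FINISHED"])
print(poll_status(lambda: next(fake_states), interval_s=0))  # prints FINISHED
```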

The kill parameter is used to cancel a running job with the spark-submit.sh script.

./spark-submit.sh \
--vcap ./vcap.json \
--conf spark.service.spark_version=2.1 \
--kill driver-20180117092338-0726-99a44999-f636-46ea-b7f2-b63d530e89e0

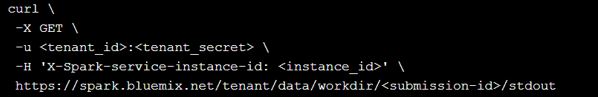


Conclusion

We have seen the concept of Apache Spark Submit. Using it gives you a great advantage: powerful, on-demand processing in the cloud that is cost-effective and fast, working on data that you load on your own into an object store in the IBM Cloud.
