Updated June 8, 2023

Introduction to AdaBoost Algorithm

The AdaBoost algorithm can be used to boost the performance of other machine learning algorithms. Machine learning has become a powerful tool for making predictions from large amounts of data, and it is now so popular that its applications appear in our day-to-day activities. A common example is getting product suggestions while shopping online, based on items the customer bought in the past. Machine learning, often referred to as predictive analysis or predictive modeling, can be defined as the ability of computers to learn without being programmed explicitly. Instead, it uses programmed algorithms to analyze input data and predict an output within an acceptable range.

What is AdaBoost Algorithm?

In machine learning, boosting originated from the question of whether a set of weak classifiers could be converted into a strong classifier. A weak learner or classifier is one that performs only slightly better than random guessing. This approach tends to resist overfitting because, in a large set of weak classifiers, each weak classifier still performs better than random. A typical weak classifier is a simple threshold on a single feature: if the feature exceeds the threshold, the example is predicted as positive; otherwise, it is classified as negative.
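The threshold rule described above can be sketched in a few lines of Python. The function name `stump_predict` and the sample values are made up for illustration; the labels +1 and -1 stand for the positive and negative class.

```python
def stump_predict(x, feature, threshold):
    """Weak classifier: +1 if the chosen feature exceeds the threshold, else -1."""
    return 1 if x[feature] > threshold else -1


# A hypothetical sample with two features.
sample = [0.2, 3.5]
print(stump_predict(sample, feature=1, threshold=2.0))  # feature 1 is above 2.0
print(stump_predict(sample, feature=0, threshold=2.0))  # feature 0 is not
```

On its own, such a rule is rarely accurate, which is exactly why it qualifies as a weak classifier.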

AdaBoost stands for ‘Adaptive Boosting,’ which transforms weak learners or predictors into strong predictors to solve classification problems.

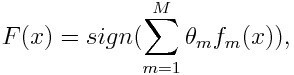

For classification, the final classifier can be written as:

$$F(x) = \operatorname{sign}\left(\sum_{m=1}^{M} \theta_m f_m(x)\right)$$

Here $f_m$ designates the $m$th weak classifier, and $\theta_m$ represents its corresponding weight.
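As a rough sketch, the final classifier is the sign of the weighted sum of the weak classifiers' votes. The three weak classifiers and their weights below are invented for illustration, and breaking a tie (a sum of exactly zero) toward +1 is an assumption of this sketch.

```python
def ensemble_predict(x, classifiers, weights):
    """Sign of the weighted sum of weak-classifier votes (each vote is +1 or -1)."""
    score = sum(theta * f(x) for f, theta in zip(classifiers, weights))
    return 1 if score >= 0 else -1  # assumption: a zero score counts as +1


# Hypothetical weak classifiers: simple thresholds on single features.
classifiers = [
    lambda x: 1 if x[0] > 0.5 else -1,
    lambda x: 1 if x[1] > 0.5 else -1,
    lambda x: 1 if x[0] > 0.9 else -1,
]
weights = [0.9, 0.4, 0.3]  # hypothetical classifier weights

# The first classifier votes +1 and outweighs the other two voting -1.
print(ensemble_predict([0.7, 0.1], classifiers, weights))
```

A single heavily weighted classifier can overrule several lightly weighted ones, which is how more accurate weak learners get more say in the result.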

How does the AdaBoost Algorithm Work?

AdaBoost can be used to improve the performance of machine learning algorithms. It works best with weak learners, that is, models whose accuracy on a classification problem is just above random chance. The algorithm most commonly used with AdaBoost is a decision tree with a single level, also called a decision stump. A weak learner is a classifier or predictor that performs relatively poorly in terms of accuracy, which also means it is cheap to compute. By using boosting, we can combine many such weak learners to create a strong classifier.

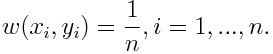

Consider a data set containing $n$ points:

$$x_i \in \mathbb{R}^d, \quad y_i \in \{-1, 1\}$$

Here -1 represents the negative class and 1 the positive class. The weight of each data point is initialized as:

$$w_1(x_i, y_i) = \frac{1}{n}, \quad i = 1, \dots, n$$

Then, for each iteration $m = 1, \dots, M$, the following steps are carried out:

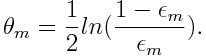

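First, fit the weak classifiers to the data set and select the one with the lowest weighted classification error, that is, the sum of the weights of the points it misclassifies:

$$\epsilon_m = \sum_{i=1}^{n} w_m(x_i, y_i)\, \mathbf{1}\left[f_m(x_i) \neq y_i\right]$$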
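The selection step can be sketched as an exhaustive search over threshold classifiers. This is only one possible way to fit the weak classifiers; the helper names and the tiny one-feature data set are made up for illustration, and the weights start out uniform at 1/n.

```python
def weighted_error(predictions, labels, weights):
    """Sum of the weights of the misclassified points."""
    return sum(w for p, y, w in zip(predictions, labels, weights) if p != y)


def best_stump(X, y, weights):
    """Try every (feature, threshold) pair and keep the lowest weighted error."""
    best = None
    for feature in range(len(X[0])):
        for threshold in sorted({row[feature] for row in X}):
            preds = [1 if row[feature] > threshold else -1 for row in X]
            err = weighted_error(preds, y, weights)
            if best is None or err < best[0]:
                best = (err, feature, threshold)
    return best


# Hypothetical data: one feature, labels that no single threshold separates.
X = [[1.0], [2.0], [3.0], [4.0]]
y = [-1, 1, -1, 1]
weights = [1 / len(X)] * len(X)  # uniform initial weights, 1/n each

err, feature, threshold = best_stump(X, y, weights)
print(err, feature, threshold)
```

With uniform weights the weighted error is simply the fraction of misclassified points; in later iterations the same search favors classifiers that get the heavily weighted points right.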

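Then calculate the weight $\theta_m$ for the $m$th weak classifier from its weighted classification error $\epsilon_m$:

$$\theta_m = \frac{1}{2} \ln\left(\frac{1 - \epsilon_m}{\epsilon_m}\right)$$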

The weight is positive for any classifier with an accuracy higher than 50%. The more accurate the classifier, the larger the weight, and the weight becomes negative if the accuracy falls below 50%. Such a classifier can still be used by inverting the sign of its prediction: a classifier with 40% accuracy becomes one with 60% accuracy. So the classifier contributes to the final prediction even though it performs worse than random guessing. Only a classifier with exactly 50% accuracy contributes nothing, because its weight is zero and it carries no information.
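A quick numerical check makes the sign behavior concrete. The sketch below assumes the standard AdaBoost weight formula, half the natural log of (1 - error) / error, where the error is one minus the weighted accuracy; the function name is made up for illustration.

```python
import math


def classifier_weight(error):
    """Classifier weight theta = 0.5 * ln((1 - error) / error), for 0 < error < 1."""
    return 0.5 * math.log((1.0 - error) / error)


for accuracy in (0.9, 0.6, 0.5, 0.4):
    theta = classifier_weight(1.0 - accuracy)
    print(f"accuracy={accuracy:.1f}  theta={theta:+.3f}")
```

The 60% and 40% classifiers receive weights of equal size and opposite sign, which is the sign inversion described above, and the 50% classifier receives a weight of zero.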

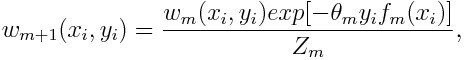

The exponential term in the numerator of the weight update is always greater than 1 for a case misclassified by a positively weighted classifier, so after each iteration the misclassified cases receive larger weights. Negatively weighted classifiers behave the same way, except that after inverting the sign, what were originally correct classifications turn into misclassifications. The final prediction is calculated by evaluating each classifier and summing their weighted predictions.

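The weight of each data point is then updated as follows, where $\theta_m$ is the weight of the $m$th weak classifier $f_m$:

$$w_{m+1}(x_i, y_i) = \frac{w_m(x_i, y_i)\, \exp\left[-\theta_m y_i f_m(x_i)\right]}{Z_m}$$

Here $Z_m$ is the normalization factor. It ensures that all instance weights sum to 1.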
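The update can be sketched as follows, assuming the standard rule in which each weight is multiplied by exp(-theta * y * prediction) and then divided by the normalization factor. The four-point example is invented for illustration: one point is misclassified, so the weighted error is 0.25.

```python
import math


def update_weights(weights, labels, predictions, theta):
    """Scale each weight by exp(-theta * y * prediction), then normalize to sum to 1."""
    unnormalized = [
        w * math.exp(-theta * y * p)
        for w, y, p in zip(weights, labels, predictions)
    ]
    z = sum(unnormalized)  # normalization factor
    return [w / z for w in unnormalized]


weights = [0.25, 0.25, 0.25, 0.25]
labels = [1, 1, -1, -1]
predictions = [1, -1, -1, -1]              # the second point is misclassified
theta = 0.5 * math.log(0.75 / 0.25)        # classifier weight for error 0.25

new_weights = update_weights(weights, labels, predictions, theta)
print(new_weights)
```

For correct predictions the product y * prediction is +1 and the weight shrinks; for mistakes it is -1 and the weight grows. In this example the single misclassified point ends up carrying half of the total weight, so the next weak classifier is pushed to get it right.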

What is AdaBoost Algorithm Used for?

You can use AdaBoost for face detection, where it has long been a standard algorithm for finding faces in images. It uses a rejection cascade consisting of many layers of classifiers. A detection window is rejected as soon as any layer fails to recognize it as a face. The first classifiers in the cascade discard most negative windows, which keeps the computational cost to a minimum. Besides combining weak classifiers, AdaBoost's principles are also used to identify the best features for each layer of the cascade.
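The control flow of a rejection cascade can be sketched as below, assuming the usual behavior in which a window is rejected as soon as any layer fails to accept it. In a real detector each layer would itself be a boosted classifier; here the layers are hypothetical stand-in functions, and the feature names `contrast` and `edge_score` are invented.

```python
def cascade_accepts(window, layers):
    """Return True only if every layer of the cascade accepts the window."""
    for layer in layers:
        if not layer(window):
            return False  # rejected early; later layers are never evaluated
    return True


# Hypothetical layers, ordered from cheapest to most expensive.
layers = [
    lambda w: w["contrast"] > 0.2,
    lambda w: w["edge_score"] > 0.5,
]

print(cascade_accepts({"contrast": 0.9, "edge_score": 0.8}, layers))
print(cascade_accepts({"contrast": 0.1, "edge_score": 0.8}, layers))
```

Because most windows in an image contain no face, rejecting them in the first, cheap layers is what keeps the overall cost low.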

Pros and Cons

One of the many advantages of the AdaBoost algorithm is that it is fast, simple, and easy to program. It is also flexible enough to combine with any machine learning algorithm, and it requires no parameter tuning except for T, the number of boosting rounds (written M above). It has been extended to learning problems beyond binary classification, and it is versatile, as it can be used with text or numeric data.

AdaBoost also has a few disadvantages. Empirical evidence shows that it is particularly vulnerable to uniform noise. Weak classifiers that are too weak can lead to low margins and overfitting.

Example

Consider the admission of students to a university, where each applicant is either admitted or denied. The data here has both quantitative and qualitative aspects. For example, the admission result, a yes/no outcome, can be treated as quantitative, whereas other areas, such as the skills or hobbies of students, can be qualitative. A simple rule, such as admitting a student who excels in a particular subject, can quickly classify the training data better than chance. But a single highly accurate rule is difficult to find, and this is where weak learners come into the picture.
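To make the admission example concrete, here is a minimal end-to-end sketch of AdaBoost with threshold classifiers on a tiny made-up data set. The six applicants, the two features (an exam score and a count of activities), and all function names are invented for illustration, and the formulas are the standard AdaBoost ones rather than anything specific to this article.

```python
import math


def stump_predict(row, feature, threshold, polarity):
    """Vote `polarity` if the feature exceeds the threshold, else the opposite."""
    return polarity if row[feature] > threshold else -polarity


def fit_adaboost(X, y, rounds):
    n = len(X)
    weights = [1.0 / n] * n          # uniform initial weights
    ensemble = []                    # (theta, feature, threshold, polarity)
    for _ in range(rounds):
        best = None
        for feature in range(len(X[0])):
            for threshold in sorted({row[feature] for row in X}):
                for polarity in (1, -1):
                    preds = [stump_predict(r, feature, threshold, polarity) for r in X]
                    err = sum(w for w, p, t in zip(weights, preds, y) if p != t)
                    if best is None or err < best[0]:
                        best = (err, feature, threshold, polarity, preds)
        err, feature, threshold, polarity, preds = best
        err = min(max(err, 1e-10), 1 - 1e-10)      # guard against log(0)
        theta = 0.5 * math.log((1 - err) / err)    # classifier weight
        ensemble.append((theta, feature, threshold, polarity))
        # Raise the weights of misclassified points, then normalize.
        weights = [w * math.exp(-theta * t * p) for w, t, p in zip(weights, y, preds)]
        z = sum(weights)
        weights = [w / z for w in weights]
    return ensemble


def predict(ensemble, row):
    score = sum(th * stump_predict(row, f, t, pol) for th, f, t, pol in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical applicants: [exam score, number of activities]; 1 = admitted.
X = [[90, 2], [80, 1], [60, 5], [65, 1], [50, 2], [55, 0]]
y = [1, 1, 1, -1, -1, -1]

ensemble = fit_adaboost(X, y, rounds=3)
print([predict(ensemble, row) for row in X])
```

No single threshold on either feature separates these applicants, but three weighted threshold rules together classify all six training points correctly.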

Conclusion

AdaBoost reweights the training set for each new classifier based on the results of the previous one. It also determines how much weight each classifier's proposed answer receives when the results are combined. By combining weak learners into a strong one that corrects classification errors, it became the first successful boosting algorithm for binary classification problems.
