Updated March 22, 2023

Introduction to ETL Process

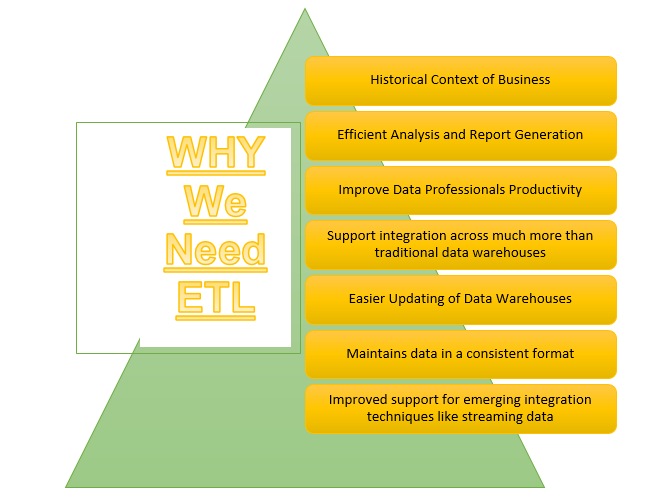

ETL is one of the key processes behind Business Intelligence (BI). BI relies on data stored in data warehouses, from which analyses and reports are generated. These help organizations build more effective strategies and support tactical and operational insights and decision-making. ETL stands for Extract, Transform, and Load. It is a data integration process in which data from different sources is extracted, transformed into a specific format according to business requirements, and then loaded into a data warehouse.

Various tools that help to perform these tasks are:

- IBM DataStage

- Ab Initio

- Informatica

- Tableau

- Talend

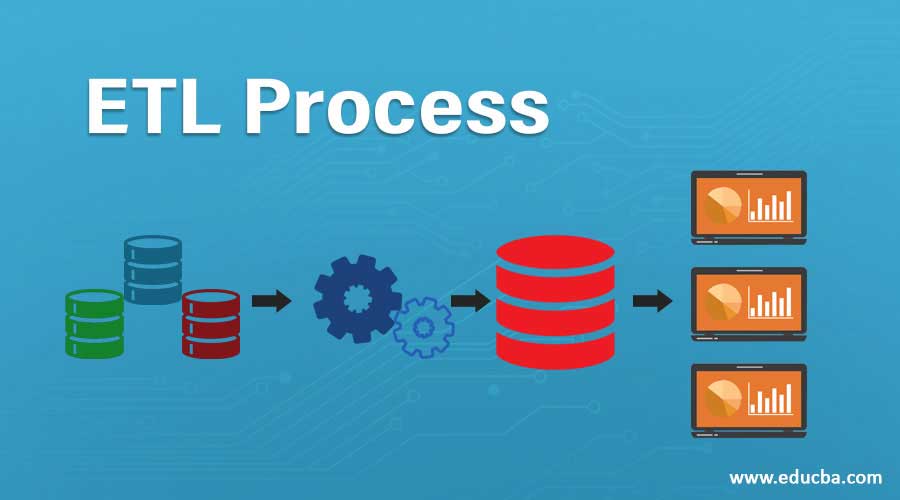

ETL Process

How does it Work?

ETL is a three-step process. Data is first extracted from various sources. The raw data then undergoes transformations that make it suitable for the data warehouse. Finally, it is loaded into the warehouse in the required format, ready for analysis.
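The three steps can be sketched as a minimal pipeline in Python. This is an illustration only. It assumes a small in-memory CSV export as a hypothetical source and an in-memory SQLite database standing in for the data warehouse. The `extract`, `transform`, and `load` functions and the `sales` table are hypothetical names, not part of any ETL tool.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a small CSV export standing in for a source system.
SOURCE_CSV = """order_id,region,amount
1,north,120.50
2,south,
3,north,80.00
"""

def extract(raw_csv: str) -> list:
    """Extract: read raw rows from the source into a list (the staging area)."""
    return list(csv.DictReader(io.StringIO(raw_csv)))

def transform(rows: list) -> list:
    """Transform: drop rows with a missing amount and convert field types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # cleansing: skip incomplete records
        cleaned.append((int(row["order_id"]), row["region"].upper(), float(row["amount"])))
    return cleaned

def load(rows: list, conn: sqlite3.Connection) -> None:
    """Load: write the transformed rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (order_id INTEGER PRIMARY KEY, region TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # in-memory SQLite stands in for the warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
print(warehouse.execute("SELECT * FROM sales ORDER BY order_id").fetchall())
```

Real ETL tools add scheduling, connectors, and error handling, but the overall shape (extract into staging, transform, then load) is the same.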

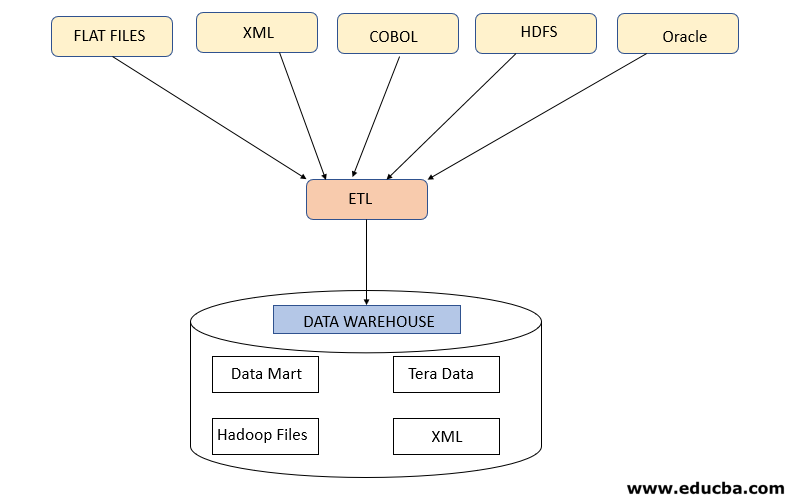

Step 1: Extract

This step fetches the required data from various sources, which may be in different formats such as XML, Hadoop files, flat files, JSON, etc. The extracted data is stored in a staging area, where further transformations are performed. The data is checked thoroughly there before it is moved to the data warehouse, because changes are difficult to revert once they reach the warehouse. A proper data map between source and target is required before extraction begins, since the ETL process must interact with many systems while fetching data, such as Oracle, hardware and mainframe systems, real-time systems such as ATMs, Hadoop, etc.

Care must be taken that these source systems remain unaffected during extraction.
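As an illustration of extracting from sources in different formats into one common staging shape, the sketch below reads hypothetical JSON, CSV, and XML payloads with Python's standard library and applies a simple source-to-target data map. The payloads, field names, and the `DATA_MAP` structure are assumptions for this example. The sources are only read, never modified, in keeping with leaving source systems unaffected.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical source payloads in three different formats.
JSON_SOURCE = '[{"cust_id": "C1", "full_name": "Ana"}]'
CSV_SOURCE = "customer,name\nC2,Ben\n"
XML_SOURCE = "<customers><customer id='C3'><name>Cy</name></customer></customers>"

# A simple data map: source field name -> target (staging) field name.
DATA_MAP = {
    "json": {"cust_id": "customer_id", "full_name": "name"},
    "csv": {"customer": "customer_id", "name": "name"},
}

def rename(record: dict, mapping: dict) -> dict:
    """Apply the data map to one source record."""
    return {target: record[source] for source, target in mapping.items()}

def extract_all() -> list:
    staging = []
    for rec in json.loads(JSON_SOURCE):
        staging.append({**rename(rec, DATA_MAP["json"]), "source": "json"})
    for rec in csv.DictReader(io.StringIO(CSV_SOURCE)):
        staging.append({**rename(rec, DATA_MAP["csv"]), "source": "csv"})
    for elem in ET.fromstring(XML_SOURCE).iter("customer"):
        # XML needs a structural mapping (attribute plus child element), done inline here.
        staging.append({"customer_id": elem.get("id"), "name": elem.findtext("name"), "source": "xml"})
    return staging

staging_area = extract_all()
```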

Data Extraction Strategies:

- Full Extraction: All data from the sources is extracted and loaded into the data warehouse. This is used either when the data warehouse is being populated for the first time or when no strategy exists for identifying changed data.

- Partial Extraction (with update notification): Also known as delta extraction, this strategy extracts only the data that has changed, as notified by the source system, and uses it to update the data warehouse.

- Partial Extraction (without update notification): The source does not send update notifications, so instead of extracting the whole data set, only the specific data required for the load is extracted from the sources.
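The difference between full and partial extraction can be sketched with SQL queries against a source table. This assumes a hypothetical `orders` table with an `updated_at` column maintained by the source, which lets the ETL job identify modified rows on its own (the case without update notification). A notification-based setup would instead consume change events pushed by the source, which is not shown here.

```python
import sqlite3

# Hypothetical source system: an orders table with a last-modified date (ISO strings).
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, 10.0, "2023-03-01"), (2, 20.0, "2023-03-10"), (3, 30.0, "2023-03-20")],
)
source.commit()

def full_extract(conn: sqlite3.Connection) -> list:
    """Full extraction: pull every row, e.g. for the first warehouse load."""
    return conn.execute("SELECT id, amount, updated_at FROM orders ORDER BY id").fetchall()

def delta_extract(conn: sqlite3.Connection, last_run: str) -> list:
    """Partial extraction: pull only rows modified after the previous run."""
    return conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY id",
        (last_run,),
    ).fetchall()

changed = delta_extract(source, "2023-03-05")
```

ISO-formatted date strings compare correctly as text, which is why a plain `>` works for the watermark in this sketch.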

Step 2: Transform

This is the most important step of ETL. Here, many transformations are applied to make the data ready for loading into the data warehouse:

a. Basic Transformations: These transformations are applied in every scenario, as they are a basic requirement when loading data extracted from various sources into the data warehouse.

- Data Cleansing or Enrichment: Undesired data is cleaned out of the staging area so that incorrect data is not loaded into the data warehouse.

- Filtering: Only the data required by the business is selected from the large amount available. For example, generating a sales report for a specific year requires only that year's sales records.

- Consolidation: Extracted data is consolidated into the required format before it is loaded into the data warehouse.

- Standardization: Data fields are transformed into the same required format; for example, all date fields might be required to follow MM/DD/YYYY.
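A minimal sketch of the basic transformations follows, assuming hypothetical staged sales rows from two stores that use different date formats (`YYYY-MM-DD` and `DD.MM.YYYY`). It consolidates the two extracts, cleanses rows with a missing amount, filters to one year, and standardizes dates to MM/DD/YYYY. The field names and input formats are assumptions for illustration.

```python
from datetime import datetime

# Assumed date formats used by the (hypothetical) source systems.
INPUT_FORMATS = ("%Y-%m-%d", "%d.%m.%Y")

def parse_date(value: str) -> datetime:
    """Try each known source format; reject values that match none."""
    for fmt in INPUT_FORMATS:
        try:
            return datetime.strptime(value, fmt)
        except ValueError:
            pass
    raise ValueError(f"unrecognized date: {value!r}")

def basic_transform(rows: list, year: int) -> list:
    out = []
    for row in rows:
        if not row["amount"]:  # cleansing: drop incomplete records
            continue
        parsed = parse_date(row["sale_date"])
        if parsed.year != year:  # filtering: keep only the requested year
            continue
        out.append({
            "id": row["id"],
            "sale_date": parsed.strftime("%m/%d/%Y"),  # standardization
            "amount": float(row["amount"]),
        })
    return out

# Hypothetical extracts from two stores with different date conventions.
store_a = [
    {"id": 1, "sale_date": "2023-01-15", "amount": "100"},
    {"id": 2, "sale_date": "2023-02-01", "amount": ""},
]
store_b = [
    {"id": 3, "sale_date": "31.12.2022", "amount": "50"},
    {"id": 4, "sale_date": "03.03.2023", "amount": "75"},
]

# Consolidation: combine both extracts before transforming them together.
report_rows = basic_transform(store_a + store_b, year=2023)
```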

b. Advanced Transformations: These types of transformations are specific to the business requirements.

- Joining: Data from two or more sources is combined to produce only the desired columns, keeping the rows that are related to each other.

- Data Threshold Validation Check: Values in various fields are checked for correctness; for example, bank account numbers must not be null in banking data.

- Use Lookups to Merge Data: Flat files or other files are used as lookup sources to fetch specific information and merge it into the data.

- Using Complex Data Validation: Complex validation rules are applied so that only valid data is extracted from the source systems.

- Calculated and Derived Values: Calculations are applied to transform the data into the required information.

- Deduplication: Duplicate data coming from the source systems is identified and removed before it is loaded into the data warehouse.

- Key Restructuring: When capturing slowly changing data, surrogate keys need to be generated to structure the data in the required format.
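Several of the advanced transformations can be combined in a single pass, as in the sketch below. It assumes hypothetical order records and a customer lookup table (in practice this might be loaded from a flat file). It applies a validation check, an inner join via lookup, deduplication, a derived total, and sequential surrogate keys. The surrogate keys are a simplification: full slowly-changing-dimension handling, such as keeping versioned history rows, is not shown.

```python
import itertools

# Hypothetical extracted orders: order 2 arrives twice, order 3 fails validation,
# and order 4 has no matching customer in the lookup table.
orders = [
    {"order_id": 1, "cust_code": "A", "qty": 2, "unit_price": 5.0},
    {"order_id": 2, "cust_code": "B", "qty": 1, "unit_price": 9.5},
    {"order_id": 2, "cust_code": "B", "qty": 1, "unit_price": 9.5},
    {"order_id": 3, "cust_code": None, "qty": 4, "unit_price": 1.0},
    {"order_id": 4, "cust_code": "Z", "qty": 1, "unit_price": 3.0},
]
# Hypothetical lookup table mapping customer codes to names.
customers = {"A": "Acme Ltd", "B": "Beta Inc"}

def advanced_transform(rows: list, lookup: dict) -> list:
    seen_ids = set()
    next_key = itertools.count(start=1)  # source of warehouse surrogate keys
    result = []
    for row in rows:
        # Validation check: customer code must be present and quantity positive.
        if row["cust_code"] is None or row["qty"] <= 0:
            continue
        # Lookup/join: keep only rows with a matching customer (inner-join semantics).
        name = lookup.get(row["cust_code"])
        if name is None:
            continue
        # Deduplication on the business key.
        if row["order_id"] in seen_ids:
            continue
        seen_ids.add(row["order_id"])
        result.append({
            "order_sk": next(next_key),               # surrogate key
            "order_id": row["order_id"],
            "customer": name,
            "total": row["qty"] * row["unit_price"],  # derived value
        })
    return result

fact_rows = advanced_transform(orders, customers)
```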

MPP (massively parallel processing) is sometimes used to perform basic operations such as filtering or cleansing on data in the staging area, so that large amounts of data can be processed faster.
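The idea behind MPP (split the data into partitions, process each partition independently, then combine the results) can be sketched on a single machine. This is an analogy, not an MPP system: the hypothetical `parallel_filter` below uses a thread pool where a real MPP engine would use separate nodes, and in CPython threads will not speed up CPU-bound pure-Python work.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows: list, n: int) -> list:
    """Round-robin split of rows into n partitions."""
    return [rows[i::n] for i in range(n)]

def filter_partition(part: list) -> list:
    """Work applied independently to each partition: keep positive amounts."""
    return [amount for amount in part if amount > 0]

def parallel_filter(rows: list, workers: int = 4) -> list:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each partition is filtered by its own worker; results are then merged.
        filtered_parts = pool.map(filter_partition, partition(rows, workers))
        return [amount for part in filtered_parts for amount in part]

staged_amounts = [5, -1, 3, 0, -2, 8, 7]
kept = parallel_filter(staged_amounts)
```

Because the partitions are round-robin, the merged output is not in the original input order; a real pipeline would sort or key the rows if order mattered.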

Step 3: Load

This step loads the transformed data into the data warehouse, from where it can be used for analytics, decision-making, and reporting.

- Initial Load: This type of load occurs while loading data in data warehouses for the first time.

- Incremental Load: This is the type of load that is done to update the data warehouse on a periodic basis with changes occurring in source system data.

- Full Refresh: All data in a table is deleted, and the table is reloaded with fresh data.
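The three load types can be sketched against SQLite, used here as a stand-in for a warehouse. The `dim_product` table and the row values are hypothetical. For the incremental load the sketch uses SQLite's `INSERT OR REPLACE`, which overwrites a row whose primary key already exists; warehouses typically offer their own merge or upsert statements instead.

```python
import sqlite3

def initial_load(conn: sqlite3.Connection, rows: list) -> None:
    """Initial load: create the table and insert everything for the first time."""
    conn.execute("CREATE TABLE dim_product (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
    conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", rows)
    conn.commit()

def incremental_load(conn: sqlite3.Connection, changed_rows: list) -> None:
    """Incremental load: insert new rows and overwrite changed ones by primary key."""
    conn.executemany("INSERT OR REPLACE INTO dim_product VALUES (?, ?, ?)", changed_rows)
    conn.commit()

def full_refresh(conn: sqlite3.Connection, rows: list) -> None:
    """Full refresh: delete all rows and reload the table in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM dim_product")
        conn.executemany("INSERT INTO dim_product VALUES (?, ?, ?)", rows)

def snapshot(conn: sqlite3.Connection) -> list:
    return conn.execute("SELECT * FROM dim_product ORDER BY id").fetchall()

conn = sqlite3.connect(":memory:")
initial_load(conn, [(1, "pen", 1.0), (2, "ink", 3.0)])
after_initial = snapshot(conn)
incremental_load(conn, [(2, "ink", 3.5), (3, "pad", 2.0)])
after_incremental = snapshot(conn)
full_refresh(conn, [(9, "box", 4.0)])
after_refresh = snapshot(conn)
```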

The data warehouse then supports OLAP (online analytical processing) workloads such as analytical queries and reporting.

Disadvantages of ETL Process

The main disadvantages are:

- Increasing Data: There is a limit to how much data an ETL tool can extract from various sources and push to data warehouses. As data volumes grow, working with the ETL tool and the data warehouse becomes cumbersome.

- Customization: Businesses often need fast, effective responses to the data generated by source systems, and routing that data through an ETL tool slows this process down.

- Expensive: Storing the ever-increasing amount of data generated periodically in a data warehouse is a significant cost for an organization.

Conclusion

An ETL tool comprises the extract, transform, and load processes and helps generate information from data gathered from various source systems. Data from source systems can arrive in any format and may need to be loaded into the data warehouse in any desired format, so an ETL tool must support connectivity to all of these formats.
