Updated March 23, 2023

Introduction to Ensemble Methods in Machine Learning

An ensemble method in machine learning is a multi-model approach in which different classifiers and techniques are strategically combined into a single predictive model. Ensemble methods are commonly grouped as sequential, parallel, homogeneous, and heterogeneous. Depending on the technique, an ensemble can reduce the variance of the predictions, reduce the bias of the predictive model, and classify or predict on complex problems with better accuracy than a single model.

Types of Ensemble Methods in Machine Learning

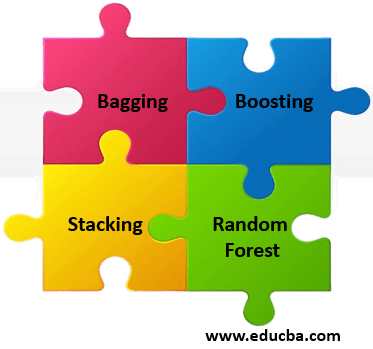

Ensemble methods create multiple models and then combine them to produce improved results. They are commonly categorized into the following groups:

1. Sequential Methods

In this kind of ensemble method, the base learners are generated sequentially, and each learner depends on the ones before it. The samples that earlier learners mislabeled are given more weight, so later learners concentrate on them and the performance of the overall system improves.

Example: Boosting

2. Parallel Methods

In this kind of ensemble method, the base learners are generated in parallel and do not depend on one another. Each base learner is trained independently.

Example: Bagging and Random Forest. The lower-level models in stacking are also trained in parallel.

3. Homogeneous Ensemble

A homogeneous ensemble combines classifiers of the same type, each trained on a different subset of the data. Aggregating the results of the individual models makes the combined model more precise. This type of ensemble is suited to large datasets. In the homogeneous method, the feature selection method is the same for the different training sets. It is computationally expensive.

Example: Popular methods such as bagging and boosting are homogeneous ensembles.

4. Heterogeneous Ensemble

A heterogeneous ensemble combines different types of classifiers or machine learning models, each built on the same data. Such a method is suited to small datasets. In the heterogeneous method, the feature selection method differs between models for the same training data. The overall result is obtained by combining the results of the individual models, for example by averaging them.

Example: Stacking
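The averaging step of a heterogeneous ensemble can be sketched in a few lines of Python. This is only an illustration: the three probability lists are hypothetical outputs of three different model types on the same four samples, and `soft_vote` is a name introduced here, not part of any library.

```python
from statistics import mean

def soft_vote(probabilities_per_model, threshold=0.5):
    """Average class-1 probabilities across models, then apply a threshold."""
    # zip(*...) groups the predictions that belong to the same sample.
    averaged = [mean(sample) for sample in zip(*probabilities_per_model)]
    labels = [1 if p >= threshold else 0 for p in averaged]
    return averaged, labels

# Hypothetical class-1 probabilities from three different model types.
tree_probs = [0.9, 0.2, 0.6, 0.4]
logistic_probs = [0.8, 0.3, 0.4, 0.1]
knn_probs = [1.0, 0.0, 0.8, 0.4]

averaged, labels = soft_vote([tree_probs, logistic_probs, knn_probs])
print(labels)
```

On the third sample the models disagree (0.6, 0.4, 0.8), and the average decides the label.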

Technical Classification of Ensemble Methods

Below is the technical classification of ensemble methods:

1. Bagging

Bagging combines two steps, bootstrapping and aggregation, into a single ensemble model. The objective of bagging is to reduce the high variance of a model; decision trees, for example, have high variance and low bias. A large dataset (say, 1000 samples) is sub-sampled (say, into 10 sub-samples of 100 samples each), with each sub-sample chosen randomly with replacement. A decision tree is built on each sub-sample. To keep the bias low, each individual tree is grown deep on its sub-sampled training data. The results of the trees are then aggregated, by voting or averaging, to produce the final prediction. Because the individual trees overfit their sub-samples in different ways, aggregation reduces the variance and the concern of over-fitting. The accuracy of a bagged model depends on the number of decision trees used. Since every tree considers the same features, the outputs of the trees remain highly correlated.
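The bootstrap-and-aggregate procedure described above can be sketched with the Python standard library alone. To keep the example short it makes several assumptions that are not in the text: the data is a toy one-dimensional set, each "tree" is a single-split stump rather than a deep tree, and the function names are invented for illustration.

```python
import random
from collections import Counter

def fit_stump(points):
    """Fit a one-split 'tree' on (x, label) pairs by minimizing training errors."""
    best = None
    for threshold in sorted({x for x, _ in points}):
        for left_label in (0, 1):
            errors = sum(
                (left_label if x <= threshold else 1 - left_label) != label
                for x, label in points
            )
            if best is None or errors < best[0]:
                best = (errors, threshold, left_label)
    _, threshold, left_label = best
    return lambda x: left_label if x <= threshold else 1 - left_label

def bagging_fit(points, n_models, sample_size, rng):
    """Train one stump per bootstrap sample (drawn with replacement)."""
    return [fit_stump(rng.choices(points, k=sample_size)) for _ in range(n_models)]

def bagging_predict(models, x):
    """Aggregate the individual predictions by majority vote."""
    votes = Counter(model(x) for model in models)
    return votes.most_common(1)[0][0]

# Toy data: label 0 below 5, label 1 from 5 upward.
data = [(x, int(x >= 5)) for x in range(10)]
models = bagging_fit(data, n_models=25, sample_size=len(data), rng=random.Random(0))
print([bagging_predict(models, x) for x in (0, 9)])
```

Each stump sees a slightly different sample, so individual thresholds vary; the vote smooths out those differences.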

2. Boosting

Boosting also combines classifiers of the same type. It is a sequential ensemble method in which each model is trained using the results of the previous one: the samples that earlier models misclassified receive more weight when the next model is trained. In this way, boosting builds a strong learner from weak learners by combining their predictions in a weighted sum. In other words, the strong model depends on multiple weak models. A weak learner, or weakly trained model, is one that is only slightly correlated with the true classification. Each added weak learner makes the combined model slightly more correlated with the true classification. The combination of these weak learners gives a strong learner that is well correlated with the true classification.
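As one concrete instance of boosting, here is a minimal AdaBoost-style sketch in plain Python. It is an assumption-laden illustration rather than the only way to boost: the weak learners are one-split stumps, the ten-point dataset is a toy example, labels are +1 and -1, and all function names are hypothetical.

```python
import math

def best_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    best = None
    for threshold in [x + 0.5 for x in xs]:
        for polarity in (1, -1):
            # polarity=1 predicts +1 left of the threshold and -1 right of it.
            preds = [polarity if x < threshold else -polarity for x in xs]
            error = sum(w for w, p, y in zip(weights, preds, ys) if p != y)
            if best is None or error < best[0]:
                best = (error, threshold, polarity)
    return best

def adaboost_fit(xs, ys, rounds):
    weights = [1.0 / len(xs)] * len(xs)
    ensemble = []
    for _ in range(rounds):
        error, threshold, polarity = best_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)  # guard against log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this learner's vote weight
        ensemble.append((alpha, threshold, polarity))
        # Raise the weights of misclassified samples and lower the rest.
        preds = [polarity if x < threshold else -polarity for x in xs]
        weights = [w * math.exp(-alpha * y * p) for w, y, p in zip(weights, ys, preds)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Sign of the weighted sum of the weak learners' votes."""
    score = sum(a * (pol if x < thr else -pol) for a, thr, pol in ensemble)
    return 1 if score >= 0 else -1

xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]
ensemble = adaboost_fit(xs, ys, rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])
```

No single stump can separate this data (the best one misclassifies three of the ten points), but the weighted vote of three stumps classifies every training point correctly.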

3. Stacking

Stacking combines multiple classification or regression techniques using a meta-classifier or meta-model. The lower-level models are trained on the complete training dataset, and the combined model is then trained on the outputs of the lower-level models. Unlike boosting, the lower-level models are trained in parallel. Their predictions are used as the training input for the next model, forming a stack in which each higher layer learns from the layer below it. The top-layer model is built on the lower-level models and typically has better prediction accuracy than any of them. Layers can be added until the prediction error stops improving. The prediction of the combined model, or meta-model, is therefore based on the predictions of the different weak, lower-layer models. Stacking aims to produce a model with less bias.
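A two-layer stack can be sketched in plain Python as follows. The details are assumptions made for illustration: the task is one-dimensional regression on made-up data, the two base models are a least-squares line and a 1-nearest-neighbour regressor, and the meta-model is a weighted sum fitted by least squares. The sketch also feeds the meta-model out-of-fold predictions, a common precaution so that it does not learn from overfitted base outputs; the text above does not prescribe this.

```python
def fit_line(xs, ys):
    """Base model A: ordinary least-squares line."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x

def fit_nearest(xs, ys):
    """Base model B: 1-nearest-neighbour regressor."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def out_of_fold(fit, xs, ys, n_folds=2):
    """Predict every point with a model that did not see it during training."""
    preds = [0.0] * len(xs)
    for fold in range(n_folds):
        train = [i for i in range(len(xs)) if i % n_folds != fold]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in range(fold, len(xs), n_folds):
            preds[i] = model(xs[i])
    return preds

def fit_meta(a, b, ys):
    """Meta-model: least-squares weights for y ~ w_a * a + w_b * b."""
    saa = sum(p * p for p in a)
    sbb = sum(q * q for q in b)
    sab = sum(p * q for p, q in zip(a, b))
    say = sum(p * y for p, y in zip(a, ys))
    sby = sum(q * y for q, y in zip(b, ys))
    det = saa * sbb - sab * sab  # 2x2 normal equations via Cramer's rule
    return (say * sbb - sby * sab) / det, (sby * saa - say * sab) / det

def mse(preds, targets):
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(targets)

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
ys = [0.0, 2.5, 3.5, 6.0, 8.5, 9.5, 12.0, 14.5]

oof_line = out_of_fold(fit_line, xs, ys)
oof_near = out_of_fold(fit_nearest, xs, ys)
w_line, w_near = fit_meta(oof_line, oof_near, ys)

# The final base models are refit on all data; the meta-model blends them.
line, nearest = fit_line(xs, ys), fit_nearest(xs, ys)

def stacked(x):
    return w_line * line(x) + w_near * nearest(x)

stacked_oof = [w_line * a + w_near * b for a, b in zip(oof_line, oof_near)]
print(mse(oof_line, ys), mse(oof_near, ys), mse(stacked_oof, ys))
```

Because the meta-model could always put all its weight on one base model, its error on the out-of-fold predictions is never worse than that of the better base model.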

4. Random Forest

Random forest differs slightly from bagging. Like bagging, it uses deep trees fitted on bootstrap samples, and the outputs of the trees are combined to reduce variance. While growing each tree, however, we do not only generate a bootstrap sample of the observations in the dataset; we also sample the features and use only a random subset of them to build the tree. In other words, sampling the dataset by features reduces the correlation between the outputs of different trees. Random forest is also more robust to missing data, because an observation with missing values can still be handled by the trees that do not use the missing features. Since each tree is built from a random subset of samples and features, each tree has a different structure and the chance of getting closely related predictions is lower. This slightly increases the bias of the forest, but averaging the less correlated predictions of the different trees decreases the variance and gives better overall performance.
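The variance argument can be made concrete with a standard result for averages of correlated variables. As an assumption for illustration (none of these symbols appear in the text above), suppose the forest averages B tree predictions T_b(x), each with variance σ² and with pairwise correlation ρ ≥ 0 between any two trees:

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B} T_b(x)\right)
  = \rho\,\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}
```

Adding more trees shrinks only the second term. The first term remains however large B becomes, which is why lowering the correlation ρ through feature sampling reduces the variance further than bagging alone.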

Conclusion

The multi-model approach of ensemble learning can be applied to many kinds of models, including deep learning models, in which complex data is studied and processed through different combinations of classifiers to get better predictions or classifications. The predictions of the individual models in an ensemble should be as uncorrelated as possible. This helps keep the bias and variance of the combined model low, so the model is more efficient and predicts the output with lower error. Ensemble learning as described here is supervised learning, because the models are first trained on a labeled dataset and then used to make predictions. Some theoretical work suggests that using as many component classifiers as there are class labels gives the highest accuracy.
