Updated March 20, 2023

Introduction to Restricted Boltzmann machine

A restricted Boltzmann machine (RBM) is a method that can automatically find patterns in data by reconstructing its input. Geoffrey Hinton, often described as one of the founders of deep learning, played a major role in popularizing RBMs. An RBM is a shallow, two-layer network: the first layer is the visible layer, and the second is the hidden layer. Every node in the visible layer is connected to every node in the hidden layer. The machine is called restricted because nodes within the same layer are not connected to each other. An RBM is the numerical equivalent of a two-way translator. In the forward pass, an RBM takes the input and converts it into a set of numbers that encodes the input. In the backward pass, it takes this set of numbers and translates it back to form reconstructed inputs. A well-trained network can perform this backward translation with high accuracy. In both steps, the weights and biases play a very important role: they allow the RBM to decode the interrelationships among the inputs and help it decide which input values are most important in detecting the correct outputs.

Working of Restricted Boltzmann machine

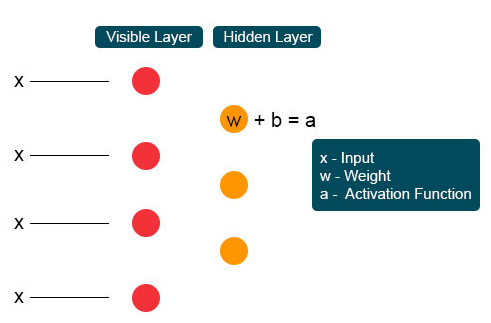

Each visible node receives a low-level value from an item in the dataset (for example, one pixel of an image). At the first node of the hidden layer, the input x is multiplied by a weight and added to a bias. The result is fed to an activation function, which produces the node's output: the strength of the signal passing through it, given input x.
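A minimal sketch of this single-node computation in Python, assuming a logistic sigmoid activation (a common choice for binary RBMs); the function names and numbers are illustrative and not taken from any library:

```python
import math


def sigmoid(z):
    """Logistic activation: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def hidden_node_output(x, w, bias):
    """Multiply the input by its weight, add the bias, and apply the activation."""
    return sigmoid(x * w + bias)


# Hypothetical values: x * w + bias = 1.0 * 0.5 - 0.5 = 0.0, and sigmoid(0.0) = 0.5.
out = hidden_node_output(x=1.0, w=0.5, bias=-0.5)
print(out)  # 0.5
```

A larger weight or bias pushes the output toward 1, and a more negative one pushes it toward 0.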

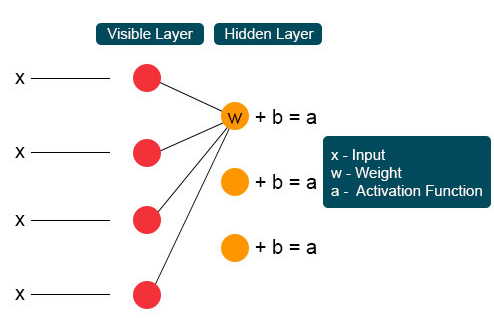

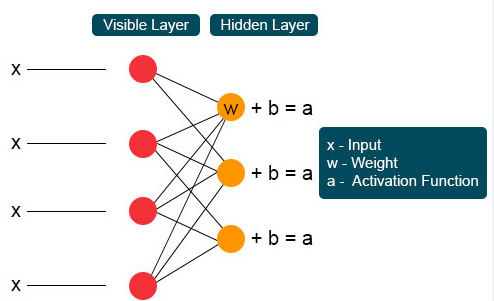

Next, several inputs combine at a single hidden node. Each input x is multiplied by its own weight w, the products are summed and added to a bias, and the result is again passed through the activation function to give the node's output. Because every input is connected to every hidden node, each input x here has three weights, one per hidden node, making twelve weights in total (4 visible nodes × 3 hidden nodes). The weights between the two layers form a matrix whose rows correspond to the input (visible) nodes and whose columns correspond to the output (hidden) nodes.

Each hidden node receives the four inputs multiplied by their respective weights. The sum of these products is added to a bias, which ensures that at least some activations happen, and the result is passed through the activation function, producing one output for each hidden node.
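The forward pass for the whole layer, together with the backward (reconstruction) pass described in the introduction, can be sketched in plain Python. This assumes the 4-visible, 3-hidden layout above, sigmoid activations, zero biases, and small random weights; `W[j][i]` is taken to connect visible node j to hidden node i, and all names are hypothetical:

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(v, W, c):
    """Visible -> hidden: hidden node i sums v[j] * W[j][i] over all inputs j, adds c[i], applies sigmoid."""
    return [sigmoid(sum(v[j] * W[j][i] for j in range(len(v))) + c[i]) for i in range(len(c))]


def backward(h, W, b):
    """Hidden -> visible (reconstruction): the same weights are reused in the other direction."""
    return [sigmoid(sum(W[j][i] * h[i] for i in range(len(h))) + b[j]) for j in range(len(b))]


random.seed(0)
n_visible, n_hidden = 4, 3
# One row per visible (input) node, one column per hidden node: 4 x 3 = 12 weights.
W = [[random.gauss(0.0, 0.1) for _ in range(n_hidden)] for _ in range(n_visible)]
b = [0.0] * n_visible  # visible biases
c = [0.0] * n_hidden   # hidden biases

v = [1.0, 0.0, 1.0, 1.0]     # one hypothetical binary input example
h = forward(v, W, c)         # three hidden activations
v_recon = backward(h, W, b)  # four reconstructed input values
error = sum((a - r) ** 2 for a, r in zip(v, v_recon))
print(len(h), len(v_recon), round(error, 3))
```

Training adjusts `W`, `b`, and `c` so that this reconstruction error shrinks on the data.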

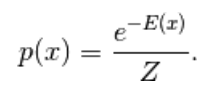

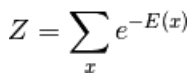

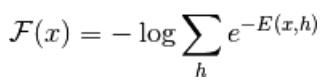

The derivation starts from energy-based models (EBMs). An EBM associates a scalar energy with every configuration of the variables of interest and defines a probability distribution through this energy function as follows:

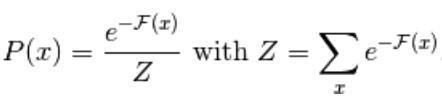

$$p(x) = \frac{e^{-E(x)}}{Z} \tag{1}$$

Here Z is the normalizing factor, known as the partition function by analogy with physical systems:

$$Z = \sum_x e^{-E(x)}$$

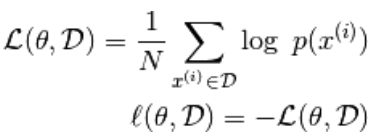

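An energy-based model can be trained by performing (stochastic) gradient descent on the empirical negative log-likelihood of the training data $\mathcal{D}$. As with logistic regression, the first step defines the log-likelihood, and the next defines the loss function as the negative log-likelihood:

$$\mathcal{L}(\theta, \mathcal{D}) = \frac{1}{|\mathcal{D}|} \sum_{x^{(i)} \in \mathcal{D}} \log p(x^{(i)}), \qquad \ell(\theta, \mathcal{D}) = -\mathcal{L}(\theta, \mathcal{D})$$

The loss is minimized using the stochastic gradient $-\partial \log p(x^{(i)}) / \partial \theta$, where $\theta$ are the parameters of the model.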
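For a state space small enough to enumerate, equation (1) and the negative log-likelihood loss can be computed exactly. This sketch assumes a hypothetical one-parameter energy E(x) = -θx over the states {0, 1, 2, 3}; real models cannot sum over every state, which is why sampling methods are needed:

```python
import math


def probabilities(energy, states):
    """Equation (1): p(x) = exp(-E(x)) / Z, with Z summed over every state."""
    unnormalized = [math.exp(-energy(x)) for x in states]
    z = sum(unnormalized)  # partition function Z
    return {x: u / z for x, u in zip(states, unnormalized)}


def negative_log_likelihood(energy, states, data):
    """Loss = -(1/|D|) * sum of log p(x) over the training examples in D."""
    p = probabilities(energy, states)
    return -sum(math.log(p[x]) for x in data) / len(data)


STATES = [0, 1, 2, 3]
THETA = 0.5  # hypothetical parameter value


def toy_energy(x):
    """Hypothetical energy: larger x gives lower energy, hence higher probability."""
    return -THETA * x


p = probabilities(toy_energy, STATES)
print(p)
print(negative_log_likelihood(toy_energy, STATES, data=[3, 3, 2]))
```

Gradient descent on this loss would raise `THETA` when the data favors large x, lowering their energy.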

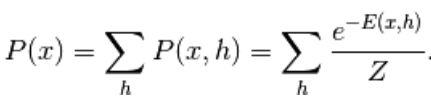

An energy-based model can also contain hidden (unobserved) units, denoted h, while the observed part is denoted x.

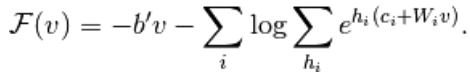

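Starting from equation (1), the marginal probability of x and the free energy F(x) are defined as follows:

$$P(x) = \sum_h P(x, h) = \sum_h \frac{e^{-E(x, h)}}{Z} \tag{2}$$

$$F(x) = -\log \sum_h e^{-E(x, h)} \tag{3}$$

With this definition, $P(x) = e^{-F(x)} / Z$, where $Z = \sum_x e^{-F(x)}$.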
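Equations (2) and (3) can be checked numerically on a tiny model. This sketch assumes a hypothetical random energy table `E[x][h]` with three visible states and two hidden states, and confirms that normalizing exp(-F(x)) gives the same marginal as summing the joint over h:

```python
import math
import random

random.seed(1)
xs, hs = range(3), range(2)
# Hypothetical energy table E[x][h] for 3 visible states and 2 hidden states.
E = [[random.uniform(-1.0, 1.0) for _ in hs] for _ in xs]

Z = sum(math.exp(-E[x][h]) for x in xs for h in hs)


def marginal(x):
    """Equation (2): P(x) = sum over h of exp(-E(x, h)) / Z."""
    return sum(math.exp(-E[x][h]) for h in hs) / Z


def free_energy(x):
    """Equation (3): F(x) = -log sum over h of exp(-E(x, h))."""
    return -math.log(sum(math.exp(-E[x][h]) for h in hs))


Z_free = sum(math.exp(-free_energy(x)) for x in xs)
for x in xs:
    print(x, marginal(x), math.exp(-free_energy(x)) / Z_free)
```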

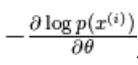

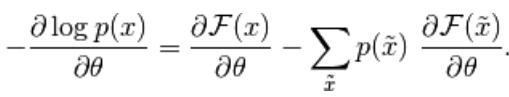

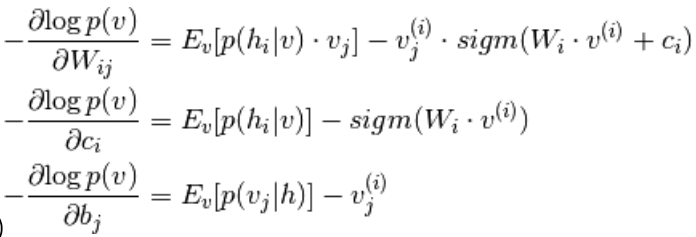

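The gradient of the negative log-likelihood then has the following form:

$$-\frac{\partial \log p(x)}{\partial \theta} = \frac{\partial F(x)}{\partial \theta} - \sum_{\tilde{x}} p(\tilde{x}) \frac{\partial F(\tilde{x})}{\partial \theta} \tag{4}$$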

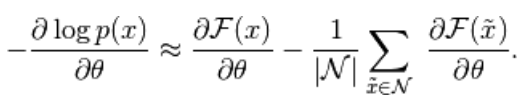

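This gradient contains two terms, referred to as the positive and negative phases. The terms positive and negative do not refer to the signs in the equation; they reflect each term's effect on the probability density defined by the model. The first term increases the probability of the training data by reducing the corresponding free energy, while the second term decreases the probability of samples generated by the model.

It is usually difficult to compute this gradient analytically, because it involves $\mathbb{E}_P\left[\partial F(x) / \partial \theta\right]$, an expectation over all possible configurations of the input x under the model distribution P. To make it tractable, the expectation is estimated with a fixed number of model samples, called negative particles and denoted $\mathcal{N}$. The gradient then becomes:

$$-\frac{\partial \log p(x)}{\partial \theta} \approx \frac{\partial F(x)}{\partial \theta} - \frac{1}{|\mathcal{N}|} \sum_{\tilde{x} \in \mathcal{N}} \frac{\partial F(\tilde{x})}{\partial \theta} \tag{5}$$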
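The difference between the exact gradient (4) and the sampled estimate (5) can be seen on a toy model. This sketch assumes a hypothetical EBM without hidden units, so F(x) = E(x) = -θx over the states {0, 1, 2, 3}. Because the state space is tiny, the negative particles are drawn exactly from p; in a real RBM they would come from Markov chain sampling:

```python
import math
import random

states = [0, 1, 2, 3]
theta = 0.5  # hypothetical parameter value


def free_energy_grad(x):
    """For the toy free energy F(x) = -theta * x, dF/dtheta = -x."""
    return -x


weights = [math.exp(theta * x) for x in states]  # exp(-F(x))
Z = sum(weights)
p = [w / Z for w in weights]


def exact_gradient(x):
    """Equation (4): positive phase minus the expectation under the model."""
    return free_energy_grad(x) - sum(pi * free_energy_grad(xt) for pi, xt in zip(p, states))


def sampled_gradient(x, n_particles, rng):
    """Equation (5): replace the expectation with an average over negative particles."""
    particles = rng.choices(states, weights=p, k=n_particles)
    return free_energy_grad(x) - sum(free_energy_grad(xt) for xt in particles) / n_particles


rng = random.Random(0)
x_train = 3
print(exact_gradient(x_train), sampled_gradient(x_train, 10_000, rng))
```

With more particles the estimate moves closer to the exact value, at a higher sampling cost.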

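A model whose energy is linear in its parameters and depends only on the observed variables is limited in the distributions it can represent. Adding hidden units increases the model's capacity, and the restricted Boltzmann machine does so while allowing no visible-visible or hidden-hidden connections. The RBM energy function is defined as:

$$E(v, h) = -b^\top v - c^\top h - v^\top W h \tag{6}$$

Here W is the matrix of weights connecting the visible and hidden layers (one row per visible unit and one column per hidden unit, so $W_{ji}$ links visible unit j to hidden unit i), b is the offset (bias) of the visible layer, and c is the offset of the hidden layer. Substituting this energy into the free energy of equation (3) gives:

$$F(v) = -b^\top v - \sum_i \log \sum_{h_i} e^{h_i \left(c_i + \sum_j W_{ji} v_j\right)}$$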
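Equation (6) translates directly into code. A minimal sketch, assuming `W[j][i]` links visible unit j to hidden unit i and using hypothetical parameter values; lower energy corresponds to a more probable configuration:

```python
def rbm_energy(v, h, W, b, c):
    """Equation (6): E(v, h) = -b.v - c.h - v^T W h, where W[j][i] links visible j to hidden i."""
    visible_term = sum(bj * vj for bj, vj in zip(b, v))
    hidden_term = sum(ci * hi for ci, hi in zip(c, h))
    interaction = sum(v[j] * W[j][i] * h[i] for j in range(len(v)) for i in range(len(h)))
    return -visible_term - hidden_term - interaction


# Hypothetical 2-visible, 2-hidden RBM.
W = [[1.0, -1.0],
     [0.5, 2.0]]
b = [0.1, -0.2]
c = [0.0, 0.3]
print(rbm_energy([1, 1], [0, 1], W, b, c))  # about -1.2
```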

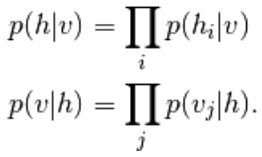

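Because there are no connections within a layer, the hidden units are conditionally independent given the visible units, and the visible units are conditionally independent given the hidden units:

$$p(h \mid v) = \prod_i p(h_i \mid v), \qquad p(v \mid h) = \prod_j p(v_j \mid h)$$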

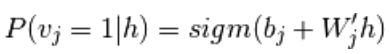

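For binary units ($v_j, h_i \in \{0, 1\}$), equations (6) and (2) yield a probabilistic version of the usual neuron activation function, where $\sigma(z) = 1 / (1 + e^{-z})$ is the logistic sigmoid:

$$P(h_i = 1 \mid v) = \sigma\left(c_i + \sum_j W_{ji} v_j\right) \tag{7}$$

$$P(v_j = 1 \mid h) = \sigma\left(b_j + \sum_i W_{ji} h_i\right) \tag{8}$$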
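Equations (7) and (8) can be checked against brute-force enumeration on a tiny RBM. This sketch assumes binary units, hypothetical parameter values, and `W[j][i]` linking visible unit j to hidden unit i; the brute-force function sums exp(-E(v, h)) over every hidden configuration, which is only feasible for a handful of hidden units:

```python
import itertools
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def rbm_energy(v, h, W, b, c):
    """Equation (6) with W[j][i] linking visible unit j to hidden unit i."""
    return (-sum(bj * vj for bj, vj in zip(b, v))
            - sum(ci * hi for ci, hi in zip(c, h))
            - sum(v[j] * W[j][i] * h[i] for j in range(len(v)) for i in range(len(h))))


def p_h_given_v(v, W, c):
    """Equation (7): P(h_i = 1 | v) = sigmoid(c_i + sum_j W[j][i] * v_j)."""
    return [sigmoid(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))) for i in range(len(c))]


def p_v_given_h(h, W, b):
    """Equation (8): P(v_j = 1 | h) = sigmoid(b_j + sum_i W[j][i] * h_i)."""
    return [sigmoid(b[j] + sum(W[j][i] * h[i] for i in range(len(h)))) for j in range(len(b))]


def brute_force_p_h_given_v(v, W, b, c):
    """P(h_i = 1 | v) obtained by enumerating every hidden configuration."""
    configs = list(itertools.product([0, 1], repeat=len(c)))
    weights = [math.exp(-rbm_energy(v, h, W, b, c)) for h in configs]
    total = sum(weights)
    return [sum(w for h, w in zip(configs, weights) if h[i] == 1) / total for i in range(len(c))]


# Hypothetical 3-visible, 2-hidden RBM.
W = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]
v = [1, 0, 1]
print(p_h_given_v(v, W, c))
print(brute_force_p_h_given_v(v, W, b, c))
```

The two printed lists agree because the joint factorizes over hidden units, which is exactly the conditional independence stated above.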

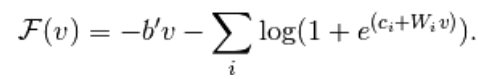

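For binary units, the free energy further simplifies to:

$$F(v) = -b^\top v - \sum_i \log\left(1 + e^{c_i + \sum_j W_{ji} v_j}\right) \tag{9}$$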
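A quick numerical check of equation (9): the closed form should match the definition in equation (3) evaluated by summing over all hidden vectors. This sketch assumes binary units, hypothetical parameters, and `W[j][i]` linking visible unit j to hidden unit i:

```python
import itertools
import math


def rbm_energy(v, h, W, b, c):
    """Equation (6) with W[j][i] linking visible unit j to hidden unit i."""
    return (-sum(bj * vj for bj, vj in zip(b, v))
            - sum(ci * hi for ci, hi in zip(c, h))
            - sum(v[j] * W[j][i] * h[i] for j in range(len(v)) for i in range(len(h))))


def free_energy(v, W, b, c):
    """Equation (9): closed form for binary hidden units."""
    visible_term = sum(bj * vj for bj, vj in zip(b, v))
    # log1p(exp(a)) = log(1 + e^a); fine for moderate a, overflows for very large a.
    hidden_term = sum(math.log1p(math.exp(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))))
                      for i in range(len(c)))
    return -visible_term - hidden_term


def brute_force_free_energy(v, W, b, c):
    """Equation (3) directly: -log of the sum of exp(-E(v, h)) over all 2**n_hidden vectors h."""
    total = sum(math.exp(-rbm_energy(v, h, W, b, c))
                for h in itertools.product([0, 1], repeat=len(c)))
    return -math.log(total)


# Hypothetical 3-visible, 2-hidden RBM.
W = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]
for v in itertools.product([0, 1], repeat=3):
    print(v, free_energy(v, W, b, c), brute_force_free_energy(v, W, b, c))
```

The closed form costs one term per hidden unit, whereas the brute-force sum grows as 2 to the number of hidden units.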

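Combining equations (5) and (9) gives the gradients for an RBM with binary units, where $\tilde{v}$ denotes samples drawn from the model (in practice, the expectations are approximated with the negative particles of equation (5)):

$$\begin{aligned}
-\frac{\partial \log p(v)}{\partial W_{ji}} &= \mathbb{E}_{\tilde{v}}\left[P(h_i = 1 \mid \tilde{v})\, \tilde{v}_j\right] - v_j\, \sigma\left(c_i + \sum_k W_{ki} v_k\right) \\
-\frac{\partial \log p(v)}{\partial c_i} &= \mathbb{E}_{\tilde{v}}\left[P(h_i = 1 \mid \tilde{v})\right] - \sigma\left(c_i + \sum_k W_{ki} v_k\right) \\
-\frac{\partial \log p(v)}{\partial b_j} &= \mathbb{E}_{\tilde{v}}\left[\tilde{v}_j\right] - v_j
\end{aligned} \tag{10}$$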
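The gradients in equation (10) can be sketched by differentiating the free energy (9) and applying the negative-particle average of equation (5). This sketch assumes binary units, `W[j][i]` linking visible unit j to hidden unit i, and hypothetical parameters and particles; in practice the particles would come from Gibbs sampling:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def free_energy(v, W, b, c):
    """Equation (9) for binary units."""
    visible_term = sum(bj * vj for bj, vj in zip(b, v))
    hidden_term = sum(math.log1p(math.exp(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))))
                      for i in range(len(c)))
    return -visible_term - hidden_term


def free_energy_grads(v, W, b, c):
    """Partial derivatives of F(v) with respect to W, b and c."""
    p_h = [sigmoid(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))) for i in range(len(c))]  # equation (7)
    dW = [[-v[j] * p_h[i] for i in range(len(c))] for j in range(len(v))]
    db = [-vj for vj in v]
    dc = [-p for p in p_h]
    return dW, db, dc


def nll_gradient(v, particles, W, b, c):
    """Equation (10) estimate: dF(v)/dtheta minus the mean of dF(v~)/dtheta over negative particles."""
    dW, db, dc = free_energy_grads(v, W, b, c)
    n = len(particles)
    for vt in particles:
        tW, tb, tc = free_energy_grads(vt, W, b, c)
        for j in range(len(b)):
            db[j] -= tb[j] / n
            for i in range(len(c)):
                dW[j][i] -= tW[j][i] / n
        for i in range(len(c)):
            dc[i] -= tc[i] / n
    return dW, db, dc


# Hypothetical 3-visible, 2-hidden RBM, one training example, two negative particles.
W = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]
v = [1, 0, 1]
particles = [[0, 1, 1], [1, 1, 0]]
dW, db, dc = nll_gradient(v, particles, W, b, c)
print(dW, db, dc)
```

A gradient-descent step subtracts a learning rate times these values from `W`, `b`, and `c`.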

Sampling in Restricted Boltzmann machine

Gibbs sampling of the joint distribution of N random variables $S = (S_1, \dots, S_N)$ is done through a sequence of N sampling sub-steps of the form $S_i \sim p(S_i \mid S_{-i})$, where $S_{-i}$ contains the $N - 1$ other random variables in S, excluding $S_i$.

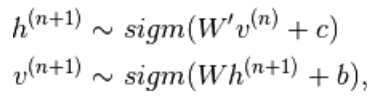

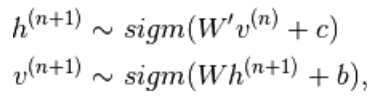

For an RBM, S consists of the visible and hidden units. Because the units in each layer are conditionally independent given the other layer, block Gibbs sampling can be performed: all visible units are sampled simultaneously given fixed values of the hidden units, and all hidden units are sampled simultaneously given the visible units. One step of the Markov chain is:

$$h^{(n+1)} \sim \sigma\left(W^\top v^{(n)} + c\right), \qquad v^{(n+1)} \sim \sigma\left(W h^{(n+1)} + b\right)$$

Here $h^{(n)}$ is the set of all hidden units at the n-th step of the chain. For example, $h_i^{(n+1)}$ is randomly chosen to be 1 (versus 0) with probability $\sigma\left(c_i + \sum_j W_{ji} v_j^{(n)}\right)$, and similarly, $v_j^{(n+1)}$ is randomly chosen to be 1 (versus 0) with probability $\sigma\left(b_j + \sum_i W_{ji} h_i^{(n+1)}\right)$.
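One block Gibbs step can be sketched in plain Python, assuming binary units, hypothetical parameters, and `W[j][i]` linking visible unit j to hidden unit i. The whole hidden layer is sampled at once from the visible layer, then the whole visible layer from the new hidden layer:

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sample_bernoulli(probs, rng):
    """Set each unit to 1 with its own probability, otherwise 0."""
    return [1 if rng.random() < p else 0 for p in probs]


def gibbs_step(v, W, b, c, rng):
    """One block Gibbs step: sample all hidden units given v, then all visible units given h."""
    p_h = [sigmoid(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))) for i in range(len(c))]
    h = sample_bernoulli(p_h, rng)
    p_v = [sigmoid(b[j] + sum(W[j][i] * h[i] for i in range(len(c)))) for j in range(len(b))]
    v_next = sample_bernoulli(p_v, rng)
    return v_next, h


rng = random.Random(0)
# Hypothetical 3-visible, 2-hidden RBM.
W = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]
v = [1, 0, 1]
chain = [v]
for _ in range(5):
    v, h = gibbs_step(v, W, b, c, rng)
    chain.append(v)
print(chain)
```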

Contrastive divergence

Contrastive divergence (CD-k) is used to speed up the sampling process in two ways.

Since we eventually want the model distribution $p(v)$ to be close to the true data distribution $p_{\text{train}}(v)$, the Markov chain is initialized with a training example. This starting point comes from a distribution that is expected to be close to p, so the chain is already close to converging to its final distribution p.

In addition, contrastive divergence does not wait for the chain to converge. Samples are obtained after only k steps of Gibbs sampling, and in practice k = 1 works surprisingly well.
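A minimal CD-1 sketch in plain Python, assuming binary units and `W[j][i]` linking visible unit j to hidden unit i. The chain starts at the training example and runs a single Gibbs step; as in equation (10), the hidden statistics use probabilities rather than sampled states. The toy data, learning rate, and epoch count are hypothetical:

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(v, W, c):
    return [sigmoid(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))) for i in range(len(c))]


def visible_probs(h, W, b):
    return [sigmoid(b[j] + sum(W[j][i] * h[i] for i in range(len(h)))) for j in range(len(b))]


def sample_bernoulli(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]


def cd1_update(v0, W, b, c, lr, rng):
    """One CD-1 update from training vector v0; W, b and c are modified in place."""
    ph0 = hidden_probs(v0, W, c)                         # the chain starts at the training example
    h0 = sample_bernoulli(ph0, rng)
    v1 = sample_bernoulli(visible_probs(h0, W, b), rng)  # k = 1 Gibbs step
    ph1 = hidden_probs(v1, W, c)
    for j in range(len(b)):
        for i in range(len(c)):
            W[j][i] += lr * (v0[j] * ph0[i] - v1[j] * ph1[i])
        b[j] += lr * (v0[j] - v1[j])
    for i in range(len(c)):
        c[i] += lr * (ph0[i] - ph1[i])
    return v1


rng = random.Random(0)
data = [[1, 1, 0, 0], [0, 0, 1, 1]]  # hypothetical training patterns
W = [[rng.gauss(0.0, 0.1) for _ in range(2)] for _ in range(4)]
b = [0.0] * 4
c = [0.0] * 2
for _ in range(200):
    for v0 in data:
        cd1_update(v0, W, b, c, lr=0.1, rng=rng)
# Mean-field reconstructions of the training patterns after training.
print([[round(p, 2) for p in visible_probs(hidden_probs(v, W, c), W, b)] for v in data])
```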

Persistent Contrastive divergence

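Persistent contrastive divergence (PCD) uses another approximation for sampling. It relies on a Markov chain with a persistent state: instead of restarting the chain at a training example for each update, it extracts new samples by simply running the existing chain for k more steps, and the chain's state is preserved for subsequent updates.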
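A PCD sketch under the same assumptions as CD-1 (binary units, `W[j][i]` linking visible unit j to hidden unit i, hypothetical data and hyperparameters). The only change is that the negative sample continues from the chain state returned by the previous update rather than starting from the training example:

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(v, W, c):
    return [sigmoid(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))) for i in range(len(c))]


def visible_probs(h, W, b):
    return [sigmoid(b[j] + sum(W[j][i] * h[i] for i in range(len(h)))) for j in range(len(b))]


def sample_bernoulli(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]


def pcd_update(v_data, chain_v, W, b, c, lr, k, rng):
    """One PCD update; W, b, c change in place and the new persistent chain state is returned."""
    for _ in range(k):  # continue the persistent chain for k more Gibbs steps
        h = sample_bernoulli(hidden_probs(chain_v, W, c), rng)
        chain_v = sample_bernoulli(visible_probs(h, W, b), rng)
    ph_data = hidden_probs(v_data, W, c)    # positive phase from the training example
    ph_model = hidden_probs(chain_v, W, c)  # negative phase from the persistent chain
    for j in range(len(b)):
        for i in range(len(c)):
            W[j][i] += lr * (v_data[j] * ph_data[i] - chain_v[j] * ph_model[i])
        b[j] += lr * (v_data[j] - chain_v[j])
    for i in range(len(c)):
        c[i] += lr * (ph_data[i] - ph_model[i])
    return chain_v  # preserved for the next update


rng = random.Random(0)
data = [[1, 1, 0, 0], [0, 0, 1, 1]]  # hypothetical training patterns
W = [[rng.gauss(0.0, 0.1) for _ in range(2)] for _ in range(4)]
b = [0.0] * 4
c = [0.0] * 2
chain = [rng.randint(0, 1) for _ in range(4)]  # persistent state, initialized once
for _ in range(200):
    for v_data in data:
        chain = pcd_update(v_data, chain, W, b, c, lr=0.05, k=1, rng=rng)
print(chain)
```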

Layers of Restricted Boltzmann machine

The restricted Boltzmann machine is a shallow, two-layer neural network, and RBMs are the building blocks of deep belief networks. The first layer is the visible layer, and the second is the hidden layer. Each unit is a neuron-like circle called a node. Every node in the hidden layer is connected to every node in the visible layer, but no two nodes of the same layer are connected; the term restricted refers to this absence of intralayer communication. Each node processes its input and makes a stochastic decision about whether to transmit that input.

Examples

An important concept behind the RBM is the probability distribution. Languages are distinctive in their letters and sounds, and the probability of each letter may be high or low. In English, the letters T, E, and A are widely used, whereas in Icelandic the most common letters are A and N. Trying to reconstruct Icelandic with weights learned from English would therefore lead to a large divergence between the two distributions.

The next example is images, where the probability distribution of pixel values differs for each kind of image. Consider an RBM fed only images of elephants and dogs, with two output nodes, one for each animal. On the forward pass, the RBM asks: given these pixels, should my weights send a stronger signal to the elephant node or the dog node? On the backward pass, it asks: given an elephant, which distribution of pixels should I expect? This is joint probability, expressed through the weights shared by the two layers. The activations produced by the nodes come to represent significant co-occurrences; for example, big floppy ears, a grey non-linear tube, and wrinkles together indicate an elephant. The RBM also has two kinds of bias: the hidden bias helps produce activations on the forward pass, and the visible bias helps learn reconstructions on the backward pass.

Advantages of Restricted Boltzmann machine

- The restricted Boltzmann machine is an applied algorithm used for classification, regression, topic modeling, collaborative filtering, and feature learning.

- RBMs have been used in neuroimaging, sparse image reconstruction in mine planning, and radar target recognition.

- RBMs can be combined with the SMOTE oversampling procedure to address imbalanced data.

- RBMs can fill in missing values with Gibbs sampling, which is applied to estimate the unknown values.

- RBMs can cope with noisy labels by learning from uncorrected label data and its reconstruction errors.

- The problem of unstructured data is addressed by using the RBM as a feature extractor that transforms the raw data into hidden units.
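The missing-value point can be illustrated with a minimal sketch: observed visible units are clamped to their known values while the missing ones are repeatedly resampled by block Gibbs sampling. The parameters below are hypothetical stand-ins for a trained RBM, `W[j][i]` is assumed to link visible unit j to hidden unit i, and `impute` is an illustrative name, not a library function:

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sample_bernoulli(probs, rng):
    """Set each unit to 1 with its own probability, otherwise 0."""
    return [1 if rng.random() < p else 0 for p in probs]


def impute(v, known, W, b, c, n_steps, rng):
    """Fill in unobserved visible units by block Gibbs sampling.

    known[j] is True when v[j] was observed; those units stay clamped to their values.
    """
    # Start the missing units from random binary values.
    v = [vj if kj else rng.randint(0, 1) for vj, kj in zip(v, known)]
    for _ in range(n_steps):
        p_h = [sigmoid(c[i] + sum(W[j][i] * v[j] for j in range(len(v)))) for i in range(len(c))]
        h = sample_bernoulli(p_h, rng)
        p_v = [sigmoid(b[j] + sum(W[j][i] * h[i] for i in range(len(c)))) for j in range(len(b))]
        proposal = sample_bernoulli(p_v, rng)
        # Keep observed values; accept sampled values only for the missing units.
        v = [vj if kj else pj for vj, kj, pj in zip(v, known, proposal)]
    return v


# Hypothetical parameters standing in for a trained RBM (3 visible, 2 hidden units).
W = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
b = [0.1, -0.2, 0.0]
c = [0.0, 0.3]
observed = [1, 0, 0]        # the value at index 1 is unknown; 0 is only a placeholder
known = [True, False, True]
print(impute(observed, known, W, b, c, n_steps=20, rng=random.Random(0)))
```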

Conclusion

Deep learning is a powerful approach to solving complex problems, but there is still room for improvement, and it can be complex to implement; free parameters must be configured with care. The ideas behind neural networks were once considered difficult, but today deep learning is a foundation of machine learning and artificial intelligence. The RBM offers a glimpse into the wider family of deep learning algorithms: it is a basic building block that grows into many popular architectures, which are used widely in large-scale industry.

This has been a guide to the restricted Boltzmann machine. Here we discussed its working, sampling, advantages, and layers.