Updated October 25, 2023

Introduction to Interleaved Memory

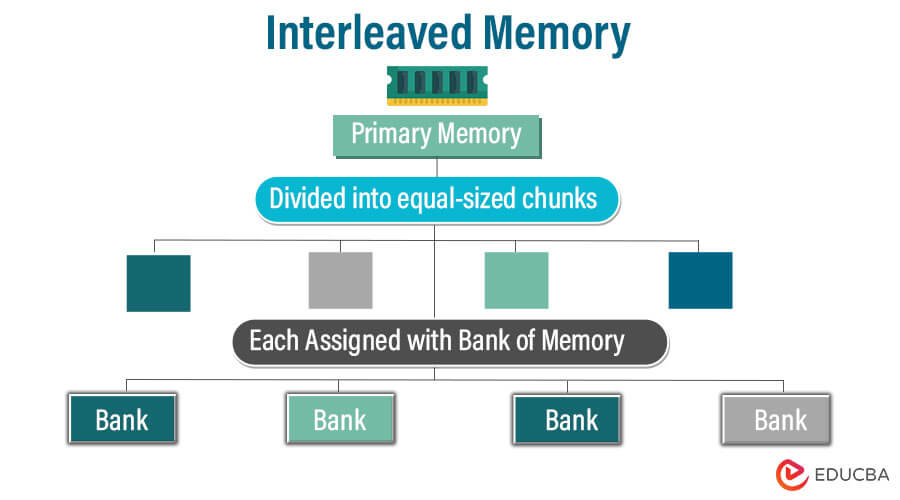

Interleaved memory is a memory organization technique that divides physical memory into equal-sized banks and spreads memory addresses across them, so that accesses to different banks can overlap in time.

In the context of DRAM (Dynamic Random Access Memory), banks are interleaved to enhance performance by reducing the time the processor waits between successive read and write operations. Interleaving provides better memory bandwidth because the processor can access data from different memory banks simultaneously.

Table of Contents

- Introduction

- Why is it used?

- Types

- How does it work?

- Example

- Advantages

- Challenges and Considerations

- Applications in Modern Computing

- Comparison with Other Memory Techniques

- Historical Development

Why is interleaved memory used?

Overcoming Single Module Limitations: Traditional memory systems rely on a single memory module to store and retrieve data, which can create a bottleneck due to limited data access speed. Interleaving divides memory into multiple banks, allowing simultaneous access to increase memory bandwidth and enhance data transfer rates.

Reducing Memory Latency: In non-interleaved memory systems, the CPU often waits for a memory module to complete its ongoing operation before accessing data. Interleaving mitigates this latency issue by enabling the CPU to access data from different memory modules simultaneously, reducing waiting times and improving overall system performance.

Types of Interleaved Memory

There are two main types:

1. High-Order Interleaving

High-order interleaving uses the most significant bits of the address to select the memory bank and the remaining bits to select the word within that bank. Each bank therefore holds one contiguous range of memory addresses.

Use case: High-order interleaving suits systems in which several processors or devices each work mostly within their own region of memory. Requests to different regions go to different banks and do not contend with one another. Because each module holds a contiguous address range, it is also straightforward to add a module or to isolate a faulty one.

Example: Consider a memory system with 8 memory banks. In high-order interleaving, the three most significant address bits select the bank. Addresses in the first eighth of the address space land in Bank 1, the next eighth in Bank 2, and so on, so consecutive addresses stay in the same bank. This does not speed up a single sequential stream, but it lets independent requesters work in different banks at the same time.
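As a rough illustration of the address mapping, the sketch below assumes the common textbook definition, in which the most significant address bits select the bank. The function name `high_order_decode`, the zero-based bank numbering, and the tiny system of 8 banks with 4 words each are assumptions made for the example.

```python
def high_order_decode(address: int, num_banks: int, words_per_bank: int) -> tuple[int, int]:
    """Map a word address to (bank, offset) under high-order interleaving."""
    if not 0 <= address < num_banks * words_per_bank:
        raise ValueError("address out of range")
    # The upper part of the address picks the bank; the remainder is the
    # offset of the word inside that bank.
    return divmod(address, words_per_bank)


# Hypothetical system: 8 banks of 4 words each (32 words in total).
mapping = [high_order_decode(a, num_banks=8, words_per_bank=4) for a in range(8)]
print(mapping)
# [(0, 0), (0, 1), (0, 2), (0, 3), (1, 0), (1, 1), (1, 2), (1, 3)]
```

The first four consecutive addresses all fall in bank 0, and the next four in bank 1, which is the behavior that distinguishes this scheme from low-order interleaving.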

2. Low-Order Interleaving

Low-order interleaving uses the least significant bits of the address to select the memory module, so consecutive words are placed in consecutive modules. A sequential run of addresses is therefore spread across all modules, and their accesses can overlap.

Use case: Low-order interleaving is suitable for systems in which sequential access dominates, because it distributes consecutive accesses efficiently across modules. It is often used in high-performance computing environments where parallel access to neighboring data is critical for system speed.

Example: Suppose you have the same memory system with 8 banks. In low-order interleaving, address 0 goes to the first bank (Bank 1), address 1 to the second bank (Bank 2), and so on until you’ve covered all 8 banks. Then the cycle repeats, with address 8 returning to Bank 1. This is a finer granularity than high-order interleaving: a burst of consecutive accesses keeps all banks busy, which makes it the usual choice when sequential throughput matters.
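The mapping can be sketched in a few lines, assuming the standard definition in which the bank is the address modulo the number of banks. The function name `low_order_decode` is hypothetical, and banks are numbered from 0 here rather than from 1.

```python
def low_order_decode(address: int, num_banks: int) -> tuple[int, int]:
    """Map a word address to (bank, offset) under low-order interleaving."""
    if address < 0:
        raise ValueError("address must be non-negative")
    # The low part of the address picks the bank; the quotient is the
    # position of the word inside that bank.
    offset, bank = divmod(address, num_banks)
    return bank, offset


# Banks touched by ten consecutive addresses in a hypothetical 8-bank system.
banks = [low_order_decode(a, num_banks=8)[0] for a in range(10)]
print(banks)
# [0, 1, 2, 3, 4, 5, 6, 7, 0, 1]
```

Each consecutive address lands in the next bank, and the sequence wraps around after the last bank.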

Comparison of High-Order Interleaving and Low-Order Interleaving

| Feature | High-Order Interleaving | Low-Order Interleaving |
| --- | --- | --- |
| Address mapping | The most significant bits of the address select the memory bank. | The least significant bits of the address select the memory bank. |
| Consecutive memory access | Consecutive memory addresses are mapped to the same memory module. | Consecutive memory addresses are mapped to different memory modules. |
| Block access | A block of consecutive addresses is served by one module, so its accesses cannot overlap. | A block of consecutive addresses is spread across modules, so its accesses can overlap. |
| Memory access latency | Sequential accesses wait for the same module to finish each operation. | Sequential accesses overlap across modules, hiding part of each module's cycle time. |
| Memory throughput | Higher only when independent requesters work in different address regions. | Higher for sequential and block transfers. |

How does Interleaved Memory Work?

Interleaved memory divides memory into multiple modules and spreads memory addresses evenly across them. This allows several memory accesses to be in progress at the same time, improving overall memory performance.

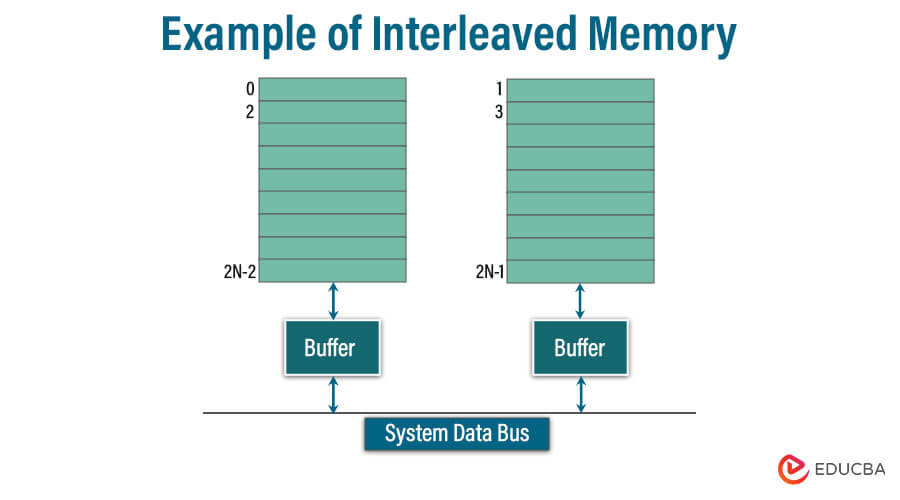

For example, suppose a system has two memory modules and needs to access memory address 0. The memory controller first looks at the address’s least significant bit (LSB). In this case, the LSB is 0, so the memory controller knows that address 0 is located in memory module 0.

The memory controller then sends a signal to memory module 0 to access address 0. While memory module 0 is servicing address 0, the memory controller can send a signal to memory module 1 to access address 1. The two accesses overlap, so the controller effectively works on two memory addresses at once.
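The effect of this overlap can be estimated with a toy timing model. The sketch below is not a description of any real controller: it assumes the controller issues at most one request per cycle, in order, that every access keeps its module busy for a fixed number of cycles, and that the low-order bits select the module. The name `total_cycles` and the 2-cycle busy time are made up for the example.

```python
def total_cycles(addresses, num_modules, busy_cycles):
    """Cycles needed to finish all accesses in a simple in-order model."""
    free_at = [0] * num_modules  # cycle at which each module becomes idle
    clock = 0                    # earliest cycle the next request can be issued
    finish = 0
    for address in addresses:
        module = address % num_modules        # low-order bits select the module
        start = max(clock, free_at[module])   # wait if that module is still busy
        free_at[module] = start + busy_cycles
        finish = max(finish, free_at[module])
        clock = start + 1                     # one request issued per cycle
    return finish


sequential = range(8)
print(total_cycles(sequential, num_modules=1, busy_cycles=2))  # 16
print(total_cycles(sequential, num_modules=2, busy_cycles=2))  # 9
```

With a single module, every access waits for the previous one to finish. With two modules, each access starts while the other module is still busy, so the same eight accesses complete in roughly half the time under these assumptions.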

Example of Interleaved Memory

Assume a computer system with 4 GB of memory (RAM) installed. This system uses a 4-way interleaved memory configuration. In such a configuration, addresses are distributed across four memory banks. Interleaving allows the memory system to service several accesses simultaneously, improving performance.

In this example, the memory is divided into four banks, each with 1 GB of memory. Part of the address selects the bank, and the rest selects the word within that bank. With low-order interleaving and four banks, the two lowest-order address bits (A0 and A1) select the bank, and the remaining higher-order bits (A2 and above) select the word within the bank.

For example, if the address bus contains the value 10 (binary 1010), the memory controller selects Bank 2 and reads the word at index 2 within that bank. The two lowest-order bits (A1 and A0) are 1 and 0, which is binary 10, or 2, so the access goes to Bank 2, counting banks from 0. The next two bits (A3 and A2) are also 1 and 0, giving word 2 within the bank.
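The decoding of address 10 can be checked with a few bit operations. This sketch assumes a 4-way, low-order interleaved layout in which the two least significant bits select the bank and banks are numbered from 0. The names `decode` and `encode` are hypothetical helpers, and the code relies on the number of banks being a power of two.

```python
NUM_BANKS = 4
BANK_BITS = NUM_BANKS.bit_length() - 1  # 2 bits are enough to name 4 banks
BANK_MASK = NUM_BANKS - 1               # 0b11, keeps only the bank bits


def decode(address):
    """Split an address into (bank, word index within the bank)."""
    bank = address & BANK_MASK   # lowest two bits
    word = address >> BANK_BITS  # everything above them
    return bank, word


def encode(bank, word):
    """Rebuild the address from a bank number and a word index."""
    return (word << BANK_BITS) | bank


print(format(10, "04b"), decode(10))  # 1010 (2, 2)
```

Address 10 decodes to bank 2, word 2, and `encode(2, 2)` returns 10 again, so the mapping is reversible.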

Advantages

- Higher Bandwidth: Interleaved memory can achieve higher bandwidth than non-interleaved memory, resulting in faster data transfers between the memory and the processor.

- Reduced Latency: In an interleaved memory system, the average wait for memory can be reduced, as the memory controller can simultaneously service requests from different memory banks.

- Better Cache Utilization: Interleaving can help the cache work more effectively. With the right cache block size, a whole cache line can be fetched from several banks in parallel, which shortens the time lost to each cache miss and improves overall performance.

- Increased Memory Capacity: Interleaving presents multiple memory modules to the system as a single address space, so a larger memory can be built from several modules.

- Support for Multithreading: On processors that run several threads at once, whether through simultaneous multithreading (SMT) or multiple cores, requests from different threads can be served by different banks at the same time, leading to better performance in multithreaded applications.

Challenges and Considerations

Challenges:

- Cache Management: Managing the cache is more challenging with interleaved memory because of possible cache-line conflicts.

- Hardware Design: Designing hardware capable of accessing and processing interleaved memory is challenging. It requires specialized circuitry to achieve this functionality.

- Access Times: The access time for a request depends on whether its target bank is idle or still busy with an earlier request, which can lead to performance inconsistencies.

Considerations:

- Trade-off: Interleaved memory can increase cache locality, potentially resulting in improved cache hit rates. However, the trade-off between increased cache locality and increased cache-line conflicts depends on the particular memory organization and access patterns.

- Access Patterns: If memory access patterns are irregular or change over time, interleaved memory may provide little to no performance benefit compared to non-interleaved memory. In such cases, the benefit may be outweighed by the added complexity of the memory hierarchy and the greater difficulty of cache invalidation.

- Design Flexibility: Incorporating interleaved memory into a system may limit design flexibility. For example, it may restrict the choice of memory organizations, which can influence system performance.
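One concrete way access patterns affect an interleaved system is through the stride between successive addresses. The sketch below assumes low-order interleaving across 4 banks and counts how many accesses each bank receives for a few strides. The helper name `bank_histogram` and the chosen strides are assumptions for illustration.

```python
from collections import Counter


def bank_histogram(start, stride, count, num_banks):
    """Count how many of `count` strided accesses land in each bank."""
    return Counter((start + i * stride) % num_banks for i in range(count))


for stride in (1, 2, 4):
    hist = bank_histogram(start=0, stride=stride, count=16, num_banks=4)
    print(stride, dict(sorted(hist.items())))
# 1 {0: 4, 1: 4, 2: 4, 3: 4}
# 2 {0: 8, 2: 8}
# 4 {0: 16}
```

A stride of 1 spreads the load evenly, a stride of 2 uses only half the banks, and a stride equal to the number of banks sends every access to the same bank, so the accesses queue up as they would in a non-interleaved memory.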

Applications in Modern Computing

1. CPU Cache Hierarchies

CPU cache hierarchies also use interleaving to enhance performance. Caches can be split into banks, or interleaved across different memory channels, so that several accesses are served at the same time. This reduces conflicts between simultaneous requests and helps keep the caches supplied with data.

2. Data Center Systems

Data center systems employ interleaved memory to build scalable, high-performance, and cost-effective systems. By interleaving memory across multiple channels, data center systems can achieve higher performance than non-interleaved memory systems built from the same modules.

3. Network Attached Storage (NAS) Systems

NAS systems, including RAID-based storage devices, also use interleaved memory to enhance performance. Spreading accesses across several memory channels lets the controller's caches and buffers be read and written in parallel, which can improve overall system performance.

4. Accelerators and Co-Processors

Interleaved memory can also be found in accelerators and co-processors, such as graphics processing units (GPUs) and field-programmable gate arrays (FPGAs). These systems often use large memory spaces to store data and programs, and interleaved memory can help optimize memory access and improve overall system performance.

5. Emerging Technologies

Interleaved memory is increasingly applied in demanding fields such as high-performance computing (HPC), big data analytics, and machine learning (ML). These computing environments often require significant amounts of memory to store and process large volumes of data, and interleaved memory can help to provide high-speed, efficient memory access.

Comparison with Other Memory Techniques

| Feature | Interleaved memory | Non-interleaved memory | Virtual memory | Cache memory |
| --- | --- | --- | --- | --- |
| Type of memory | Physical memory | Physical memory | Combination of physical and virtual memory | Physical memory |
| Implementation | Divides physical memory into multiple banks | Single physical memory bank | Uses a mapping table to translate virtual addresses into physical addresses | Uses a small, fast memory to store frequently accessed data |
| Performance | Improves memory throughput for contiguous memory accesses | Lower memory throughput | Improves performance for programs that are larger than physical memory | Improves performance for frequently accessed data |
| Cost | More expensive than non-interleaved memory | Less expensive than interleaved memory | More expensive than non-interleaved memory | More expensive than main memory |
| Complexity | More complex than non-interleaved memory | Less complex than interleaved memory | More complex than non-interleaved memory | More complex than main memory |
| Usage | Used in high-performance systems | Used in general-purpose systems | Used in general-purpose systems | Used in all types of systems |

Historical Development

| Decade | Development |
| --- | --- |
| 1960s | Early interleaved memory systems were developed using magnetic core memory. |
| 1970s | The first practical implementations of interleaved memory were introduced in mainframe computers. |
| 1980s | Interleaved memory became a common feature in high-performance workstations and servers. |
| 1990s | Gaming systems, including the Super Nintendo Entertainment System (SNES), utilized interleaved memory to enhance gaming experiences. |
| 2000s | Interleaved memory continued to be a fundamental component of various computing environments, including data centers. |
| 2010s | Development and optimization of interleaved memory continued in order to address the increasing memory demands of modern applications and systems. |

Conclusion

Interleaved memory enhances system performance by distributing addresses across modules so that accesses can overlap and contention is reduced. Its history dates back to the 1960s, and it is broadly adopted in modern systems. While beneficial, it adds hardware complexity and must be integrated carefully with other memory techniques, such as caching and virtual memory, to deliver efficient data access.
