Updated March 20, 2023

What is the XGBoost Algorithm?

XGBoost, short for Extreme Gradient Boosting, is a machine learning library that implements gradient-boosted decision trees. Why decision trees? For unstructured data such as images and free text, artificial neural networks (ANNs) tend to give the best predictions. For structured or semi-structured data, however, decision-tree-based models are currently considered among the best. XGBoost was designed to improve the speed and performance of machine learning models, and it serves that purpose very well.

Working of XGBoost Algorithm

XGBoost includes both a tree learning algorithm and a linear model solver, and it can run computations in parallel on a single machine. This parallelism is a large part of why it is often reported to be around 10 times faster than earlier gradient boosting implementations.

Both XGBoost and GBMs (Gradient Boosting Machines) are tree-based ensemble methods that use a gradient descent architecture. Where XGBoost leaves other GBMs behind is in system optimization and algorithmic enhancements.

Let us see those in detail:

1. System Optimization

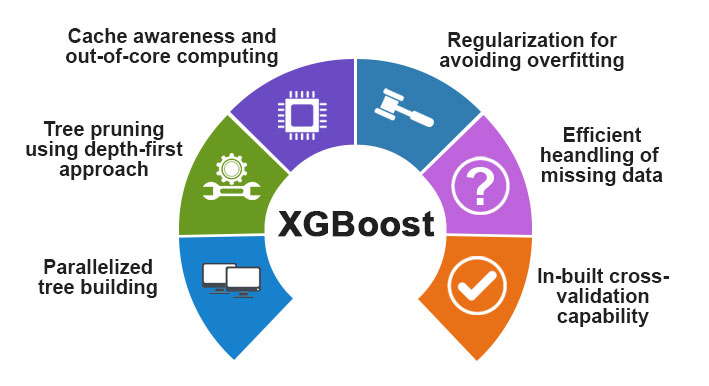

- Tree Pruning – In a GBM, the stopping criterion for tree splitting is greedy and depends on the negative loss criterion at the point of the split. XGBoost instead grows each tree up to the maximum depth parameter (max_depth) and then prunes it backward. This depth-first approach improves computational performance.

- Parallelization – Boosting builds trees sequentially, but XGBoost parallelizes the work of building each tree. This is possible because the two loops used to build a tree are interchangeable: the outer loop enumerates the leaf nodes of a tree, while the inner loop calculates the features. In the naive nesting, the outer loop cannot start until the inner loop has completed, which limits parallelism. Switching the order of the loops removes that bottleneck and improves the performance of the algorithm.

- Hardware Optimization – XGBoost was also designed to use hardware resources efficiently. Each thread is allocated internal buffers to store gradient statistics.
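To make the parallelization idea concrete, here is a minimal sketch of scanning the features of one tree node in parallel. It is not XGBoost's actual code: the function names, the squared-error criterion, and the use of Python threads are all assumptions chosen for illustration, and Python threads show the structure of the work rather than a real speedup.

```python
from concurrent.futures import ThreadPoolExecutor

def best_split_for_feature(rows, targets, feature):
    """Scan one feature in sorted order; return (sse, feature, threshold)."""
    order = sorted(range(len(rows)), key=lambda i: rows[i][feature])
    n = len(targets)
    total = sum(targets)
    total_sq = sum(t * t for t in targets)
    best = (float("inf"), feature, None)
    left_sum = left_sq = 0.0
    for pos in range(n - 1):
        i, nxt = order[pos], order[pos + 1]
        left_sum += targets[i]
        left_sq += targets[i] ** 2
        if rows[i][feature] == rows[nxt][feature]:
            continue  # cannot split between two equal values
        n_left, n_right = pos + 1, n - pos - 1
        right_sum, right_sq = total - left_sum, total_sq - left_sq
        # Sum of squared errors of both children around their own means.
        sse = (left_sq - left_sum ** 2 / n_left) + (right_sq - right_sum ** 2 / n_right)
        threshold = (rows[i][feature] + rows[nxt][feature]) / 2
        best = min(best, (sse, feature, threshold), key=lambda c: c[0])
    return best

def best_split(rows, targets, workers=4):
    """Evaluate every feature independently, then keep the best candidate."""
    features = range(len(rows[0]))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        candidates = list(
            pool.map(lambda f: best_split_for_feature(rows, targets, f), features)
        )
    return min(candidates, key=lambda c: c[0])

# Hypothetical toy data: feature 0 separates the targets cleanly.
rows = [[1.0, 5.0], [2.0, 3.0], [3.0, 8.0], [4.0, 1.0]]
targets = [1.0, 1.0, 5.0, 5.0]
sse, feature, threshold = best_split(rows, targets)
print(feature, threshold)
```

Each feature can be scanned without waiting on the others, which is why this part of tree building parallelizes well even though the trees themselves are built one after another.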

2. Algorithmic Enhancements

- Awareness of Sparsity – XGBoost handles different types of sparsity patterns efficiently. It learns the best way to handle missing values from the training loss.

- Regularization – To prevent overfitting, XGBoost penalizes more complex models using both LASSO (L1) and Ridge (L2) regularization.

- Cross-Validation – XGBoost has a built-in cross-validation method that is run at each iteration during model creation. This removes the need to specify the exact number of boosting iterations in advance.

- Distributed Weighted Quantile Sketch – XGBoost uses the distributed weighted quantile sketch algorithm to find good candidate split points efficiently in weighted datasets.
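The regularization and sparsity points above can be sketched in a few lines. The leaf weight and split gain follow the regularized objective described in the XGBoost paper, where g and h are the sums of first and second derivatives of the loss in a node. Handling the L1 term by soft-thresholding, and all function names here, are assumptions of this sketch rather than a copy of the library's code.

```python
def soft_threshold(g, alpha):
    """L1 shrinkage: pull the gradient sum toward zero by alpha."""
    if g > alpha:
        return g - alpha
    if g < -alpha:
        return g + alpha
    return 0.0

def leaf_weight(g, h, reg_lambda=1.0, alpha=0.0):
    """Optimal leaf value; a larger reg_lambda (L2) shrinks it toward zero."""
    return -soft_threshold(g, alpha) / (h + reg_lambda)

def leaf_score(g, h, reg_lambda=1.0, alpha=0.0):
    """How much a leaf with these statistics reduces the objective."""
    return soft_threshold(g, alpha) ** 2 / (h + reg_lambda)

def split_gain(left, right, reg_lambda=1.0, alpha=0.0, gamma=0.0):
    """Gain of splitting a node into left/right; gamma is a per-split penalty."""
    (gl, hl), (gr, hr) = left, right
    parent = leaf_score(gl + gr, hl + hr, reg_lambda, alpha)
    children = leaf_score(gl, hl, reg_lambda, alpha) + leaf_score(gr, hr, reg_lambda, alpha)
    return 0.5 * (children - parent) - gamma

def default_direction(left, right, missing, **reg):
    """Try sending rows with a missing value left, then right; keep the better gain."""
    (gl, hl), (gr, hr), (gm, hm) = left, right, missing
    gain_left = split_gain((gl + gm, hl + hm), (gr, hr), **reg)
    gain_right = split_gain((gl, hl), (gr + gm, hr + hm), **reg)
    return ("left", gain_left) if gain_left >= gain_right else ("right", gain_right)

# Hypothetical (gradient sum, hessian sum) pairs for one candidate split.
left, right, missing = (-4.0, 2.0), (6.0, 3.0), (-2.0, 1.0)
direction, gain = default_direction(left, right, missing)
print(direction, gain)
```

In this toy case the rows with missing values have gradients that resemble the left child, so sending them left gives the larger gain. A split whose gain falls below zero once gamma is subtracted is a natural candidate for backward pruning.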

Features of XGBoost

Although XGBoost was designed primarily to improve the speed and performance of machine learning models, it also offers a good number of advanced features.

Model Features:

XGBoost supports the model features found in the scikit-learn and R implementations of gradient boosting, with additions such as regularization.

The main gradient boosting methods that are supported are:

Two of XGBoost's main strengths, execution speed and model performance, are covered below:

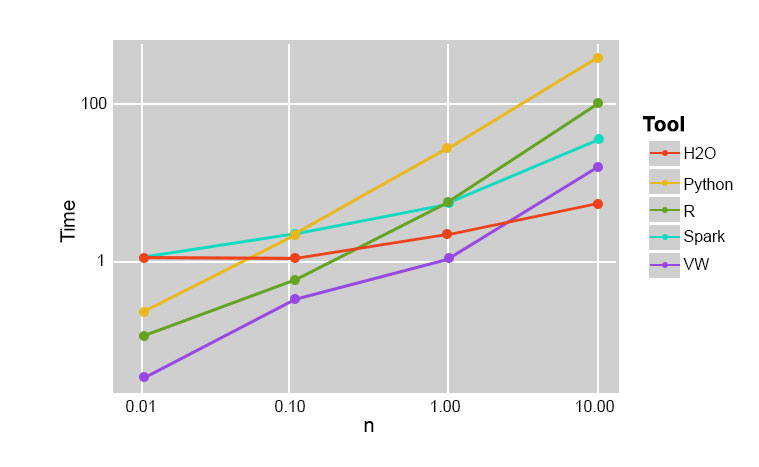

1. Execution Speed

Compared with other gradient boosting implementations, XGBoost is very fast, and it is often reported to be roughly 10 times faster.

Szilard Pafka ran a set of experiments to compare the execution speed of different random forest implementations.

Below is a snapshot of the results of the experiment:

It turned out that XGBoost was the fastest.

2. Model Performance

As noted earlier, artificial neural networks tend to give the best predictions on unstructured data such as images and free text. For structured or semi-structured data, decision-tree-based models are currently among the best, and XGBoost is widely regarded as one of the strongest boosting implementations for this kind of data.

Algorithm used by XGBoost

- XGBoost uses the gradient boosting decision tree algorithm.

- Gradient boosting creates new models that predict the errors (residuals) of the prior models. The predictions of all the models are then added together to make the final prediction.
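The residual-fitting idea can be sketched in plain Python. This is a minimal illustration, assuming squared-error loss, a single input feature, and one-split trees (stumps) as the weak learners. The function names and the toy data are hypothetical, and none of XGBoost's optimizations are included.

```python
def fit_stump(xs, residuals):
    """Find the single threshold that best fits the residuals."""
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = sum((r - left_mean) ** 2 for r in left)
        error += sum((r - right_mean) ** 2 for r in right)
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean

def fit_boosted(xs, ys, n_rounds=20, learning_rate=0.5):
    """Each round fits a stump to the residuals of the ensemble built so far."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []
    predictions = [base] * len(xs)
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for x, p in zip(xs, predictions)]
    # The final prediction is the sum of all the models' contributions.
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)

def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical toy data with three rough levels.
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 3.0, 3.2, 6.0, 6.1]
mean_y = sum(ys) / len(ys)
base_mse = mse(lambda x: mean_y, xs, ys)
model = fit_boosted(xs, ys)
final_mse = mse(model, xs, ys)
print(base_mse, final_mse)
```

Every stump only has to correct what the earlier stumps got wrong, so the training error drops round by round. The learning rate scales each correction so that no single model dominates the sum.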

Conclusion

In this article, we looked at the XGBoost algorithm used in machine learning. We covered how the algorithm works, its main features, and why it is a strong choice for implementing gradient-boosted decision trees.
