Updated April 7, 2023

Introduction to PyTorch Loss

PyTorch provides many built-in functions, and loss functions are among them. In deep learning, a model's predictions do not always match the expected outcomes, so we need a way to measure the gap between the two. A loss function does exactly that: it quantifies the difference between the predicted values and the provided target values. In other words, the loss tells us how far the model is from the expected result, and it acts as the penalty the model pays for its errors.

What is PyTorch loss?

Loss functions measure the error between the predicted output and the given target value. A loss function tells us how far the model is from producing the expected result. The word ‘loss’ signifies the penalty that the model receives for failing to produce the desired results.

A loss function, or cost function, is a function that maps an event or the values of one or more variables onto a real number that intuitively represents some “cost” associated with the event. An optimization problem seeks to minimize a loss function. An objective function is either a loss function or its negative (in specific domains variously called a reward function, a profit function, a utility function, a fitness function, and so on), in which case it is to be maximized.

Loss functions fall into three broad categories.

1. Regression loss function: It is used when we need to predict a continuous value, for example, a person's age.

2. Classification loss function: It is used when we need to predict which of several discrete classes an input belongs to, for example, the category of an email.

3. Ranking loss function: It is used when we need to calculate the relative distance between inputs, for example, ranking products according to their relevance.
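The three categories above can be sketched with one built-in PyTorch criterion each. This is only an illustration: the ages, scores, and the spam/not-spam reading of the email example are made-up assumptions, and other criteria could be chosen for each category.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # fixed seed so the random scores are repeatable

# Regression: predict a continuous value (hypothetical ages in years).
predicted_age = torch.tensor([31.0, 45.5, 22.0])
actual_age = torch.tensor([30.0, 50.0, 22.0])
regression_loss = nn.MSELoss()(predicted_age, actual_age)

# Classification: predict a class per input (assumed: 0 = not spam, 1 = spam).
email_scores = torch.randn(3, 2)          # 3 emails, 2 classes, raw scores
email_labels = torch.tensor([0, 1, 1])    # correct class index per email
classification_loss = nn.CrossEntropyLoss()(email_scores, email_labels)

# Ranking: compare two scores and state which should rank higher.
score_a = torch.tensor([0.9, 0.2])
score_b = torch.tensor([0.4, 0.7])
a_should_win = torch.tensor([1.0, 1.0])   # 1 means "a should rank above b"
ranking_loss = nn.MarginRankingLoss()(score_a, score_b, a_should_win)

print('regression loss:    ', regression_loss.item())
print('classification loss:', classification_loss.item())
print('ranking loss:       ', ranking_loss.item())
```

Each criterion returns a single scalar tensor, so the three can be used in the same way during training even though they expect differently shaped inputs.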

Two parameters of a PyTorch loss

A loss function takes the following two parameters.

1. Predicted Result

2. Final Value (Target)

Explanation

These functions measure your model's performance by comparing its predicted output with the expected output. If the deviation between the predicted result and the final value is very large, the loss will be very high.

If the deviation is small or the values are nearly identical, the loss will be very low. You therefore need to choose a loss function that penalizes the model appropriately when it is trained on the given dataset.

The right loss function depends on the problem statement that your algorithm is trying to solve.
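The relationship between deviation and loss can be checked with a small sketch. The tensors below are made-up values chosen for illustration, and nn.L1Loss is used only as one possible criterion.

```python
import torch
import torch.nn as nn

loss_f = nn.L1Loss()
target = torch.tensor([1.0, 2.0, 3.0])             # final values (targets)

close_prediction = torch.tensor([1.1, 1.9, 3.0])   # nearly identical to the target
far_prediction = torch.tensor([5.0, -2.0, 9.0])    # large deviation from the target

low_loss = loss_f(close_prediction, target)
high_loss = loss_f(far_prediction, target)

print('loss for close prediction:', low_loss.item())
print('loss for far prediction:  ', high_loss.item())
```

The first argument is the predicted result and the second is the target, matching the two parameters listed above.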

How to add PyTorch loss?

Now let's see how to add a loss function, with an example. First, import the required packages.

import torch

import torch.nn as nn

After that, we need to define the loss function as follows.

loss = nn.<loss function name>()

Explanation

Use the syntax above, with the name of a concrete loss class such as L1Loss or MSELoss in place of the function name, to add a loss function to the model and measure the gap between the predicted outcomes and the target outcomes.

import torch

import torch.nn as nn

data_input = torch.randn(4, 3, requires_grad=True)

t_value = torch.randn(4, 3)

loss_f = nn.L1Loss()

result = loss_f(data_input, t_value)

result.backward()

print('input values: ', data_input)

print('target values: ', t_value)

print('Final Outcomes: ', result)Explanation

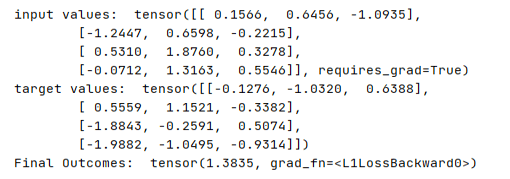

In the above example, we try to implement the loss function in PyTorch as shown. The final result of the above program we illustrated by using the following screenshot as follows.
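In practice the loss is usually computed on a model's output and then used to update the model. The following is a minimal sketch of that pattern, assuming a hypothetical one-layer model, made-up data, and plain SGD; none of these choices come from the example above.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)  # repeatable random data and initial weights

model = nn.Linear(3, 1)                        # hypothetical one-layer model
loss_f = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.05)

inputs = torch.randn(8, 3)                     # made-up features
targets = inputs.sum(dim=1, keepdim=True)      # made-up rule for the model to learn

loss_before = loss_f(model(inputs), targets).item()

for _ in range(100):
    optimizer.zero_grad()                      # clear gradients from the last step
    loss = loss_f(model(inputs), targets)      # predicted result vs. target
    loss.backward()                            # compute gradients of the loss
    optimizer.step()                           # update the model parameters

loss_after = loss_f(model(inputs), targets).item()

print('loss before training:', loss_before)
print('loss after training: ', loss_after)
```

The loss value itself does not change the model. The call to backward() computes gradients, and the optimizer uses them to reduce the loss.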

Types of loss functions in PyTorch

Now let's look at the different types of loss functions in PyTorch, with examples.

1. Mean Absolute Error (L1 Loss Function): Also called the L1 loss function, it calculates the average of the absolute differences between the predicted outcomes and the actual outcomes. It measures the size of the error without regard to its direction. The L1 expression for a single element is shown below.

Loss (A, B) = |A – B|

Where,

A is used to represent the actual outcome and B is used to represent the predicted outcome.

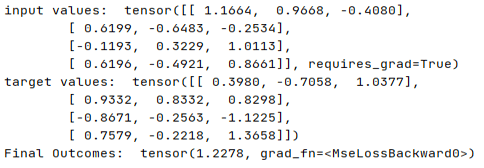
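To connect the expression to the built-in criterion, the sketch below computes the L1 loss by hand and compares it with nn.L1Loss. The values of A and B are made up for illustration.

```python
import torch
import torch.nn as nn

actual = torch.tensor([2.0, 4.0, 6.0])       # A: actual outcomes
predicted = torch.tensor([2.5, 3.0, 8.0])    # B: predicted outcomes

# Per-element |A - B|, then the average over all elements.
manual_l1 = (actual - predicted).abs().mean()

# nn.L1Loss takes (input, target), that is (predicted, actual).
builtin_l1 = nn.L1Loss()(predicted, actual)

print('manual: ', manual_l1.item())
print('builtin:', builtin_l1.item())
```

The absolute differences are 0.5, 1.0 and 2.0, so both values should come out close to 3.5 / 3.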

2. Mean Squared Error Loss Function: Also called the L2 loss function, it calculates the average of the squared differences between the predicted outcomes and the actual outcomes. The result of this function is never negative. The L2 expression for a single element is shown below.

Loss (A, B) = (A – B)²

Where,

A is used to represent the actual outcome and B is used to represent the predicted outcome.

Example

import torch

import torch.nn as nn

data_input = torch.randn(4, 3, requires_grad=True)  # stands in for model predictions

t_value = torch.randn(4, 3)  # target values, same shape as the input

loss_f = nn.MSELoss()  # mean squared error criterion

result = loss_f(data_input, t_value)  # scalar loss

result.backward()  # gradients of the loss with respect to data_input

print('input values: ', data_input)

print('target values: ', t_value)

print('Final Outcomes: ', result)

Explanation

The output of the above program is shown in the following screenshot.

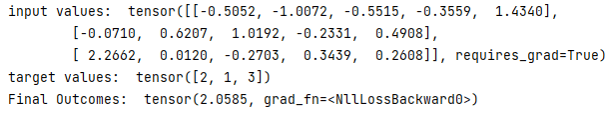
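The expression above describes a single element, while the criterion returns an average by default. A short sketch, using made-up values, shows how the reduction argument of nn.MSELoss moves between the two views.

```python
import torch
import torch.nn as nn

actual = torch.tensor([2.0, 4.0, 6.0])       # A: actual outcomes
predicted = torch.tensor([2.5, 3.0, 8.0])    # B: predicted outcomes

# reduction='none' keeps one (A - B)^2 value per element.
per_element = nn.MSELoss(reduction='none')(predicted, actual)

# reduction='sum' adds the squared differences together.
total = nn.MSELoss(reduction='sum')(predicted, actual)

# reduction='mean' is the default and averages them.
mean = nn.MSELoss(reduction='mean')(predicted, actual)

print('per element:', per_element)
print('sum: ', total.item())
print('mean:', mean.item())
```

The same reduction argument is accepted by nn.L1Loss and the other criteria discussed in this article.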

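3. Negative Log-Likelihood Loss Function: It is used for classification. NLLLoss expects log-probabilities as input, which are usually obtained by applying LogSoftmax (the logarithm of the normalized exponential, or Softmax, function) to the raw output of the model. The loss is low when the model assigns a high probability to the correct class and high when it assigns a low one.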

Example

import torch

import torch.nn as nn

data_input = torch.randn(3, 5, requires_grad=True)  # raw scores: 3 samples, 5 classes

t_value = torch.tensor([2, 1, 3])  # correct class index for each sample

loss_f = nn.LogSoftmax(dim=1)  # converts raw scores to log-probabilities

nll_f = nn.NLLLoss()  # negative log-likelihood criterion

result = nll_f(loss_f(data_input), t_value)  # scalar loss

result.backward()  # gradients of the loss with respect to data_input

print('input values: ', data_input)

print('target values: ', t_value)

print('Final Outcomes: ', result)

Explanation

The program prints the input values, the target values, and the resulting loss.
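What NLLLoss does with the log-probabilities can be reproduced by hand. The sketch below, which uses random scores with a fixed seed, picks the log-probability of the correct class for each sample, negates it, and averages.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)                    # repeatable random scores

scores = torch.randn(3, 5)              # raw scores: 3 samples, 5 classes
targets = torch.tensor([2, 1, 3])       # correct class index for each sample

log_probs = nn.LogSoftmax(dim=1)(scores)

# Select each sample's log-probability for its correct class.
picked = log_probs[torch.arange(3), targets]
manual_nll = -picked.mean()

builtin_nll = nn.NLLLoss()(log_probs, targets)

print('manual: ', manual_nll.item())
print('builtin:', builtin_nll.item())
```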

4. Cross-Entropy Loss Function: It measures the difference between two probability distributions over the same random variable: the actual distribution and the predicted one. The expression of this function is as follows.

Loss (A, B) = - ∑ A log B

Where,

A is used to represent the actual outcome and B is used to represent the predicted outcome.
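As a sketch of how this expression relates to PyTorch, the example below evaluates - ∑ A log B directly, with A as a one-hot encoding of the correct class and B as the Softmax of the raw scores, and compares it with nn.CrossEntropyLoss. The scores are random and the one-hot encoding is an assumption made for the illustration. It also shows that nn.CrossEntropyLoss on raw scores matches LogSoftmax followed by NLLLoss.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)                                # repeatable random scores

scores = torch.randn(3, 5, requires_grad=True)      # raw scores: 3 samples, 5 classes
targets = torch.tensor([2, 1, 3])                   # correct class index per sample

# Built-in criterion: takes raw scores, not probabilities.
ce = nn.CrossEntropyLoss()(scores, targets)

# Same value in two steps: LogSoftmax, then NLLLoss.
two_step = nn.NLLLoss()(nn.LogSoftmax(dim=1)(scores), targets)

# Same value from the expression: A is one-hot, B is the predicted distribution.
A = F.one_hot(targets, num_classes=5).float()
B = F.softmax(scores, dim=1)
manual = -(A * B.log()).sum(dim=1).mean()

ce.backward()                                       # gradients with respect to scores

print('CrossEntropyLoss:    ', ce.item())
print('LogSoftmax + NLLLoss:', two_step.item())
print('manual expression:   ', manual.item())
```

Because nn.CrossEntropyLoss applies the log-softmax step internally, the model should pass it raw scores rather than probabilities.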

5. Hinge Embedding Loss Function: It calculates the loss between an input tensor and a tensor of labels whose values are 1 or -1. It is typically used to measure whether two inputs are similar or dissimilar, and the loss grows as the predicted outcome moves further from the actual outcome.
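A minimal sketch of the hinge embedding loss follows, assuming the input holds made-up distances between pairs of items. For a label of 1 the loss is the distance itself, and for a label of -1 it is max(0, margin - distance).

```python
import torch
import torch.nn as nn

distances = torch.tensor([0.2, 1.5, 0.4])    # made-up pair distances
labels = torch.tensor([1.0, -1.0, -1.0])     # 1 = similar pair, -1 = dissimilar pair

loss_f = nn.HingeEmbeddingLoss(margin=1.0)
result = loss_f(distances, labels)

# The same rule written out by hand, then averaged.
per_pair = torch.where(labels == 1, distances, torch.clamp(1.0 - distances, min=0))
manual = per_pair.mean()

print('per pair:', per_pair)
print('builtin: ', result.item())
print('manual:  ', manual.item())
```

The second pair is dissimilar and already further apart than the margin, so it contributes no loss. The third pair is dissimilar but too close, so it is penalized.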

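6. Margin Ranking Loss Function: It takes two inputs and a label of 1 or -1 that states which input should be ranked higher, and it penalizes the model when the inputs are not ranked in that order by at least a margin.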
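The margin ranking rule can be sketched as follows, using made-up scores. For each pair the loss is max(0, -y * (x1 - x2) + margin), where y = 1 means the first input should rank higher.

```python
import torch
import torch.nn as nn

x1 = torch.tensor([0.9, 0.2, 0.5])     # scores for the first item of each pair
x2 = torch.tensor([0.4, 0.7, 0.5])     # scores for the second item of each pair
y = torch.tensor([1.0, 1.0, -1.0])     # 1: x1 should rank higher, -1: x2 should

loss_f = nn.MarginRankingLoss(margin=0.1)
result = loss_f(x1, x2, y)

# The same rule written out by hand, then averaged.
per_pair = torch.clamp(-y * (x1 - x2) + 0.1, min=0)
manual = per_pair.mean()

print('per pair:', per_pair)
print('builtin: ', result.item())
print('manual:  ', manual.item())
```

The first pair is already in the right order by more than the margin and costs nothing. The second is in the wrong order, and the third is tied, so both are penalized.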

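7. Triplet Margin Loss Function: It calculates the triplet loss from three inputs: an anchor, a positive example, and a negative example. The loss is low when the anchor is closer to the positive example than to the negative example by at least a margin.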
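A small sketch of the triplet margin loss is shown below, using made-up two-dimensional points. The loss for one triplet is max(0, d(anchor, positive) - d(anchor, negative) + margin), where d is the Euclidean distance when p=2.

```python
import torch
import torch.nn as nn

anchor = torch.tensor([[0.0, 0.0]])
positive = torch.tensor([[0.0, 1.0]])    # about 1 unit from the anchor
negative = torch.tensor([[3.0, 0.0]])    # about 3 units from the anchor

loss_f = nn.TripletMarginLoss(margin=1.0, p=2)

# Positive is much closer than negative, so the margin is already satisfied.
good_loss = loss_f(anchor, positive, negative)

# Swapping the roles puts the "positive" far away, so the loss is large.
bad_loss = loss_f(anchor, negative, positive)

print('well-separated triplet:', good_loss.item())
print('swapped triplet:       ', bad_loss.item())
```

PyTorch adds a tiny epsilon when computing the distances, so hand-calculated values match only approximately.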

Conclusion

We hope this article has helped you learn more about PyTorch loss. We covered the essential idea of a PyTorch loss function, looked at the expressions and examples of several loss functions, and saw how and when to use them.
