Updated June 1, 2023

Definition of PyTorch

PyTorch is an open-source machine learning library for Python, based on the Torch library. It was developed by Facebook’s AI Research lab and first released in 2016 as a free and open-source library, used mainly in computer vision, deep learning, and natural language processing applications. Programmers can quickly build complex neural networks with PyTorch because its core data structure, the Tensor, is a multi-dimensional array similar to a NumPy array. PyTorch use is growing in both industry and the research community because it is flexible, fast, and easy to get a project up and running with, which has made it one of the top deep learning tools.
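To make the comparison with NumPy concrete, here is a minimal sketch of creating a tensor, doing element-wise arithmetic, and converting to and from a NumPy array. The variable names are illustrative only, and the example assumes both `torch` and `numpy` are installed.

```python
import numpy as np
import torch

# Build a 2x3 tensor from a nested Python list.
t = torch.tensor([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])

# Element-wise arithmetic and reductions read much like NumPy.
doubled = t * 2
col_sums = t.sum(dim=0)  # reduce over rows: one value per column

# Convert to NumPy and back (for CPU tensors the memory is shared).
arr = t.numpy()
back = torch.from_numpy(np.ones((2, 3), dtype=np.float32))

print(t.shape)   # torch.Size([2, 3])
print(col_sums)  # tensor([5., 7., 9.])
```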

Why do we Need PyTorch?

The PyTorch framework is often seen as the future of deep learning frameworks. Many deep learning frameworks have been introduced; the most widely preferred are TensorFlow and PyTorch. PyTorch stands out for its flexibility and computational power. For machine learning and artificial intelligence enthusiasts, PyTorch is easy to learn and useful for building models.

Here are some of the reasons why developers and researchers learn PyTorch:

1. Easy to Learn

The developer community has documented PyTorch thoroughly and continues to improve it. Its structure is similar to traditional programming, which makes it easy to learn for programmers and non-programmers alike.

2. Developers’ Productivity

It has a Python interface with a set of powerful APIs and runs on Windows and Linux. With some programming knowledge, developers can improve their productivity, since many routine tasks in PyTorch can be automated.

3. Easy to Debug

It can be debugged with standard Python tools such as pdb and ipdb. Because PyTorch builds its computational graph at runtime, programmers can also use a Python IDE such as PyCharm for debugging.
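As a rough illustration of why a runtime graph is easy to debug, the sketch below uses ordinary Python control flow inside a forward computation and marks where a standard breakpoint could be placed. The `forward` function and its inputs are hypothetical, chosen only for this example.

```python
import torch

def forward(x, w):
    h = x @ w
    # Ordinary Python control flow decides the graph on every call.
    if h.sum() > 0:
        h = torch.relu(h)
    else:
        h = -h
    # Uncomment to drop into pdb here and inspect h, h.shape, or h.grad_fn:
    # breakpoint()
    return h.sum()

x = torch.ones(2, 3)
w = torch.ones(3, 1, requires_grad=True)

loss = forward(x, w)
loss.backward()  # gradients flow through whichever branch actually ran

print(loss.item(), w.grad.flatten().tolist())  # 6.0 [2.0, 2.0, 2.0]
```

Because the graph is built as the code runs, the values seen in the debugger are real tensors rather than symbolic placeholders.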

4. Data Parallelism

It can distribute computational tasks across multiple GPUs. This is possible using the data parallelism feature (torch.nn.DataParallel), which wraps any module and splits each input batch across the available devices for parallel processing.
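A minimal sketch of wrapping a module with `torch.nn.DataParallel` follows. The layer sizes and batch shape are arbitrary assumptions, and the code falls back to a single device when fewer than two GPUs are visible, so it should also run on a CPU-only machine.

```python
import torch
import torch.nn as nn

model = nn.Linear(10, 2)  # placeholder model; any nn.Module can be wrapped

# Wrap only when more than one GPU is visible; otherwise use the model as-is.
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = model.to(device)

batch = torch.randn(8, 10, device=device)
out = model(batch)  # under DataParallel the batch is split along dimension 0

print(out.shape)  # torch.Size([8, 2])
```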

5. Useful Libraries

It has a large community of developers and researchers who have built tools and libraries that extend PyTorch. This community helps develop computer vision, reinforcement learning, and NLP tools for research and production purposes. Some of the popular libraries are GPyTorch, BoTorch, and AllenNLP. The rich set of powerful APIs makes the PyTorch framework easy to extend.

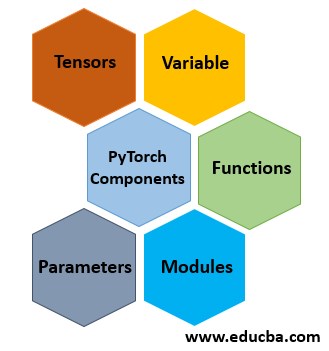

PyTorch Components

Let’s look into the five major components of PyTorch:

1. Tensors: Tensors are multi-dimensional arrays similar to NumPy arrays. They are available in Torch as typed tensors such as torch.IntTensor, torch.FloatTensor, torch.CharTensor, etc.

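2. Variable: A variable is a wrapper around a tensor that holds its gradient. It is available under torch.autograd as torch.autograd.Variable. Since PyTorch 0.4, Variable has been merged into Tensor, so a tensor created with requires_grad=True tracks gradients directly.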

3. Parameters: Parameters are a wrapper around a variable. They are used when we want a tensor to be a parameter of a module: a plain variable is not registered as a module parameter, and a plain tensor does not hold a gradient. Parameters are available under torch.nn as torch.nn.Parameter.

4. Functions: Functions perform transform operations and have no memory to store any state. A log function, for example, simply outputs the log of its input, whereas a linear layer is not a function because it stores weight and bias values. Examples of functions are torch.log, torch.sum, etc.; stateless versions of neural network operations are implemented under torch.nn.functional.

5. Modules: torch.nn.Module is the base class for all neural networks. A module can contain other modules, parameters, and functions, and it can store state and learnable weights. Modules are transformations such as torch.nn.Conv2d, torch.nn.Linear, etc.
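The components listed above can be sketched together in a few lines. This is an illustrative example, not a canonical pattern: the `Scale` class and its sizes are hypothetical, and gradient tracking is shown with `requires_grad=True` on a plain tensor, which is how recent PyTorch versions cover the role of `Variable`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Tensor with gradient tracking (the job a Variable wrapper used to do).
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = (x ** 2).sum()
y.backward()
print(x.grad)  # tensor([2., 4., 6.])

# Function: stateless, output depends only on the input.
log_one = torch.log(torch.tensor(1.0))  # log(1) = 0

class Scale(nn.Module):  # hypothetical module, for illustration only
    def __init__(self):
        super().__init__()
        # Parameter: a tensor registered as a learnable weight of the module.
        self.weight = nn.Parameter(torch.ones(3))
        # A module can contain other modules, which hold their own state.
        self.linear = nn.Linear(3, 1)

    def forward(self, inp):
        # F.relu is a stateless function from torch.nn.functional.
        return self.linear(F.relu(inp * self.weight))

m = Scale()
names = [name for name, _ in m.named_parameters()]
print(names)  # ['weight', 'linear.weight', 'linear.bias']

out = m(torch.randn(4, 3))
print(out.shape)  # torch.Size([4, 1])
```

Assigning an `nn.Parameter` as an attribute is what makes it show up in `named_parameters()`; a plain tensor attribute would not be picked up by an optimizer.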

Advantages and Disadvantages of PyTorch

Some advantages and disadvantages are given below:

Advantages

- It is easy to learn and simpler to code.

- Rich set of powerful APIs to extend the PyTorch libraries.

- It has computational graph support at runtime.

- It is flexible and fast, and it provides optimizations.

- It has support for GPU and CPU.

- Easy to debug using Python IDEs and debugging tools.

- It supports cloud platforms.

Disadvantages

- It was released in 2016, so it is newer than some other frameworks, has fewer users, and is less widely known.

- It lacks a monitoring and visualization tool of its own, relying instead on external tools such as TensorBoard (available through torch.utils.tensorboard).

- Other frameworks have a larger developer community compared to this one.

Applications of PyTorch

1. Computer Vision

Developers use a convolutional neural network (CNN) for various applications, including image classification, object detection, and generative tasks. Using PyTorch, a programmer can process images and videos to develop a highly accurate and precise computer vision model.
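As a rough sketch of what an image classifier looks like in PyTorch, the example below defines a small CNN and runs it on a batch of random tensors standing in for images. The `TinyCNN` name, the layer sizes, and the 32x32 RGB input shape are all assumptions made for illustration.

```python
import torch
import torch.nn as nn

class TinyCNN(nn.Module):  # hypothetical model, for illustration only
    def __init__(self, num_classes=10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),   # 32x32 -> 16x16
            nn.Conv2d(16, 32, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),   # 16x16 -> 8x8
        )
        self.classifier = nn.Linear(32 * 8 * 8, num_classes)

    def forward(self, x):
        x = self.features(x)
        # Flatten everything except the batch dimension before the linear layer.
        return self.classifier(torch.flatten(x, 1))

images = torch.randn(4, 3, 32, 32)  # random stand-in for 4 RGB images
logits = TinyCNN()(images)
print(logits.shape)  # torch.Size([4, 10])
```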

2. Natural Language Processing

Developers can use it to build language translators, language models, and chatbots. It uses architectures such as RNN and LSTM to develop natural language processing models.
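A minimal sketch of an LSTM-based text classifier is shown below. The `TextClassifier` name, vocabulary size, and dimensions are hypothetical, and random integer token ids stand in for real tokenized text.

```python
import torch
import torch.nn as nn

class TextClassifier(nn.Module):  # hypothetical model, for illustration only
    def __init__(self, vocab_size=1000, embed_dim=32, hidden_dim=64, num_classes=2):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.lstm = nn.LSTM(embed_dim, hidden_dim, batch_first=True)
        self.out = nn.Linear(hidden_dim, num_classes)

    def forward(self, token_ids):
        embedded = self.embed(token_ids)   # (batch, seq_len, embed_dim)
        _, (h_n, _) = self.lstm(embedded)  # h_n: (num_layers, batch, hidden_dim)
        # Classify from the final hidden state of the last layer.
        return self.out(h_n[-1])

tokens = torch.randint(0, 1000, (4, 12))  # 4 sequences of 12 random token ids
logits = TextClassifier()(tokens)
print(logits.shape)  # torch.Size([4, 2])
```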

3. Reinforcement Learning

It is used in robotics for automation and robot motion control, in business strategy planning, and so on. It uses architectures such as Deep Q-learning to build models.
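To give a feel for Deep Q-learning in PyTorch, here is a simplified sketch of a single training step on a batch of transitions. It is not a full agent: the transitions are random placeholders rather than data from an environment, the dimensions are arbitrary, and the same network is used for the target values, whereas practical implementations usually keep a separate target network and a replay buffer.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

state_dim, n_actions, gamma = 4, 2, 0.99  # assumed sizes and discount factor

# Q-network: maps a state to one estimated value per action.
q_net = nn.Sequential(
    nn.Linear(state_dim, 32),
    nn.ReLU(),
    nn.Linear(32, n_actions),
)
optimizer = torch.optim.Adam(q_net.parameters(), lr=1e-3)

# Placeholder batch of 8 transitions (state, action, reward, next_state, done).
states = torch.randn(8, state_dim)
actions = torch.randint(0, n_actions, (8,))
rewards = torch.randn(8)
next_states = torch.randn(8, state_dim)
dones = torch.zeros(8)

# Q-value of the action that was actually taken in each state.
q_taken = q_net(states).gather(1, actions.unsqueeze(1)).squeeze(1)

# Temporal-difference target: r + gamma * max_a Q(s', a), zeroed at episode end.
with torch.no_grad():
    next_max = q_net(next_states).max(dim=1).values
    target = rewards + gamma * (1 - dones) * next_max

loss = F.mse_loss(q_taken, target)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```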

Conclusion

It is one of the primary frameworks for deep learning, natural language processing, etc. In the future, more and more researchers and developers will be learning and implementing PyTorch. Its syntax is similar to that of other standard programming languages; hence, it is easy to learn and makes it easier to transition into AI or machine learning engineering.
