Updated March 8, 2023

Definition of Naive Bayes in Machine Learning

Naive Bayes in machine learning is a probabilistic, supervised learning technique used mostly for classification, although it can be applied to regression as well (by force fit, of course!). The word “naïve” is placed in front of the algorithm’s name because it assumes that the features going into the model are independent of each other (more precisely, conditionally independent given the target class); in other words, a change in one variable does not affect any of the others. This assumption is a strong one and rarely holds exactly in real data, where one variable usually does influence another, yet it is precisely what makes Naïve Bayes a simple yet very powerful algorithm. Because of its low complexity, it is a go-to choice when one has to respond to a request quickly or needs to compute basic yet powerful insights from the data!

How Does Naive Bayes Work in Machine Learning?

In the introduction, we saw that Naïve Bayes is a simple algorithm that assumes the factors that are part of the analysis are independent of each other, which explains the “Naïve” in its name. But what about the other word, “Bayes”? To understand the Naïve Bayes algorithm more deeply, we first need to understand Bayes’ theorem of conditional probability.

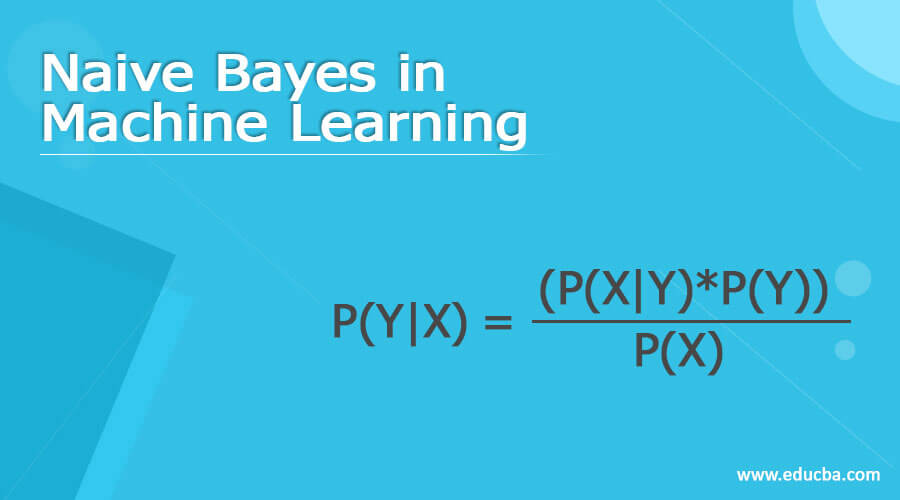

Let us understand Naïve Bayes with an example. Take the very generic example of rolling a die. What is the probability that we get a 2? Since there is one “2” on the die out of 6 possible outcomes, the probability is 1/6. Now let us extend the example a bit. Suppose we are playing a guessing game in which one can ask some questions and, based on the answers, has to guess the pattern (suit) on a playing card. At first there are 4 possible suits and only one is correct, so the probability of guessing right is ¼. Now say we have already learned the color of the card. Only 2 suits have that color and one of them must be correct, so the probability of being right is ½, which is clearly more than ¼. Did you notice the difference between the two cases? In the case of the die there is no condition, whereas in the case of the card the condition is that the color is already known. The probability of an event occurring given that some condition holds is known as conditional probability, and Bayes’ theorem tells us how to compute it from the reverse conditional probability. The mathematical equation is:
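The card example can be checked by brute-force counting: restrict the equally likely outcomes to those consistent with the condition, then count how many of them satisfy the event. The sketch below assumes the “pattern” is one of the four suits of a standard deck and the “color” is red or black; the names `SUITS`, `prob`, `is_hearts` and `is_red` are illustrative, not from any library.

```python
from fractions import Fraction

# Assumption: the four suits of a standard deck, each mapped to its color.
SUITS = {"hearts": "red", "diamonds": "red", "clubs": "black", "spades": "black"}


def prob(event, condition=lambda suit: True):
    """P(event | condition), counting equally likely suits."""
    allowed = [s for s in SUITS if condition(s)]    # outcomes consistent with the condition
    favourable = [s for s in allowed if event(s)]   # of those, outcomes where the event holds
    return Fraction(len(favourable), len(allowed))


def is_hearts(suit):
    return suit == "hearts"


def is_red(suit):
    return SUITS[suit] == "red"


print(prob(is_hearts))          # no condition: 1/4
print(prob(is_hearts, is_red))  # color known to be red: 1/2
```

Knowing the color halves the set of possible suits, which is why the probability doubles.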

$$P(Y \mid X) = \frac{P(X \mid Y)\,P(Y)}{P(X)}$$

It is read as: the probability of Y happening given that X has happened equals the probability of X happening given that Y has occurred, multiplied by the probability of Y occurring, divided by the probability of X happening. Here, P(Y|X) is known as the posterior probability, P(X|Y) as the likelihood, P(Y) as the prior probability, and P(X) as the probability of the evidence.
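Bayes’ theorem translates directly into a one-line function. A minimal sketch applied to the card example, assuming Y is “the suit is hearts” and X is “the card is red”; `bayes_posterior` is a hypothetical helper name.

```python
from fractions import Fraction


def bayes_posterior(likelihood, prior, evidence):
    """Bayes' theorem: P(Y|X) = P(X|Y) * P(Y) / P(X)."""
    if evidence == 0:
        raise ValueError("P(X) must be non-zero")
    return likelihood * prior / evidence


# Card example: Y = "suit is hearts", X = "card is red".
p_red_given_hearts = Fraction(1)   # likelihood P(X|Y): every heart is red
p_hearts = Fraction(1, 4)          # prior P(Y): one of four suits
p_red = Fraction(1, 2)             # evidence P(X): two of four suits are red

print(bayes_posterior(p_red_given_hearts, p_hearts, p_red))  # 1/2
```

The result matches the ½ from the guessing game: the theorem recovers P(Y|X) from the reverse conditional P(X|Y).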

Keeping the above theorem in mind, let us see how Naïve Bayes works. In real-world problems, the data usually has multiple X variables. Because Naïve Bayes assumes these X variables are independent of each other given Y, the likelihood P(X_1, X_2, …, X_n | Y) splits into a product of the individual likelihoods P(X_i | Y). The equation then looks like:

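$$P(Y \mid X_1, X_2, \ldots, X_n) = \frac{P(X_1 \mid Y)\,P(X_2 \mid Y)\cdots P(X_n \mid Y)\,P(Y)}{P(X_1, X_2, \ldots, X_n)}$$

The denominator does not depend on Y, so it is the same for every class; to decide which class is most likely, it is enough to compare the numerators.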
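In code, the evidence term rarely needs to be computed separately: summing the numerators over all classes gives it under the naive model (law of total probability), and dividing by that sum turns the scores into posteriors. A minimal sketch; `naive_bayes_posteriors` is a hypothetical name, and the two classes and all probability values are made-up numbers chosen only for illustration.

```python
import math


def naive_bayes_posteriors(priors, likelihoods):
    """Posterior P(Y | X_1..X_n) for each class Y under the naive assumption.

    priors:      {class: P(Y)}
    likelihoods: {class: [P(X_1|Y), ..., P(X_n|Y)]} for the observed feature values
    """
    # Numerator for each class: P(Y) times the product of per-feature likelihoods.
    scores = {y: priors[y] * math.prod(likelihoods[y]) for y in priors}
    # Summing the numerators over all classes gives P(X_1..X_n) under the model.
    evidence = sum(scores.values())
    return {y: score / evidence for y, score in scores.items()}


# Hypothetical numbers: two classes, three observed features.
priors = {"A": 0.6, "B": 0.4}
likelihoods = {"A": [0.5, 0.2, 0.9], "B": [0.3, 0.7, 0.4]}

posteriors = naive_bayes_posteriors(priors, likelihoods)
print(posteriors)                           # posteriors sum to 1
print(max(posteriors, key=posteriors.get))  # most probable class
```

With many features, the product of small probabilities can underflow; implementations often sum log-probabilities instead, which does not change which class wins.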

With this context set, let us take a classification example to fully understand how Naïve Bayes works. The process takes place in 3 steps. Let us look at the steps first and then work through an example for a hands-on understanding.

First, a frequency table is built from the categories of the features that go into the model. For example, if a column has 3 categories, each category is taken and its frequency of occurrence in each class of the target variable is tabulated.

Next, probabilities are calculated from these frequencies: the likelihood of each category within each class of the target variable, the prior probability of each class, and the overall probability of each category.

Finally, when a new data point is sent for prediction, Bayes’ theorem combines these probabilities to calculate the posterior probability of each class, and the class with the highest posterior probability is the prediction.
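The three steps can be sketched for categorical features as follows. This is a minimal illustration, not a library API: `fit` and `predict` are hypothetical names, features are assumed to be categorical values stored in tuples, and exact fractions are used so the numbers can be compared with hand calculations. No smoothing is applied, so a category never seen with a class gets a likelihood of zero.

```python
from collections import Counter, defaultdict
from fractions import Fraction


def fit(rows, labels):
    """Steps 1 and 2: build frequency tables, then priors and likelihoods.

    rows:   list of tuples of categorical feature values
    labels: list of target classes, one per row
    """
    class_counts = Counter(labels)
    # Step 1: freq[i][(value, cls)] = how often feature i takes `value` in class `cls`.
    freq = defaultdict(Counter)
    for row, cls in zip(rows, labels):
        for i, value in enumerate(row):
            freq[i][(value, cls)] += 1

    # Step 2: convert counts to probabilities.
    n = len(labels)
    priors = {cls: Fraction(k, n) for cls, k in class_counts.items()}

    def likelihood(i, value, cls):
        # P(feature i = value | class = cls)
        return Fraction(freq[i][(value, cls)], class_counts[cls])

    return priors, likelihood


def predict(priors, likelihood, row):
    """Step 3: posterior of each class via Bayes' theorem, normalised over classes."""
    scores = {}
    for cls, prior in priors.items():
        score = prior
        for i, value in enumerate(row):
            score *= likelihood(i, value, cls)
        scores[cls] = score
    evidence = sum(scores.values())
    if evidence == 0:
        raise ValueError("these feature values have zero probability under every class")
    return {cls: s / evidence for cls, s in scores.items()}


# Usage with the single income feature from the gadget example in this article.
income = ["Low", "Mid", "High", "High", "Mid", "Low", "Mid",
          "High", "Low", "Mid", "Mid", "Low", "High", "High"]
bought = ["Yes", "Yes", "Yes", "Yes", "No", "No", "Yes",
          "Yes", "No", "No", "Yes", "No", "Yes", "Yes"]
priors, likelihood = fit([(x,) for x in income], bought)
print(predict(priors, likelihood, ("Low",)))  # Yes: 1/4, No: 3/4
```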

It is now time to look at the steps with an example. Let us see whether a person is likely to buy a gadget, given their income status:

Original Data:

| Income Status | Gadget Bought? |
|---|---|
| Low | Yes |
| Mid | Yes |
| High | Yes |
| High | Yes |
| Mid | No |
| Low | No |
| Mid | Yes |
| High | Yes |
| Low | No |
| Mid | No |
| Mid | Yes |
| Low | No |
| High | Yes |
| High | Yes |

Step 1: Frequency table creation

| Income Group | Yes | No |
|---|---|---|
| High | 5 | 0 |
| Mid | 3 | 2 |
| Low | 1 | 3 |
| Total | 9 | 5 |
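Step 1 amounts to counting (income group, class) pairs. A minimal sketch using Python’s standard `collections.Counter`; the variable names are illustrative, and the rows are transcribed from the original data table.

```python
from collections import Counter

# Rows from the original data table: (income status, gadget bought?)
data = [
    ("Low", "Yes"), ("Mid", "Yes"), ("High", "Yes"), ("High", "Yes"),
    ("Mid", "No"), ("Low", "No"), ("Mid", "Yes"), ("High", "Yes"),
    ("Low", "No"), ("Mid", "No"), ("Mid", "Yes"), ("Low", "No"),
    ("High", "Yes"), ("High", "Yes"),
]

# Count how often each (income group, class) pair occurs.
freq = Counter(data)
totals = Counter(label for _, label in data)

# Print the frequency table in the same layout as above.
print("| Income Group | Yes | No |")
for group in ("High", "Mid", "Low"):
    print(f"| {group} | {freq[(group, 'Yes')]} | {freq[(group, 'No')]} |")
print(f"| Total | {totals['Yes']} | {totals['No']} |")
```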

Step 2: Probability calculation for the income groups

| Income Group | Yes | No | P(Income Group) |
|---|---|---|---|
| High | 5 | 0 | 5/14 = 0.36 |
| Mid | 3 | 2 | 5/14 = 0.36 |
| Low | 1 | 3 | 4/14 = 0.29 |
| Total (prior) | 9/14 = 0.64 | 5/14 = 0.36 | |
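The Step 2 probabilities can be computed exactly with Python’s standard `fractions.Fraction` and rounded only for display. A minimal sketch with illustrative variable names; note that `Fraction` reduces automatically, so 4/14 is shown as 2/7. The likelihoods P(group | class), which Step 3 needs, are computed as well.

```python
from collections import Counter
from fractions import Fraction

# Rows from the original data table: (income status, gadget bought?)
data = [
    ("Low", "Yes"), ("Mid", "Yes"), ("High", "Yes"), ("High", "Yes"),
    ("Mid", "No"), ("Low", "No"), ("Mid", "Yes"), ("High", "Yes"),
    ("Low", "No"), ("Mid", "No"), ("Mid", "Yes"), ("Low", "No"),
    ("High", "Yes"), ("High", "Yes"),
]
n = len(data)

group_counts = Counter(group for group, _ in data)
class_counts = Counter(label for _, label in data)
pair_counts = Counter(data)

# Evidence P(group) and prior P(class), kept as exact fractions.
p_group = {g: Fraction(k, n) for g, k in group_counts.items()}
p_class = {c: Fraction(k, n) for c, k in class_counts.items()}

# Likelihood P(group | class) = count of (group, class) / count of class.
likelihood = {
    (g, c): Fraction(pair_counts[(g, c)], class_counts[c])
    for g in group_counts
    for c in class_counts
}

for g in ("High", "Mid", "Low"):
    print(f"P({g}) = {p_group[g]} ~ {float(p_group[g]):.2f}")
for c in ("Yes", "No"):
    print(f"P({c}) = {p_class[c]} ~ {float(p_class[c]):.2f}")
print("P(Mid | Yes) =", likelihood[("Mid", "Yes")])  # 3/9, shown reduced as 1/3
```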

Step 3: Whenever a new data point comes in, we can use the posterior probability formula to estimate whether the person will buy the gadget or not. Let us say the new data point has the income group Mid, and we need to find out whether the person will buy a gadget.

P(Yes|Mid) = P(Mid|Yes)*P(Yes)/P(Mid)

Here,

P(Mid|Yes) = 3/9 [Total frequency of Mid in Yes = 3, and total frequency in Yes = 9]

P(Yes) = 9/14

P(Mid) = 5/14

Substituting the values, we get P(Yes|Mid) = (3/9*9/14)/(5/14) = 3/5

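Similarly, P(No|Mid) = P(Mid|No)*P(No)/P(Mid) = (2/5*5/14)/(5/14) = 2/5 [Total frequency of Mid in No = 2, and total frequency in No = 5]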

So, we can conclude that a person in the Mid income category has a higher propensity to buy the gadget (3/5 versus 2/5).
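The Step 3 arithmetic can be double-checked with exact fractions. A minimal sketch in which every value is taken from the frequency table for a new person in the Mid income group; the variable names are illustrative.

```python
from fractions import Fraction

# Values read off the frequency table for income group "Mid".
p_mid_given_yes = Fraction(3, 9)   # 3 of the 9 "Yes" rows are Mid
p_mid_given_no = Fraction(2, 5)    # 2 of the 5 "No" rows are Mid
p_yes, p_no = Fraction(9, 14), Fraction(5, 14)
p_mid = Fraction(5, 14)            # 5 of the 14 rows are Mid

# Bayes' theorem for each class.
p_yes_given_mid = p_mid_given_yes * p_yes / p_mid
p_no_given_mid = p_mid_given_no * p_no / p_mid
print(p_yes_given_mid, p_no_given_mid)  # 3/5 2/5

# The two posteriors cover every outcome, so they must sum to 1.
assert p_yes_given_mid + p_no_given_mid == 1
print("Yes" if p_yes_given_mid > p_no_given_mid else "No")  # Yes
```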

Conclusion

In this article, we walked through Naïve Bayes with an example to understand the core idea behind the algorithm. This simple yet powerful method gives us a propensity score for each class, and the concept extends easily to multiclass classification, although using it for regression may be a force fit!
