Updated March 15, 2023

Introduction to Scikit Learn KMeans

The following article provides an outline for Scikit Learn KMeans. Kmeans comes under the unsupervised learning algorithm of machine learning; commonly kmeans algorithm is used for partitioning the dataset as per our requirement where we can say that every data point belongs to only one group. That means we can say it is unlabeled data or without defined categories. Usually, the main goal of this algorithm is to find the different groups in the data.

Key Takeaways

- It is very easy and simple to implement.

- The kmeans algorithm depends on the initial values for more accurate results.

- We can quickly scale as per our requirements if we have a huge dataset.

- We can also do the manual implementation of Kmeans algorithm.

Overview of Scikit Learn KMeans

KMeans is a sort of solo realization utilized when you have unlabeled information (i.e., information without characterized classifications or gatherings). This calculation aims to track down bunches in the information, with the number of gatherings addressed by the variable.

K-implies bunching is a sort of unaided realization utilized when you have unlabeled information (i.e., information without characterized classifications or gatherings). This calculation aims to track down bunches in the information, with the number of gatherings addressed by the variable K. The calculation works iteratively to dole out every information highlighting one of K gatherings in light of the given elements. Information focuses are bunched given component likeness. The centroids of the K bunches can utilize to mark new information. Marks for the preparation information (every information point is doled out to a solitary group)

Rather than characterizing bunches before taking a gander at the information, bunching permits you to find and dissect the gatherings that have shaped naturally. The “Picking K” area below portrays how the number of gatherings is still in the air. Every centroid of a bunch is an assortment of component values that characterize the subsequent gatherings. Inspecting the centroid, including loads, can be utilized to decipher what sort of gathering each bunch addresses subjectively.

How Scikit Learn Clustering KMeans work?

Let’s see how clustering works in kmeans:

1. Load the Data

First, we need to load the data we want, So we can easily read and view the required data with the help of different python libraries like pandas. In the next step, we need to Preprocess the data. Before passing the information into any model, it is important to ensure it is perfect. At the point when the contribution to the model is trash, the result is likewise trash.

In the third step, we can remove the unnecessary columns per our requirement. The sections are the elements of every one of the melodies whose names and specialists are given. It is critical to know this information outline to check each component’s potential qualities. That is because they influence the assembly of the model. It is wiser to reject the segments that don’t check out concerning them as group elements. In this step, we can fetch the data as per our requirements.

2. Now Apply the Transformation

The distinctions in the scopes of the elements make a predisposition in that a component that takes huge qualities has a higher weight, and, consequently, a higher effect on the calculation. We need to let the model treat every one of the highlights similarly without inclinations. That’s what to do; we want to standardize the information. In the next step, we need to select the number cluster per our requirement to use the Inertia or the Silhouette coefficient method. In the last step, we need to apply the KMeans Clustering method, or we can say that KMeans functions.

Scikit Learn KMeans Data

Data naming is the cycle of taking crude data and adding at least one significant piece of data to it, similar to whether a picture shows the essence of an individual. As you can envision, data marking is a tedious errand, so most data shows up unlabeled. Luckily, a few measurable bunching procedures have been formed that bunch data into bunches given comparative qualities. Once bunched, data can be used to acquire significant experiences and train administered AI calculations.

Example:

Code:

from sklearn.datasets import make_blobs

from sklearn.cluster import KMeans

from matplotlib import pyplot as plt

import numpy as np

import seaborn as sns

sns.set_style("darkgrid")

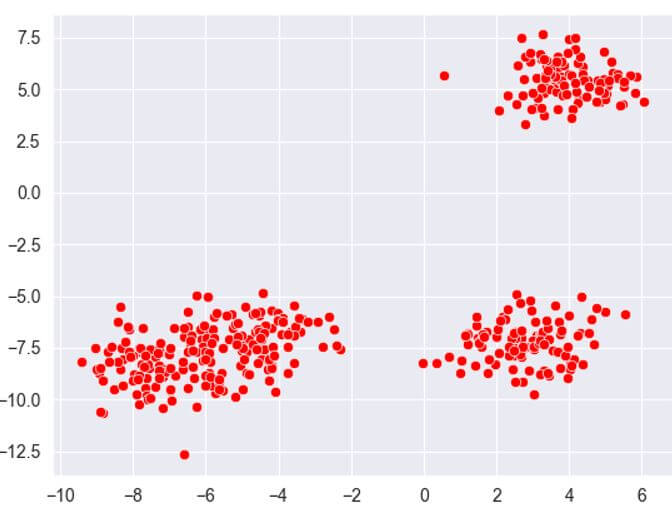

X, y = make_blobs(n_samples=400, centers=4, cluster_std = 1.01)

sns.scatterplot(x=X[:,0], y=X[:,1], c =["red"])Explanation:

- After execution, we get the following result as shown in the below screenshot.

Output:

Now add this code for KMeans as below:

Code:

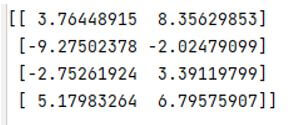

samplemodel = KMeans(n_clusters=4)

samplemodel.fit(X)

print(samplemodel.cluster_centers_)Output:

Scikit Learn KMeans Parameters (Clustering)

Given below are the scikit learn kmeans parameters:

- number_of_clusters: int, default=8: This is nothing but used to show the number of clusters as well as how many centroids are to be generated.

- number_of _initint, default=10: It is used to determine how many times we need to run the Kmeans algorithm with different centroid values.

- maximum_itr: int, default=300: It is used to show the maximum number of iterations of the Kmeans algorithm for a single execution.

- tol: float, default=1e-4: During the execution of consecutive iterations we need to define the relative tolerance.

- verbose: We can apply verbosity mode whenever required.

- random_case: With the help of this parameter we can define the random number of generations.

- copy value: When we decide to pre-compute distance numerically should be accurate, if the value of copy is true then we cannot modify the original content and if the value of copy is false then we can modify the content as per our requirement.

FAQ

Given below are the FAQs mentioned:

Q1. What is clustering in kmeans?

Answer:

Basically, clustering is used to find the centroids from the dataset, in Kmeans algorithm we need to find out the nearest centroid values from the dataset so we can pick up random values that we want.

Q2. What is the main advantage of Kmeans?

Answer:

We know that Kmeans is nothing but the clustering algorithm used to find the groups of data to make the prediction of data.

Q3. What is kmeans classification algorithm?

Answer:

Basically, kmeans comes under the unsupervised category, in another word we can say that the dataset comes without marks and the information is grouped utilizing their inward construction.

Conclusion

In this article, we are trying to explore Scikit Learn Kmeans. We saw the basic ideas of Scikit Learn Kmeans as well as what are the uses, and features of these Scikit Learn Kmeans. Another point from the article is how we can see the basic implementation of Scikit Learn Kmeans.

Recommended Articles

This is a guide to Scikit Learn KMeans. Here we discuss the introduction, how to work scikit learn clustering KMeans? parameters and FAQ. You may also have a look at the following articles to learn more –