Updated April 7, 2023

Definition of PyTorch concatenate

Concatenation is one of the operations that PyTorch provides. In deep learning we often need to combine a sequence of tensors, and PyTorch's concatenate functionality does exactly that. Concatenating means joining a sequence of tensors along a given dimension. The main requirement is that all tensors must have the same shape except in the concatenating dimension, or be empty. PyTorch provides the torch.cat() function to concatenate tensors. It takes three parameters: tensors, dim, and out.
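The shape requirement described above can be checked with a small sketch. The tensor names and sizes here are made up for illustration; the only library call assumed is torch.cat() itself.

```python
import torch

a = torch.zeros(2, 3)
b = torch.ones(4, 3)

# The shapes agree in every dimension except dim 0, so this works.
rows = torch.cat((a, b), dim=0)
print(rows.shape)  # torch.Size([6, 3])

# Along dim 1 the sizes in dim 0 differ (2 vs 4), so torch.cat raises an error.
try:
    torch.cat((a, b), dim=1)
    mismatch_raised = False
except RuntimeError as err:
    mismatch_raised = True
    print("cannot concatenate:", err)
```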

Overview of PyTorch concatenate

torch.cat() concatenates the given sequence of tensors along the given dimension. All tensors must either have the same shape (except in the concatenating dimension) or be empty. The dim parameter (int, optional) is the dimension over which the tensors are concatenated. The tensors parameter (sequence of Tensors) is any Python sequence of tensors of the same type. In other words, we use PyTorch cat to concatenate a list of PyTorch tensors along a given dimension. For example, we can call the cat function with a list of two tensors x and y and concatenate them across the third dimension.
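As a rough illustration of concatenating x and y across the third dimension, the sketch below uses random 3-D tensors. The shapes are assumptions chosen for the example; note that the third dimension is dim=2 because dimensions are counted from 0.

```python
import torch

# x and y match in the first two dimensions and differ only in the third.
x = torch.randn(2, 3, 4)
y = torch.randn(2, 3, 5)

# The third dimension has index 2.
z = torch.cat([x, y], dim=2)
print(z.shape)  # torch.Size([2, 3, 9])
```

The first four slices of z along the last dimension come from x and the remaining five come from y.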

How to use PyTorch concatenate?

Now let's see how to use concatenation in deep learning. We already discussed what concatenation is in the section above, so here is the syntax.

torch.cat(tensors, dim=0, *, out=None)

Explanation

In the above syntax, we use the cat() function with the following parameters.

- tensors: The sequence of tensors to concatenate. This can be any Python sequence of tensors of the same type. Non-empty tensors must have the same shape, except in the concatenating dimension.

- dim: The dimension along which the tensors are concatenated. It is optional and defaults to 0.

- out: The output tensor that receives the result. It is optional and must be passed as a keyword argument.
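The parameters described above can be tried out with a short sketch. The tensor values and the name buffer are invented for this example; the output tensor is pre-allocated with the result's shape and dtype.

```python
import torch

a = torch.tensor([[1, 2], [3, 4]])
b = torch.tensor([[5, 6], [7, 8]])

# Without a dimension argument, concatenation happens along dimension 0.
default_dim = torch.cat((a, b))        # shape (4, 2)

# A negative dimension counts from the end, so -1 is the last dimension.
last_dim = torch.cat((a, b), dim=-1)   # shape (2, 4)

# The out argument writes the result into an existing tensor.
buffer = torch.empty(4, 2, dtype=a.dtype)
torch.cat((a, b), dim=0, out=buffer)
print(buffer)
```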

Concatenate several datasets

Now let's see how we can concatenate different datasets in PyTorch.

Concatenating data sets means joining two or more data sets, one after the other, into a single data set. The number of observations in the new data set is the sum of the number of observations in the original data sets. The order of observations is sequential: all observations from the first data set are followed by all observations from the second data set, and so on.

In the simplest case, all input data sets contain the same variables. If the data sets contain different variables, observations from one data set have missing values for variables defined only in the other data sets. In either case, the variables in the new data set are the same as the variables in the original data sets.

Data merging is the process of combining two or more data sets into a single data set. This is usually necessary when you have raw data stored in multiple files, worksheets, or data tables that you want to analyze all at once.
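One way to join datasets one after the other in PyTorch is torch.utils.data.ConcatDataset. The sketch below is only an illustration under that assumption, since the article does not name a specific class; the toy datasets first and second are hypothetical.

```python
import torch
from torch.utils.data import ConcatDataset, TensorDataset

# Two toy datasets with 3 and 5 observations (values chosen for illustration).
first = TensorDataset(torch.arange(0, 3))
second = TensorDataset(torch.arange(10, 15))

combined = ConcatDataset([first, second])

# The length is the sum of the lengths of the original datasets.
print(len(combined))  # 8

# Observations from the first dataset come first, then those from the second.
print(combined[2], combined[3])
```

Index 2 is the last observation of first and index 3 is the first observation of second, which matches the sequential ordering described above.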

A related question: in PyTorch, is it theoretically possible to "merge" different models into one model, effectively combining everything learned so far? The models are exactly identical, but each is trained on a different part of the training data.

If so, would it be possible to split a dataset into two halves and distribute training across multiple computers, similar to Folding@home? Would the new model be as good as one trained without distribution?

PyTorch concatenate Examples

Now let's look at some examples of concatenation for better understanding.

Example #1

Code:

import numpy as np

tensor1 = np.array([1, 2, 3])

tensor2 = np.array([4, 5, 6])

tensor3 = np.array([7, 8, 9])

out = np.concatenate((tensor1, tensor2, tensor3), axis=0)

print(out)

Explanation

In the above example, we first import NumPy. After that, we declare three arrays named tensor1, tensor2, and tensor3. We then use NumPy's concatenate function, the NumPy counterpart of torch.cat(), to merge all three arrays along axis 0. The program prints [1 2 3 4 5 6 7 8 9].

Now let’s see another example as follows.

Example #2

Code:

import torch

from torch import tensor

X = torch.tensor([5, 5, 5])

Y = torch.tensor([6, 6, 6])

XY = torch.cat((X, Y), 0)

YX = torch.cat((Y, X), 0)

print('The tensor of XY After Concatenation:', XY)

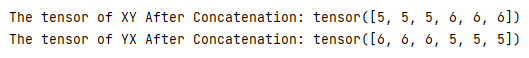

print('The tensor of YX After Concatenation:', YX)Explanation

In the above example, we try to implement the concatenate function, here first we import the torch package. After that, we declared two tensors XY and YX as shown. In this example, we use a torch.cat() function and here we declared dimension as 0. The final result of the above program we illustrated by using the following screenshot as follows.

Now suppose we need to work with three different tensors. In that case, we can use the following example.

Example #3

Code:

import torch

from torch import tensor

X = torch.tensor([5, 5, 5])

Y = torch.tensor([6, 6, 6])

Z = torch.tensor([7, 7, 7])

XY = torch.cat((X, Y), 0)

YX = torch.cat((Y, X), 0)

XZ = torch.cat((X, Z), 0)

print('The tensor of XY After Concatenation:', XY)

print('The tensor of YX After Concatenation:', YX)

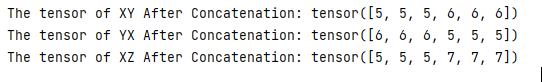

print('The tensor of XZ After Concatenation:', XZ)Explanation

In the above example, we try to concatenate the three datasets as shown, here we just added the third dataset or tensor as shown. The remaining all things are the same as the previous example. The final result of the above program we illustrated by using the following screenshot as follows.
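The example above joins the tensors two at a time. Since torch.cat() accepts a sequence of any length, all three tensors can also be merged in a single call. The following is a small sketch of that variation; the name XYZ is introduced here for illustration.

```python
import torch

X = torch.tensor([5, 5, 5])
Y = torch.tensor([6, 6, 6])
Z = torch.tensor([7, 7, 7])

# Pass all three tensors in one sequence to merge them in a single call.
XYZ = torch.cat((X, Y, Z), 0)

print('The tensor of XYZ After Concatenation:', XYZ)
# tensor([5, 5, 5, 6, 6, 6, 7, 7, 7])
```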

Conclusion

In this article, we covered the essential idea of PyTorch concatenate, the syntax of torch.cat(), and several examples. We also saw how and when to use it.
