Updated March 8, 2023

Definition of MongoDB Kafka Connector

The MongoDB Kafka connector lets MongoDB exchange data with external systems through Apache Kafka, which makes it a common choice for modern data architectures. With the connector, we can write data from an external system such as MongoDB into Kafka topics and, at the same time, stream data out of a Kafka topic, which means MongoDB data can be moved in either direction through Kafka. Apache Kafka itself is open source. With the help of a Kafka connector, we can send and receive data across a distributed network with high efficiency.

Syntax:

insert | upsert into specified collection name select field one, two, …, N from specified topic name

Explanation

In the above syntax, we use the insert or upsert command as shown. First we specify the name of the collection to write into. After that, we select the field names we need from the Kafka topic.
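To make the shape of the statement concrete, here is a minimal sketch that assembles it from its parts. It assumes, following the explanation above, that the statement writes into a collection and reads fields from a Kafka topic. The helper `build_statement` and the names `shop_topic`, `item_name`, and `quantity` are hypothetical and only serve the illustration.

```python
def build_statement(mode, collection, topic, fields):
    """Assemble an insert/upsert statement in the shape described above."""
    mode = mode.lower()
    if mode not in ("insert", "upsert"):
        raise ValueError(f"unsupported mode: {mode}")
    if not fields:
        raise ValueError("at least one field is required")
    # Fields are joined with commas, exactly as in the syntax line.
    return f"{mode} into {collection} select {', '.join(fields)} from {topic}"


# Hypothetical names, used only for illustration.
statement = build_statement("upsert", "shop_mart", "shop_topic", ["item_name", "quantity"])
print(statement)
```

Building the statement from a list of fields keeps the field selection in one place, which helps when the same topic feeds several collections.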

How Kafka connector works in MongoDB?

Now let’s see how the Kafka connector works in MongoDB. The MongoDB Kafka Connect integration provides two connectors: Source and Sink.

- Source connector: It pulls data from a MongoDB collection (which acts as the source) and writes it to a Kafka topic.

- Sink connector: It processes the data in one or more Kafka topics and persists it to another MongoDB collection (which acts as the sink).

These connectors can also be used independently, but in this article we use them together to build an end-to-end solution.
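The flow through the two connectors can be pictured with a small in-memory sketch. This is not the connector itself: a `deque` stands in for the Kafka topic and plain lists stand in for the MongoDB collections, and the function names are hypothetical.

```python
from collections import deque


def source_connector(collection, topic):
    """Copy every document from the source collection onto the topic."""
    for doc in collection:
        topic.append(dict(doc))


def sink_connector(topic, collection):
    """Drain the topic and persist each record into the sink collection."""
    while topic:
        collection.append(topic.popleft())


source_collection = [{"_id": 1, "item": "laptop", "quantity": 20}]
topic = deque()        # stands in for a Kafka topic
sink_collection = []   # stands in for the target MongoDB collection

source_connector(source_collection, topic)
sink_connector(topic, sink_collection)
print(sink_collection)
```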

Basically, there are two modes as follows.

1. Insert Mode:

Kafka can currently provide exactly-once delivery semantics; however, to ensure that no errors are produced when unique constraints have been implemented on the target collection, the sink can run in UPSERT mode instead. If the error policy has been set to NOOP, the sink discards the error and continues processing; however, it currently makes no attempt to distinguish violations of integrity constraints from other exceptions such as casting issues.

2. Upsert Mode:

The connector supports upserts, which replace the existing record if a match is found on the primary keys. If records are delivered with the same field or group of fields that is used as the primary key on the target collection, but with different values, the existing record in the target collection is updated.
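The difference between the two modes can be sketched with a dictionary keyed by primary key. This is a simplified model under stated assumptions, not the connector's code: the `write` function, the `DuplicateKeyError` class, and the `noop_on_error` flag are hypothetical names that mimic the behavior described above.

```python
class DuplicateKeyError(Exception):
    """Raised when insert mode meets an existing primary key."""


def write(collection, record, key, mode="insert", noop_on_error=False):
    """Write one record into `collection`, a dict keyed by primary key.

    Returns True if the record was stored and False if it was discarded.
    """
    pk = record[key]
    if mode == "upsert":
        # Upsert replaces the existing record when the key matches.
        collection[pk] = record
        return True
    if pk in collection:
        if noop_on_error:
            # NOOP-style policy: swallow the error and keep processing.
            return False
        raise DuplicateKeyError(f"duplicate key: {pk!r}")
    collection[pk] = record
    return True


target = {}
write(target, {"id": 1, "quantity": 20}, key="id")
skipped = write(target, {"id": 1, "quantity": 4}, key="id", noop_on_error=True)
write(target, {"id": 1, "quantity": 4}, key="id", mode="upsert")
print(target)
```

In insert mode the second write is discarded, so the stored quantity stays at 20 until the upsert replaces the record.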

Now let’s see some other important components as follows.

TLS/SSL:

TLS/SSL is supported by setting ?ssl=true in the connect.mongo.connection option. The MongoDB driver then attempts to load the trust store and key store using the JVM system properties.

You need to set the JVM system properties to ensure that the client can validate the SSL certificate presented by the server:

- javax.net.ssl.trustStore: the path to a trust store containing the certificate of the signing authority

- javax.net.ssl.trustStorePassword: the password to access this trust store

- javax.net.ssl.keyStore: the path to a key store containing the client’s SSL certificates

- javax.net.ssl.keyStorePassword: the password to access this key store
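As a rough sketch, the four properties above can be turned into `-D` flags for the JVM that runs the connector. The helper name, the store path, and the password below are placeholders, and how the flags reach the JVM (for example through an environment variable such as KAFKA_OPTS) depends on how the worker is launched.

```python
def jvm_ssl_flags(trust_store, trust_store_password,
                  key_store=None, key_store_password=None):
    """Build -D flags for the JVM system properties listed above."""
    props = {
        "javax.net.ssl.trustStore": trust_store,
        "javax.net.ssl.trustStorePassword": trust_store_password,
    }
    if key_store is not None:
        # The key store is only needed when the client presents a certificate.
        props["javax.net.ssl.keyStore"] = key_store
        props["javax.net.ssl.keyStorePassword"] = key_store_password
    return " ".join(f"-D{name}={value}" for name, value in props.items())


# Placeholder path and password, for illustration only.
flags = jvm_ssl_flags("/path/to/truststore.jks", "changeit")
# Connection string with ?ssl=true, as described above (host and port assumed).
connection = "mongodb://localhost:27017/?ssl=true"
print(flags)
```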

Authentication Properties:

The mechanism can be set either in the connection string, which requires the password to be in plain text in the connection string, or through the connect.mongo.auth.mechanism option.

If the username is set, it overrides the username/password set in the connection string, and connect.mongo.auth.mechanism takes precedence.
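One reading of the precedence rule above can be sketched as follows. The function `resolve_auth` and its parameters are hypothetical stand-ins for the connector options, and the sketch assumes the connection string carries the mechanism in the standard `authMechanism` query parameter.

```python
from urllib.parse import parse_qs, unquote, urlsplit


def resolve_auth(connection_string, username=None, password=None, mechanism=None):
    """Return the credentials that win under the precedence rule above."""
    parts = urlsplit(connection_string)
    query = parse_qs(parts.query)
    resolved = {
        "username": unquote(parts.username) if parts.username else None,
        "password": unquote(parts.password) if parts.password else None,
        "mechanism": query.get("authMechanism", [None])[0],
    }
    if username is not None:
        # An explicit username overrides what the connection string carries.
        resolved["username"] = username
        resolved["password"] = password
        if mechanism is not None:
            # Assumed: the separate mechanism option then takes precedence.
            resolved["mechanism"] = mechanism
    return resolved


uri = "mongodb://alice:pw@localhost:27017/shop?authMechanism=SCRAM-SHA-1"
print(resolve_auth(uri, username="bob", password="secret", mechanism="SCRAM-SHA-256"))
```

Keeping the password out of the connection string is the practical reason to prefer the separate options.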

JSON:

This option takes a list of fields that should be converted to ISODate on insertion into MongoDB (comma-separated field names). It applies to JSON topics only. Field values may be an integral epoch time or an ISO8601 datetime string with an offset (an offset or ‘Z’ is required). If the string does not parse as ISO, it is written as a string instead.

Subdocument fields can be referred to as in the following examples:

topLevelFieldName

topLevelSubDocument.FieldName

topLevelParent.subDocument.subDocument2.FieldName

If a field is converted to ISODate and that same field is named as a PK, then the PK field is also written as an ISODate.

This is controlled through the connect.mongo.json_datetime_fields option.
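The conversion rule can be approximated in a few lines. This is only a sketch of the described behavior, not the connector's implementation: it assumes integral epoch values are milliseconds, and the helper names and the sample document are hypothetical.

```python
from datetime import datetime, timezone


def to_iso_date(value):
    """Return a timezone-aware datetime, or the value unchanged if it cannot be parsed."""
    if isinstance(value, int) and not isinstance(value, bool):
        # Assumption: integral epoch values are milliseconds since 1970-01-01 UTC.
        return datetime.fromtimestamp(value / 1000, tz=timezone.utc)
    if isinstance(value, str):
        text = value[:-1] + "+00:00" if value.endswith("Z") else value
        try:
            parsed = datetime.fromisoformat(text)
        except ValueError:
            return value  # not ISO: keep it as a string
        # An offset (or 'Z') is required, so naive datetimes stay strings.
        return parsed if parsed.tzinfo is not None else value
    return value


def convert_fields(document, field_paths):
    """Convert each dotted field path in place; missing paths are skipped."""
    for path in field_paths:
        *parents, leaf = path.split(".")
        node = document
        for name in parents:
            node = node.get(name) if isinstance(node, dict) else None
            if node is None:
                break
        if isinstance(node, dict) and leaf in node:
            node[leaf] = to_iso_date(node[leaf])
    return document


doc = {"created": "2023-03-08T10:00:00Z", "order": {"shipped": 0}, "note": "not a date"}
convert_fields(doc, ["created", "order.shipped", "note"])
print(doc)
```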

Now let’s see some important key features of Kafka as follows.

- Scalability: We can scale Kafka as our requirements grow, without any downtime.

- Data Transformation: It provides KSQL for data transformation.

- Fault-Tolerant: Kafka uses brokers to replicate data and persists the data, which makes it a fault-tolerant system.

- Security: Kafka can be combined with different security measures such as Kerberos to stream data securely.

- Performance: Kafka is distributed and partitioned, and it has very high throughput for publishing and subscribing to messages.

Examples

Now let’s see the different examples of Kafka connectors in MongoDB for better understanding as follows.

First, we need a collection to work with; here we use an existing collection named shop_mart.

After that, we connect to the collection by using the following statement.

localhost: specified port number- t specified db name. specified collection name-c

Explanation:

By using the above syntax we can connect to the collection. Here we need to specify the localhost, port number, db name, and collection name as shown.

Suppose we need to add laptops to the collection along with their quantity; in that case we can use the following statement.

db.shop_mart.insert({"ABC":1, "item name": "laptop", "quantity":20})

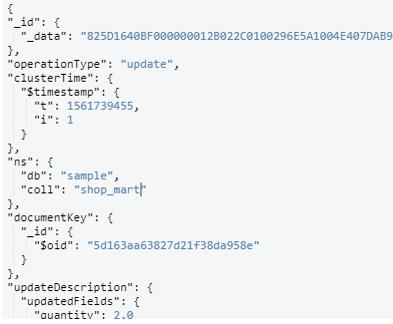

Now suppose the backend of shop_mart is updated with the quantity of laptops as follows.

db.shop_mart.updateOne({"ABC":1}, {$set:{"quantity":4}})

Explanation

After the above statement runs, the Kafka topic is updated. The final output of the above statement is illustrated in the following screenshot.

In this way, we can implement the Kafka connector in MongoDB and use the insert and upsert modes as required.
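The two shell statements above can be mirrored in a small in-memory sketch that shows what ends up in the collection and what would be published to the topic. The record shape written to `topic` is a simplified, hypothetical stand-in and not the connector's real event format.

```python
def insert(collection, topic, document):
    """Store a document and publish a simplified 'insert' record."""
    collection.append(document)
    topic.append({"operation": "insert", "document": dict(document)})


def update_one(collection, topic, query, changes):
    """Update the first matching document and publish an 'update' record."""
    for document in collection:
        if all(document.get(k) == v for k, v in query.items()):
            document.update(changes)
            topic.append({"operation": "update", "document": dict(document)})
            return True
    return False


shop_mart, topic = [], []
insert(shop_mart, topic, {"ABC": 1, "item name": "laptop", "quantity": 20})
update_one(shop_mart, topic, {"ABC": 1}, {"quantity": 4})

print(shop_mart)
print([record["operation"] for record in topic])
```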

Conclusion

We hope this article has helped you learn more about the MongoDB Kafka connector. We covered the basic syntax of the Kafka connector, looked at how the Source and Sink connectors work, and walked through examples that show how and when to use the MongoDB Kafka connector.
