Introduction

Looking to sharpen your data skills? Feature engineering tools turn raw data into informative features that help machine learning models perform better. Let’s explore the top five together: Pandas, Scikit-learn, Featuretools, NumPy, and TensorFlow Transform. Pandas, for example, is a great tool for manipulating data, while Scikit-learn offers preprocessing and feature selection utilities alongside its models. Each tool has its own strengths, and together they make it easier for beginners and experienced practitioners alike to create good features and deliver better data products.

What is Feature Engineering?

Feature engineering is the process of making data more useful to a model by selecting, transforming, or creating features. It helps improve the accuracy and performance of machine learning models. Instead of feeding raw data to a model as is, feature engineering keeps the most informative parts of the data and derives new features from them. The goal is to give the model inputs it can learn from effectively, so that it solves problems and makes predictions more reliably.

The concept applies across many domains, including mechanical engineering. There, the same principles can be used to prepare data for analysis and decision-making, so that models become better at problem-solving and prediction in engineering scenarios.

Types of Feature Engineering

Here are some simple types of feature engineering:

- Imputation: Filling in missing values with estimates based on the rest of the data, such as a column’s mean or median or the values in nearby rows. It’s like filling in a missing piece of a puzzle.

- Encoding: Turning categories (like types of fruits) into numbers so that the computer can understand them, like translating different languages into one common language.

- Scaling: Adjusting numeric features to a similar scale, making it easier for the computer to compare them. It’s like converting temperatures from Fahrenheit to Celsius.

- Normalization: Rescaling numbers to fit within a standard range, such as 0 to 1, like putting different currencies on the same scale.

- Aggregation: Summarizing and grouping data to find patterns. It’s like summarizing a long story into a few key points for better understanding.

- Polynomial Features: Creating new features by raising existing ones to powers or multiplying them together. It’s like mixing ingredients to create a new recipe.

- One-Hot Encoding: A specific form of encoding that turns each category into its own binary (0 or 1) column. It’s like sorting objects into different labeled boxes.

These techniques help models understand data better, which makes predictions more accurate and ultimately improves the overall performance of machine learning models.
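To make a few of these techniques concrete, here is a minimal sketch in plain Python, with no libraries, so the mechanics stay visible. The records, field names, and values are made up for illustration, and mean imputation is only one of several reasonable strategies.

```python
from statistics import mean

# Hypothetical toy records; field names and values are made up for illustration.
rows = [
    {"fruit": "apple", "weight_g": 150.0},
    {"fruit": "banana", "weight_g": None},   # missing value
    {"fruit": "apple", "weight_g": 170.0},
    {"fruit": "cherry", "weight_g": 10.0},
]

# Imputation: replace missing weights with the mean of the observed ones.
observed = [r["weight_g"] for r in rows if r["weight_g"] is not None]
fill_value = mean(observed)
weights = [r["weight_g"] if r["weight_g"] is not None else fill_value for r in rows]

# Normalization (min-max): rescale the weights into the range 0 to 1.
lo, hi = min(weights), max(weights)
normalized = [(w - lo) / (hi - lo) for w in weights]

# One-hot encoding: one binary column per category, in sorted order.
categories = sorted({r["fruit"] for r in rows})
one_hot = [[1 if r["fruit"] == c else 0 for c in categories] for r in rows]

# Aggregation: mean (imputed) weight per fruit type.
grouped = {}
for r, w in zip(rows, weights):
    grouped.setdefault(r["fruit"], []).append(w)
mean_weight_by_fruit = {fruit: mean(ws) for fruit, ws in grouped.items()}

# Polynomial feature: the square of each normalized weight.
squared = [n ** 2 for n in normalized]

print(weights)     # [150.0, 110.0, 170.0, 10.0]
print(normalized)  # [0.875, 0.625, 1.0, 0.0]
print(one_hot)     # [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
```

In practice these steps are usually done with libraries rather than by hand, but the underlying arithmetic is the same.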

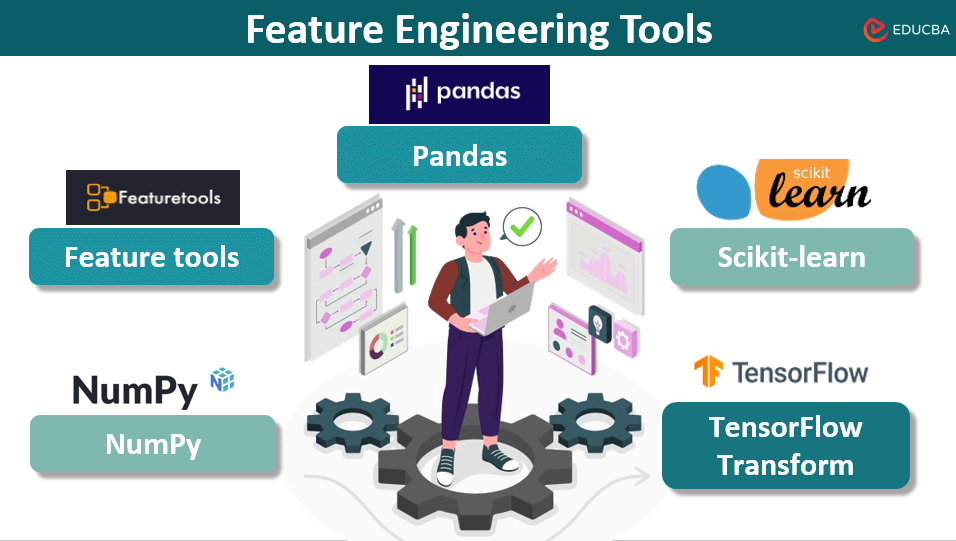

Top 5 Feature Engineering Tools

Many feature engineering tools are available, and each has strengths and functionality suited to different aspects of feature engineering. The top 5 tools are as follows:

- Pandas: A handy Python library for working with tabular data. It makes it easy to clean, organize, and analyze datasets and to perform a wide range of operations on them.

- Scikit-learn: Another Python library, widely used for machine learning. Beyond building models, it offers tools to prepare data, such as selecting and transforming features. It is easy to use, especially for beginners.

- Featuretools: A specialized library for automatically creating new features from different kinds of data. It saves time by working out the relationships between different pieces of data and using them to generate features.

- NumPy: The basic Python library for numerical computing. It is fast and efficient when working with arrays of numbers, which makes it useful for tasks involving numerical data.

- TensorFlow Transform: Part of the TensorFlow family, this tool helps get data ready for machine learning models. It is especially useful if you already use TensorFlow, because it helps transform data at a larger scale.
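As a rough illustration of how the first two tools can work together, the sketch below uses Pandas to build an aggregated feature and Scikit-learn to impute, scale, and one-hot encode columns. The `orders` table, its column names, and its values are hypothetical, and this is one possible arrangement rather than a recommended pipeline.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical toy data: column names and values are made up for illustration.
orders = pd.DataFrame({
    "customer": ["a", "a", "b", "c"],
    "fruit": ["apple", "banana", "apple", "cherry"],
    "price": [1.0, np.nan, 3.0, 5.0],   # one missing price
    "quantity": [2, 1, 4, 3],
})

# Pandas: aggregate quantity per customer and attach it as a new feature.
orders["customer_total_qty"] = (
    orders.groupby("customer")["quantity"].transform("sum")
)

numeric_cols = ["price", "quantity", "customer_total_qty"]
categorical_cols = ["fruit"]

# Scikit-learn: impute then scale numeric columns; one-hot encode the category.
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="mean")),
    ("scale", StandardScaler()),
])
preprocess = ColumnTransformer(
    transformers=[
        ("num", numeric_steps, numeric_cols),
        ("cat", OneHotEncoder(handle_unknown="ignore"), categorical_cols),
    ],
    sparse_threshold=0,  # always return a dense NumPy array
)

features = preprocess.fit_transform(orders)
print(features.shape)  # (4, 6): 3 scaled numeric columns + 3 one-hot columns
```

Wrapping the steps in a `ColumnTransformer` means the same fitted transformations can later be applied to new data with `preprocess.transform(...)`, which helps keep training and prediction inputs consistent.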

Importance of Feature Engineering

Feature engineering is crucial in the field of machine learning for several reasons:

- Better Model Performance: Feature engineering helps models understand data better. It makes models better at predicting, solving problems, or making decisions accurately.

- Reduced Overfitting: Models can overfit, meaning they memorize quirks of the specific data they were trained on instead of learning patterns that generalize. Feature engineering can reduce this by refining the data so that models learn the important patterns without getting stuck on minor details.

- Improved Accuracy: Creating better features makes models more accurate. It is like a chef adding the perfect spices to a dish. These new features improve the precision of the model’s predictions or decisions.

- Enhanced Interpretability: Feature engineering helps people understand how models work. Creating understandable features is like telling a story explaining why the model makes certain predictions or decisions.

- Faster Computation: Well-engineered features can make models faster. By keeping only the most informative features, models don’t waste time on unnecessary data, which makes training and prediction quicker and more efficient.

Final Thoughts

Feature engineering is key to getting the most out of machine learning models. Tools like Pandas, Scikit-learn, Featuretools, NumPy, and TensorFlow Transform help data enthusiasts craft effective features for better model performance. From imputation to polynomial features, each technique contributes to better model understanding, reduced overfitting, higher accuracy, improved interpretability, and faster computation. Embracing these tools and techniques helps unlock the potential of your data, supports machine learning success, and drives innovation in a data-driven world.
