Updated March 18, 2023

What is a Boosting Algorithm?

Boosting algorithms are a family of machine learning algorithms that improve the predictions of an existing model by correcting its errors. They convert several weak learners into one strong learner by combining the weak learners' predictions through weighted averages or majority votes. As base learners they use methods such as decision stumps and margin-maximizing classification algorithms. Three popular types of boosting algorithm are AdaBoost (Adaptive Boosting), Gradient Boosting, and XGBoost. These algorithms are trained iteratively, with each round fine-tuning the predictions made in the previous rounds.

Example:

Let’s understand this concept with an example. Suppose you want to decide whether an email is spam or not. You could recognize it using the following rules:

- If an email contains lots of sources, it is spam.

- If an email contains only a single image file, it is spam.

- If an email contains a message such as “You Won a lottery of $xxxxx”, it is spam.

- If an email comes from a known source, it is not spam.

- If an email comes from an official domain such as educba.com, it is not spam.

On their own, the rules above are not strong enough to reliably classify an email as spam or not spam; hence, these rules are called weak learners.

To convert weak learners into a strong learner, combine the predictions of the weak learners using one of the following methods:

- Using the average or weighted average of the predictions.

- Choosing the prediction that receives the most votes (majority voting).

Suppose that, for a given email, the five rules above give 3 votes for spam and 2 votes for not spam. Since spam has more votes, we classify the email as spam.
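Both combination methods can be sketched in Python. The rule functions below are hypothetical, simplified versions of the five spam rules (for example, treating "lots of sources" as more than five is an assumption); each rule returns a vote, or `None` when its condition does not apply. The weights passed to the weighted vote are also made up for illustration; in a real boosting algorithm they would be learned from data.

```python
from collections import Counter

SPAM, NOT_SPAM = "spam", "not spam"


# Hypothetical weak rules mirroring the list above. Each returns a vote,
# or None when its condition does not apply to the email.
def many_sources(email):
    return SPAM if email["num_sources"] > 5 else None  # cutoff of 5 is an assumption


def single_image(email):
    return SPAM if email["num_images"] == 1 else None


def lottery_message(email):
    return SPAM if "You Won a lottery" in email["body"] else None


def known_source(email):
    return NOT_SPAM if email["sender_known"] else None


def official_domain(email):
    return NOT_SPAM if email["domain"] == "educba.com" else None


RULES = [many_sources, single_image, lottery_message, known_source, official_domain]


def majority_vote(email, rules=RULES):
    """Return the label with the most votes (None if no rule fires)."""
    votes = Counter(v for v in (rule(email) for rule in rules) if v is not None)
    return votes.most_common(1)[0][0] if votes else None


def weighted_vote(email, weights, rules=RULES):
    """Add each firing rule's weight to its label; the higher total wins."""
    scores = {SPAM: 0.0, NOT_SPAM: 0.0}
    for rule, weight in zip(rules, weights):
        vote = rule(email)
        if vote is not None:
            scores[vote] += weight
    return max(scores, key=scores.get)


# An email that triggers all five rules: 3 votes for spam, 2 for not spam.
email = {
    "num_sources": 12,
    "num_images": 1,
    "body": "You Won a lottery of $xxxxx",
    "sender_known": True,
    "domain": "educba.com",
}
print(majority_vote(email))                             # spam
print(weighted_vote(email, [0.1, 0.1, 0.2, 0.4, 0.5]))  # not spam
```

With equal weights, the weighted vote gives the same answer as the majority vote; unequal weights let a few reliable rules outweigh a larger number of weak ones, which is the idea boosting builds on.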

How Do Boosting Algorithms Work?

A boosting algorithm combines weak learners to create one strong prediction rule. To identify the weak rules, it applies a base learning (machine learning) algorithm repeatedly, each time with a different distribution of weights over the training data. Each application produces a new weak prediction rule. After several iterations, the algorithm combines all the weak rules into a single strong prediction rule.

To choose the right distribution, boosting follows these steps:

Step 1: The base learning algorithm takes all the observations and assigns equal weight to each of them.

Step 2: If the first base learner makes any prediction errors, we pay more attention to the observations that were predicted incorrectly by giving them higher weights, and then apply the next base learner.

Step 3: Repeat Step 2 until the limit on the number of base learners is reached or a high accuracy is achieved.

Step 4: Finally, the algorithm combines all the weak learners to create one strong prediction rule.
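Steps 1 and 2 can be sketched in a few lines of Python. This is a simplified illustration rather than any library's API: the function names and the fixed `boost` factor of 2.0 are assumptions made for this example (AdaBoost, for instance, computes the factor from each weak learner's weighted error instead).

```python
def initial_weights(n):
    """Step 1: every observation starts with the same weight, 1/n."""
    return [1.0 / n] * n


def reweight(weights, y_true, y_pred, boost=2.0):
    """Step 2: pay more attention to the observations the last learner got wrong.

    Misclassified observations have their weight multiplied by `boost`
    (a hypothetical fixed factor for illustration); all weights are then
    renormalized so they sum to 1 and form a distribution again.
    """
    updated = [
        w * (boost if t != p else 1.0)
        for w, t, p in zip(weights, y_true, y_pred)
    ]
    total = sum(updated)
    return [w / total for w in updated]


# Four observations; a weak learner misclassifies the second one.
y_true = [1, 1, -1, -1]
y_pred = [1, -1, -1, -1]
weights = initial_weights(len(y_true))  # [0.25, 0.25, 0.25, 0.25]
weights = reweight(weights, y_true, y_pred)
print(weights)  # [0.2, 0.4, 0.2, 0.2]
```

The next base learner is then trained on this new distribution, so the misclassified observation counts twice as much as each of the others.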

Types of Boosting Algorithms

Boosting algorithms use different base learners (sometimes called engines), such as decision stumps and margin-maximizing classification algorithms. Three popular types of boosting algorithms are as follows:

- AdaBoost (Adaptive Boosting) algorithm

- Gradient Boosting algorithm

- XGBoost (Extreme Gradient Boosting) algorithm

AdaBoost (Adaptive Boosting) Algorithm

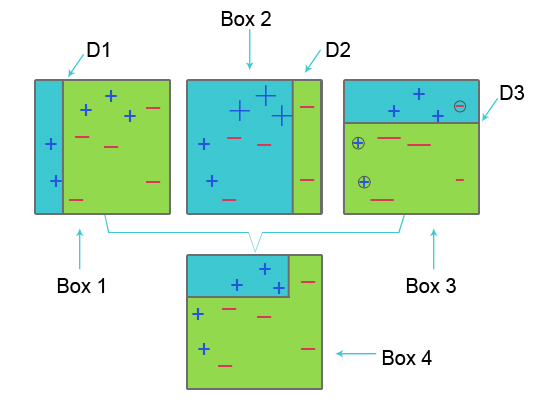

To understand AdaBoost, please refer to the below image:

Box 1: In Box 1, we assign equal weights to all data points and apply decision stump D1 to classify the plus (+) and minus (-) signs. D1 draws a vertical line on the left-hand side of Box 1. This line incorrectly predicts three plus signs (+) as minus (-), so we assign higher weights to these plus signs and apply another decision stump.

Box 2: In Box 2, the three incorrectly predicted plus signs (+) are drawn larger than the other points because of their higher weights. The second decision stump, D2, on the right-hand side of the box, now classifies these plus signs (+) correctly. However, it misclassifies three minus signs (-), so we assign higher weights to those minus signs and apply another decision stump.

Box 3: In Box 3, the three misclassified minus signs (-) have higher weights. Decision stump D3 is applied to correct these misclassifications; this time, it draws a horizontal line to separate the plus (+) and minus (-) signs.

Box 4: In Box 4, decision stumps D1, D2, and D3 are combined to create a new, strong prediction rule.

Adaptive Boosting works as described above: it combines a group of weak learners, each weighted according to how well it performs, to create a strong learner. In the first iteration, it gives equal weight to every data point and starts predicting. Whenever a prediction is incorrect, it gives a higher weight to that observation. AdaBoost repeats this procedure in each subsequent iteration until the desired accuracy is achieved or the maximum number of iterations is reached. Finally, it combines all the weak learners into one strong prediction rule.
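The AdaBoost procedure can be sketched from scratch in Python. This sketch assumes binary labels encoded as +1/-1 and uses the textbook discrete AdaBoost update, in which a stump with weighted error err gets the vote weight alpha = ½·ln((1 − err)/err). The helper names (`fit_stump`, `adaboost`, `predict`, `accuracy`) and the six-point, one-feature toy dataset are hypothetical; with a single feature, every stump is a vertical threshold line like D1 and D2 in the image.

```python
import math


def fit_stump(X, y, w):
    """Return (weighted_error, feature, threshold, polarity) of the best stump.

    A stump predicts `polarity` when x[feature] > threshold, else -polarity.
    """
    best = None
    for j in range(len(X[0])):
        values = sorted({x[j] for x in X})
        # Candidate thresholds: one below every value (a constant stump)
        # plus the midpoints between consecutive distinct values.
        thresholds = [values[0] - 1] + [(a + b) / 2 for a, b in zip(values, values[1:])]
        for t in thresholds:
            for polarity in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if (polarity if xi[j] > t else -polarity) != yi)
                if best is None or err < best[0]:
                    best = (err, j, t, polarity)
    return best


def stump_predict(stump, x):
    _, j, t, polarity = stump
    return polarity if x[j] > t else -polarity


def adaboost(X, y, n_rounds=10):
    """Train up to n_rounds stumps; return a list of (alpha, stump) pairs."""
    n = len(X)
    w = [1.0 / n] * n                  # equal weights in the first round
    ensemble = []
    for _ in range(n_rounds):
        stump = fit_stump(X, y, w)
        err = max(stump[0], 1e-10)     # guard against log(0) for a perfect stump
        if err >= 0.5:                 # no better than chance: stop
            break
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, stump))
        # Misclassified points (y * h = -1) are scaled up by e^alpha,
        # correct ones scaled down by e^-alpha; then renormalize.
        w = [wi * math.exp(-alpha * yi * stump_predict(stump, xi))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return ensemble


def predict(ensemble, x):
    """Weighted vote of all stumps: the sign of sum(alpha * h(x))."""
    score = sum(alpha * stump_predict(stump, x) for alpha, stump in ensemble)
    return 1 if score >= 0 else -1


def accuracy(ensemble, X, y):
    return sum(predict(ensemble, xi) == yi for xi, yi in zip(X, y)) / len(y)


# Toy data: the positives sit in the middle, so no single threshold
# line can separate them from the negatives.
X = [[1], [2], [3], [4], [5], [6]]
y = [-1, -1, 1, 1, -1, -1]
print(accuracy(adaboost(X, y, n_rounds=1), X, y))  # 4 of 6 correct
print(accuracy(adaboost(X, y, n_rounds=3), X, y))  # all 6 correct
```

As in Boxes 1 to 4, no single stump classifies every point correctly, but after three rounds of reweighting, the weighted combination of three stumps does.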

Gradient Boosting Algorithm

Gradient boosting is a machine learning technique that defines a loss function and minimizes it. It is used to build prediction models for classification and regression problems. It involves the following three components:

1. Loss Function

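The choice of loss function depends on the type of problem, for example squared error for regression or logarithmic loss for classification. An advantage of gradient boosting is that a new boosting algorithm does not have to be derived for each loss function; any differentiable loss can be used.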
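As a worked example, take the squared-error loss used for regression. Writing F(x) for the model's current prediction (notation assumed here), the negative gradient of the loss with respect to that prediction is simply the residual, so following the gradient amounts to fitting the next tree to the residuals:

```latex
L\big(y, F(x)\big) = \tfrac{1}{2}\big(y - F(x)\big)^{2}
\quad\Longrightarrow\quad
-\frac{\partial L\big(y, F(x)\big)}{\partial F(x)} = y - F(x)
```

Other differentiable losses give other "pseudo-residuals", but the rest of the boosting procedure stays the same.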

2. Weak Learner

In gradient boosting, decision trees are used as weak learners. Specifically, regression trees are used because they output real values, and these outputs can be added together so that each new tree corrects the predictions of the earlier ones. As in AdaBoost, small trees with a single split (decision stumps) can be used; larger trees, typically with 4 to 8 levels, are also common.
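A regression stump, the smallest such tree, can be sketched in Python. This is an illustrative least-squares implementation for a single numeric feature, not the code of any particular library; `fit_regression_stump` and `predict_stump` are hypothetical names. Each side of the split predicts a real number (the mean target on that side), which is what allows the outputs of several trees to be added together.

```python
def fit_regression_stump(xs, targets):
    """Fit a one-split regression tree (decision stump) by least squares.

    Returns (threshold, left_value, right_value): points with x <= threshold
    get left_value, the rest get right_value; each value is the mean target
    on its side of the split.
    """
    pairs = sorted(zip(xs, targets))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between equal x values
        left = [t for _, t in pairs[:i]]
        right = [t for _, t in pairs[i:]]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        # Sum of squared errors if each side predicts its own mean.
        sse = (sum((t - left_mean) ** 2 for t in left)
               + sum((t - right_mean) ** 2 for t in right))
        if best is None or sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (sse, threshold, left_mean, right_mean)
    if best is None:  # all x values equal: fall back to a constant prediction
        mean = sum(targets) / len(targets)
        return (float("inf"), mean, mean)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


stump = fit_regression_stump([1, 2, 3, 4], [1.0, 1.0, 5.0, 5.0])
print(stump)                      # (2.5, 1.0, 5.0)
print(predict_stump(stump, 3.7))  # 5.0
```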

3. Additive Model

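Trees are added one at a time, and the existing trees in the model are not changed. A gradient descent procedure is used to minimize the loss when adding trees: each new tree is fit to the negative gradient of the loss with respect to the current predictions (for squared error, these are simply the residuals).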
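Putting the three components together, here is a minimal from-scratch sketch of gradient boosting for regression with squared-error loss. It is illustrative only: it handles a single numeric feature and uses decision stumps as the weak learners, and the function names, the toy data, and the learning rate of 0.1 (a common "shrinkage" factor that scales each tree's contribution, not something the text above prescribes) are assumptions for this example. Each round fits a stump h_m to the current residuals and updates the model additively as F_m(x) = F_{m-1}(x) + 0.1·h_m(x), leaving earlier stumps untouched.

```python
def fit_stump(xs, residuals):
    """Least-squares regression stump: (threshold, left_value, right_value).

    Assumes xs contains at least two distinct values.
    """
    pairs = sorted(zip(xs, residuals))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no split between equal x values
        left = [r for _, r in pairs[:i]]
        right = [r for _, r in pairs[i:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((r - lm) ** 2 for r in left) + sum((r - rm) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    return best[1:]


def stump_value(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_trees=50, learning_rate=0.1):
    """Squared-error gradient boosting; returns (base, learning_rate, stumps)."""
    base = sum(ys) / len(ys)  # F_0: the constant that minimizes squared error
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_trees):
        # Negative gradient of 1/2 * (y - F)^2 with respect to F: the residual y - F.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Additive step: earlier stumps are never modified; the new stump's
        # output, shrunk by the learning rate, is added to the running prediction.
        preds = [p + learning_rate * stump_value(stump, x) for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_value(s, x) for s in stumps)


def mse(model, xs, ys):
    return sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 3.2]
model = gradient_boost(xs, ys)
print(mse((model[0], model[1], []), xs, ys))  # error of the constant F_0 alone
print(mse(model, xs, ys))                     # error after 50 additive stumps
```

A smaller learning rate makes each tree correct only part of the remaining error, which usually calls for more trees but tends to generalize better.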

XGBoost

XGBoost is short for eXtreme Gradient Boosting. It is an optimized implementation of the gradient boosting algorithm, designed for high computational speed, scalability, and better performance.

XGBoost has the following features:

- Parallel Processing: XGBoost parallelizes tree construction across the available CPU cores during training.

- Cross-Validation: XGBoost lets users run cross-validation at each iteration of the boosting process, which makes it easy to find the optimal number of boosting iterations in a single run.

- Cache Optimization: XGBoost organizes its data access to make efficient use of the CPU cache, which speeds up execution.

- Distributed Computing: XGBoost supports distributed computing for training very large models.

In this article, we have seen what boosting algorithms are, the various types of boosting algorithms in machine learning, and how they work.