Updated April 5, 2023

Introduction to PyTorch SGD

PyTorch provides implementations of many optimization algorithms. One of the most widely used is stochastic gradient descent (SGD), which minimizes an objective function iteratively. SGD can be viewed as a stochastic approximation of gradient descent optimization, since it replaces the true gradient (calculated from the entire data set) with an estimate of it (calculated from a randomly selected subset of the data). This reduces the computational burden, particularly in high-dimensional optimization problems, achieving faster iterations in exchange for a lower convergence rate.
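To make the idea of a gradient estimate concrete, here is a minimal sketch in plain Python. The toy data, the starting weight, and the helper name sample_gradient are illustrative assumptions and are not part of PyTorch.

```python
import random

# Toy data for the one-parameter model y = w * x (illustrative values).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
w = 0.0  # current weight

def sample_gradient(w, x, y):
    # Derivative of the squared error (w * x - y) ** 2 with respect to w.
    return 2.0 * x * (w * x - y)

# True gradient: average over the entire data set.
full_gradient = sum(sample_gradient(w, x, y) for x, y in zip(xs, ys)) / len(xs)

# Stochastic estimate: average over a randomly chosen subset of two samples.
random.seed(0)
batch = random.sample(range(len(xs)), 2)
estimate = sum(sample_gradient(w, xs[i], ys[i]) for i in batch) / len(batch)

print("full gradient:", full_gradient)
print("mini-batch estimate:", estimate)
```

The estimate changes with the subset that is drawn, but averaged over all possible subsets it equals the full gradient, which is why the cheaper update still points in a useful direction on average.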

What is PyTorch SGD?

First, let’s discuss what it means to optimize a model. We want the model to reach the highest possible accuracy given resource constraints such as time, computing power, and memory. Optimization has a broad scope, and you can also change the architecture to improve the model. However, that relies on intuition developed through experience.

SGD stands for Stochastic Gradient Descent. It is an optimizer in the gradient descent family, a well-known optimization technique used in machine learning and deep learning. The word “stochastic” refers to a system or process that is linked with random probability. In the SGD optimizer, a few samples are selected at random for each iteration instead of the whole dataset. We will use torch.optim, a package that implements various optimization algorithms. The most commonly used methods are already supported, and the interface is general enough that more sophisticated ones can easily be integrated in the future.
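The shared interface of torch.optim can be sketched as follows. The tiny linear model and the learning rates are assumptions chosen only for illustration; the optimizer classes themselves are part of torch.optim.

```python
import torch

# A tiny stand-in model (assumption for illustration only).
model = torch.nn.Linear(3, 1)

# Optimizers from torch.optim are constructed from the model parameters.
optimizers = [
    torch.optim.SGD(model.parameters(), lr=0.01),
    torch.optim.Adagrad(model.parameters(), lr=0.01),
    torch.optim.Adadelta(model.parameters()),
    torch.optim.RMSprop(model.parameters(), lr=0.01),
    torch.optim.Adam(model.parameters(), lr=0.001),
]

for opt in optimizers:
    # Every optimizer exposes the same zero_grad() / step() interface.
    opt.zero_grad()
    loss = model(torch.ones(1, 3)).sum()
    loss.backward()
    opt.step()
    print(type(opt).__name__)
```

Because the calls are identical, switching from SGD to another algorithm usually means changing only the line that constructs the optimizer.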

Plain Stochastic Gradient Descent is very basic and is seldom used on its own now. One problem is the single global learning rate that it applies to every parameter. It therefore does not work well when the parameters are on different scales, since a small learning rate makes training slow, while a large learning rate may cause oscillations. Stochastic Gradient Descent also generally has a hard time escaping saddle points. Adagrad, Adadelta, RMSprop, and Adam generally handle saddle points better. SGD with momentum adds some speed to the optimization and also helps escape local minima better.
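The effect of the learning rate can be seen on a one-dimensional toy problem. This is a sketch in plain Python, not PyTorch code; the function f(w) = w ** 2, the helper name run_gradient_descent, and the three learning rates are assumptions picked for illustration.

```python
def run_gradient_descent(lr, steps=20, w=1.0):
    # Minimize f(w) = w ** 2, whose gradient is 2 * w.
    for _ in range(steps):
        w = w - lr * 2.0 * w
    return w

w_small = run_gradient_descent(lr=0.01)  # slow: w shrinks by 2% per step
w_good = run_gradient_descent(lr=0.4)    # fast: w shrinks by 80% per step
w_large = run_gradient_descent(lr=1.1)   # oscillates with growing magnitude

print(w_small, w_good, w_large)
```

After 20 steps the small rate has barely moved toward the minimum at 0, the moderate rate has essentially reached it, and the large rate has overshot further on every step.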

Using PyTorch SGD

The word ‘stochastic’ refers to a system or process that is linked with random probability. Hence, in Stochastic Gradient Descent, a few samples are selected randomly instead of the whole data set for each iteration. In Gradient Descent, the term “batch” denotes the total number of samples from a dataset that is used to calculate the gradient for each iteration. In typical Gradient Descent optimization, such as Batch Gradient Descent, the batch is taken to be the whole dataset. Although using the whole dataset is useful for reaching the minima in a less noisy and less random manner, a problem arises when our datasets get very large, because every single iteration then has to process all of the samples.
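One common way to draw random batches in PyTorch is torch.utils.data.DataLoader with shuffle=True. The sketch below assumes a made-up dataset of 100 samples and a batch size of 32; both numbers are illustrative.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical dataset: 100 samples with 5 features and one target each.
features = torch.randn(100, 5)
targets = torch.randn(100, 1)
dataset = TensorDataset(features, targets)

# shuffle=True draws the samples in a new random order every epoch.
loader = DataLoader(dataset, batch_size=32, shuffle=True)

batch_sizes = []
for x_batch, y_batch in loader:
    # Each iteration would compute the gradient from this batch only.
    batch_sizes.append(x_batch.shape[0])

print(batch_sizes)
```

With 100 samples and a batch size of 32, one pass over the data yields three batches of 32 samples and a final batch of 4.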

Implementing PyTorch SGD

Now let’s see how we can implement the SGD in PyTorch as follows.

Syntax

torch.optim.SGD(params, lr=<learning rate>, momentum=0, dampening=0, weight_decay=0, nesterov=False)

Explanation

Using the above syntax, we can configure the SGD optimization algorithm as per our requirement. The parameters are as follows.

- params: An iterable of the parameters to optimize, or of dicts that define distinct parameter groups.

- lr: The learning rate of the optimizer, given as a float.

- momentum: An optional float that sets the momentum factor. The default value is 0.

- dampening: An optional float that sets the dampening applied to the momentum. The default value is 0.

- weight_decay: An optional float that sets the weight decay (L2 penalty). The default value is 0.

- nesterov: An optional Boolean that enables Nesterov momentum. The default value is False.
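As a hedged illustration of these options, the sketch below builds an optimizer with the keyword names that torch.optim.SGD accepts (lr, momentum, dampening, weight_decay, nesterov) and with two parameter groups. The small model and all numeric values are assumptions chosen for the example.

```python
import torch

# Small stand-in model (assumption for illustration).
model = torch.nn.Sequential(
    torch.nn.Linear(4, 8),
    torch.nn.ReLU(),
    torch.nn.Linear(8, 2),
)

# Two parameter groups: the second overrides the default learning rate.
optimizer = torch.optim.SGD(
    [
        {"params": model[0].parameters()},
        {"params": model[2].parameters(), "lr": 1e-3},
    ],
    lr=1e-2,
    momentum=0.9,
    dampening=0,
    weight_decay=1e-4,
    nesterov=True,  # requires momentum > 0 and dampening == 0
)

for group in optimizer.param_groups:
    print(group["lr"], group["momentum"], group["weight_decay"], group["nesterov"])
```

Options that a group does not set itself are filled in from the keyword arguments, so here only the learning rate differs between the two groups.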

Typically, we see that while training a model the loss decreases quickly at the beginning, but gradually you reach a point where it seems you are not making any progress at all.

The optimizer’s old enemy, pathological curvature, could be the problem. Pathological curvature means, simply put, regions of the loss surface that are not scaled properly. These landscapes are often described as valleys, trenches, canals, and ravines. The iterates either jump between the walls of the valley or approach the optimum in small, timid steps. Progress along certain directions grinds to a halt. In these unfortunate regions, gradient descent fumbles. Here momentum comes to the rescue. The right value of momentum is found by cross-validation, and momentum can also help avoid getting stuck in a local minimum.
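The benefit of momentum on a badly scaled surface can be sketched in plain Python. The update used here (buffer = momentum * buffer + gradient, then parameter -= lr * buffer) follows the form that torch.optim.SGD documents for zero dampening without Nesterov momentum; the quadratic function, the helper name minimize, and the hyperparameters are illustrative assumptions.

```python
def minimize(lr, momentum, steps=200):
    # Ill-scaled quadratic f(x, y) = 0.5 * (x**2 + 100 * y**2).
    x, y = 1.0, 1.0
    buf_x, buf_y = 0.0, 0.0  # momentum buffers
    for _ in range(steps):
        grad_x, grad_y = x, 100.0 * y
        # Accumulate a running "velocity" from past gradients.
        buf_x = momentum * buf_x + grad_x
        buf_y = momentum * buf_y + grad_y
        x -= lr * buf_x
        y -= lr * buf_y
    return 0.5 * (x ** 2 + 100.0 * y ** 2)

loss_plain = minimize(lr=0.018, momentum=0.0)
loss_momentum = minimize(lr=0.018, momentum=0.9)

print("plain SGD loss:   ", loss_plain)
print("with momentum 0.9:", loss_momentum)
```

The steep y direction limits how large the learning rate can be, so plain gradient descent crawls along the shallow x direction. With momentum, the accumulated velocity speeds up progress along x and the final loss is much lower for the same number of steps.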

PyTorch SGD Examples

Now let’s see different examples of SGD in PyTorch for better understanding as follows.

First, we need to import the library that we require as follows.

import torch

After that, we need to define the different parameters that we want as follows.

btch, dm_i, dm_h, dm_o = 74, 900, 90, 12

Here btch is the batch size, dm_i is the input dimension, dm_h is the hidden dimension, and dm_o is the output dimension.

Now create random input and target tensors by using the following code.

input_a = torch.randn(btch, dm_i)

result_b = torch.randn(btch, dm_o)

Now we need to define the model with loss function as follows.

SGD_model = torch.nn.Sequential(
    torch.nn.Linear(dm_i, dm_h),
    torch.nn.ReLU(),
    torch.nn.Linear(dm_h, dm_o),
)

l_fun = torch.nn.MSELoss(reduction='sum')

In the next line, we need to define the learning rate value as per our requirements.

r_l = 0.2In the next step, we need to initialize the optimizer we want with a forwarding pass.

optm = torch.optim.SGD(SGD_model.parameters(), lr=r_l, momentum=0.9)

for values in range(600):

p_y = SGD_model(input_a)

loss = l_fun(p_y, result_b)

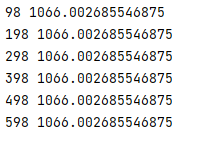

if values % 100 == 98:

print(values, loss.item())We illustrated the final output of the above code by using the following screenshot as follows.
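For convenience, the steps of this example can be collected into one script. This is a sketch under stated assumptions: the fixed seed, the learning rate of 1e-4, the shorter run of 200 iterations, and the losses list are additions made here so that the result is easy to check.

```python
import torch

torch.manual_seed(0)  # fixed seed so repeated runs match (assumption)

btch, dm_i, dm_h, dm_o = 74, 900, 90, 12
input_a = torch.randn(btch, dm_i)
result_b = torch.randn(btch, dm_o)

SGD_model = torch.nn.Sequential(
    torch.nn.Linear(dm_i, dm_h),
    torch.nn.ReLU(),
    torch.nn.Linear(dm_h, dm_o),
)
l_fun = torch.nn.MSELoss(reduction='sum')

# Assumed learning rate: small because the loss is summed, not averaged.
optm = torch.optim.SGD(SGD_model.parameters(), lr=1e-4, momentum=0.9)

losses = []
for values in range(200):
    p_y = SGD_model(input_a)      # forward pass
    loss = l_fun(p_y, result_b)   # scalar loss
    losses.append(loss.item())
    optm.zero_grad()              # clear gradients from the last step
    loss.backward()               # backpropagate
    optm.step()                   # SGD update with momentum

print("first loss:", losses[0])
print("last loss: ", losses[-1])
```

Comparing the first and last recorded loss is a quick check that the optimizer is actually updating the model.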

Conclusion

We hope this article has helped you learn more about PyTorch SGD. We covered the essential idea of PyTorch SGD, along with its syntax and an example. We also saw how and when to use SGD.
