What is Hyperparameter Tuning?

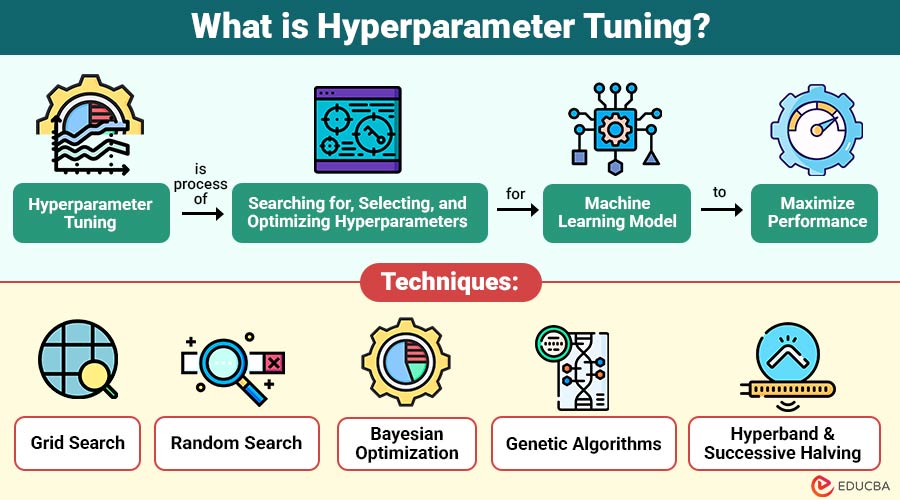

Hyperparameter tuning is the process of searching for, selecting, and optimizing hyperparameters for a machine learning model to maximize performance. Hyperparameters are external configuration values, such as learning rate or tree depth, that guide the training process; they are set before training rather than learned from the data.

Key Takeaways:

- Hyperparameter tuning optimizes model hyperparameters to improve predictive accuracy and overall machine learning performance.

- Proper tuning balances complexity, reducing overfitting and underfitting, and improving generalization to unseen datasets.

- Automated and manual tuning techniques, including grid search and Bayesian optimization, accelerate model improvement.

- Effective hyperparameter tuning stabilizes model training, preventing divergence and ensuring consistent, reliable predictions.

Why is Hyperparameter Tuning Important?

Here are the key reasons why hyperparameter tuning is important for building effective machine learning models:

1. Maximizes Model Accuracy

Choosing the right hyperparameters allows the model to learn patterns and achieve optimal predictive performance efficiently.

2. Improves Generalization

Proper tuning balances model complexity to prevent overfitting or underfitting and ensures reliable performance on unseen data.

3. Speeds Up Training

Learning rate, batch size, and optimizer choices influence convergence speed, making training faster and more efficient.

4. Reduces Model Instability

Incorrect hyperparameters may cause divergence or inconsistent results; tuning stabilizes training and improves prediction reliability.

5. Enables Efficient Resource Use

Effective tuning optimizes computational resources, minimizing wasted time and cost on poorly performing model configurations.

Hyperparameter Tuning Techniques

Hyperparameter tuning can be manual or automated. Below are the most widely used techniques:

1. Grid Search

Systematically evaluates every combination of predefined hyperparameter values and selects the best-performing configuration within that grid.

Pros:

- Comprehensive exploration of all possible hyperparameter combinations.

- Guarantees the best combination among the values in the grid, though not necessarily the global optimum.

Cons:

- Extremely computationally expensive for large parameter spaces.

- Slow and impractical for high-dimensional hyperparameter searches.
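As a minimal sketch of the idea, the loop below tries every combination in a small grid. The `validation_score` function is a hypothetical stand-in for training a model and scoring it on validation data, and the hyperparameter names and values are assumptions chosen for illustration.

```python
from itertools import product

def validation_score(learning_rate, max_depth):
    """Toy stand-in for 'train a model, return its validation score' (hypothetical)."""
    return -(learning_rate - 0.1) ** 2 - 0.01 * (max_depth - 4) ** 2

# Predefined candidate values for each hyperparameter (illustrative only).
grid = {
    "learning_rate": [0.01, 0.1, 0.5],
    "max_depth": [2, 4, 8],
}

def grid_search(grid, score_fn):
    names = list(grid)
    best_params, best_score = None, float("-inf")
    # Evaluate every combination: 3 x 3 = 9 trials for the grid above.
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

best_params, best_score = grid_search(grid, validation_score)
print(best_params)  # {'learning_rate': 0.1, 'max_depth': 4}
```

The number of trials is the product of the list lengths, which is why adding one more hyperparameter or a few more values per hyperparameter quickly becomes expensive.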

2. Random Search

Samples hyperparameter combinations at random from specified ranges or distributions and evaluates a fixed number of them, which is usually cheaper than an exhaustive grid search.

Pros:

- Faster than grid search in high-dimensional spaces.

- Often surprisingly effective for complex hyperparameter landscapes.

Cons:

- No guarantee of finding globally optimal parameters.

- Can miss critical hyperparameter interactions due to randomness.
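A rough sketch of random search follows. The toy `validation_score` function, the sampling ranges, and the choice to sample the learning rate on a log scale are all assumptions for illustration, not part of any particular library.

```python
import math
import random

def validation_score(learning_rate, max_depth):
    """Toy stand-in for a real train-and-validate step (hypothetical)."""
    return -(math.log10(learning_rate) + 1) ** 2 - 0.01 * (max_depth - 4) ** 2

def sample_params(rng):
    return {
        # Log-uniform sample in [1e-4, 1]: learning rates often vary over orders of magnitude.
        "learning_rate": 10 ** rng.uniform(-4, 0),
        "max_depth": rng.randint(2, 10),  # inclusive on both ends
    }

def random_search(score_fn, n_trials, seed=0):
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = sample_params(rng)
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

best_params, best_score = random_search(validation_score, n_trials=50)
print(best_params, best_score)
```

Unlike a grid, the trial budget (`n_trials`) is chosen directly and does not grow with the number of hyperparameters.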

3. Bayesian Optimization

Uses probabilistic surrogate models to predict and select promising hyperparameter combinations intelligently.

Pros:

- Efficient exploration reduces the number of evaluations needed.

- Smart selection focuses on the most promising hyperparameter regions.

Cons:

- Computational overhead can be significant for complex models.

- Implementation is more difficult compared to simpler methods.
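To make the surrogate-model idea concrete, here is a deliberately small sketch in one dimension. It assumes a Gaussian-process surrogate with an RBF kernel and a lower-confidence-bound rule for picking the next point; these are common choices but only one of several options, and the `objective` function, ranges, and constants are hypothetical. Tools such as Optuna or Hyperopt use their own, more robust algorithms.

```python
import math

def objective(x):
    """Toy validation loss over x = log10(learning rate); hypothetical, minimum at x = -2."""
    return (x + 2.0) ** 2

def rbf(a, b, length=1.0):
    return math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def gp_posterior(xs, ys, x_new, noise=1e-6):
    """Posterior mean and standard deviation of the surrogate at x_new."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    k = [rbf(a, x_new) for a in xs]
    mean_y = sum(ys) / len(ys)  # center the observations around their mean
    alpha = solve(K, [y - mean_y for y in ys])
    mean = mean_y + sum(ki * ai for ki, ai in zip(k, alpha))
    v = solve(K, k)
    var = max(rbf(x_new, x_new) - sum(ki * vi for ki, vi in zip(k, v)), 0.0)
    return mean, math.sqrt(var)

def bayes_opt(objective, low, high, n_init=3, n_iter=8, kappa=2.0):
    candidates = [low + (high - low) * i / 100 for i in range(101)]
    xs = [low + (high - low) * i / (n_init - 1) for i in range(n_init)]
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        def lower_confidence_bound(x):
            mean, std = gp_posterior(xs, ys, x)
            return mean - kappa * std  # low predicted loss or high uncertainty
        x_next = min((c for c in candidates if c not in xs), key=lower_confidence_bound)
        xs.append(x_next)
        ys.append(objective(x_next))
    best = min(range(len(xs)), key=ys.__getitem__)
    return xs[best], ys[best]

best_x, best_y = bayes_opt(objective, low=-5.0, high=0.0)
print(best_x, best_y)
```

The surrogate is refit after every evaluation, which is the overhead mentioned in the cons above: cheap here, but noticeable with many trials or many dimensions.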

4. Genetic Algorithms

Inspired by biological evolution, genetic algorithms evolve a population of hyperparameter sets through selection, crossover, and mutation.

Pros:

- Can effectively handle complex, nonlinear hyperparameter landscapes.

- Flexible; works well with discrete or continuous hyperparameters.

Cons:

- Convergence can be slow, requiring many iterations.

- Each generation requires training and evaluating a whole population of models, which is computationally expensive.
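The selection, crossover, and mutation steps can be sketched as follows. The `fitness` function is a toy stand-in for a validation score, and the population size, mutation rate, and value ranges are assumptions picked only to keep the example short.

```python
import random

def fitness(params):
    """Toy stand-in for a validation score (hypothetical); higher is better."""
    lr, layers = params
    return -(lr - 0.1) ** 2 - 0.01 * (layers - 3) ** 2

def random_individual(rng):
    # One continuous gene (learning rate) and one discrete gene (number of layers).
    return (rng.uniform(0.001, 1.0), rng.randint(1, 8))

def crossover(a, b, rng):
    # Take each gene from one of the two parents at random.
    return tuple(rng.choice(pair) for pair in zip(a, b))

def mutate(individual, rng, rate=0.3):
    lr, layers = individual
    if rng.random() < rate:
        lr = min(1.0, max(0.001, lr + rng.gauss(0, 0.05)))
    if rng.random() < rate:
        layers = min(8, max(1, layers + rng.choice([-1, 1])))
    return (lr, layers)

def evolve(pop_size=20, generations=15, seed=0):
    rng = random.Random(seed)
    population = [random_individual(rng) for _ in range(pop_size)]
    history = []  # best fitness seen at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: keep the top half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children  # parents survive, so the best never gets worse
    return max(population, key=fitness), history

best, history = evolve()
print(best, history[-1])
```

Each generation costs `pop_size` evaluations in a real setting, which is where the slow convergence noted above comes from.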

5. Hyperband & Successive Halving

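Successive halving gives many configurations a small training budget, then repeatedly discards the worst performers and gives the survivors more budget. Hyperband runs several such rounds with different trade-offs between the number of configurations and the budget per configuration.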

Pros:

- Very fast due to early stopping of poor configurations.

- Resource-efficient, reduces unnecessary computations.

Cons:

- Performance is sensitive to initial configuration ranges.

- May discard configurations that improve later in training.
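The sketch below shows plain successive halving only, not full Hyperband. The `loss_after` learning curve is a made-up model of how loss falls with more epochs, and the `floor` and `speed` fields are hypothetical attributes used to simulate different configurations.

```python
import math
import random

def loss_after(config, epochs):
    """Toy learning curve (hypothetical): loss decays toward a config-specific floor."""
    return config["floor"] + math.exp(-config["speed"] * epochs)

def successive_halving(configs, min_epochs=1, eta=2):
    survivors = list(configs)
    epochs = min_epochs
    while len(survivors) > 1:
        # Rank survivors by loss at the current budget and keep the best 1/eta of them.
        ranked = sorted(survivors, key=lambda c: loss_after(c, epochs))
        survivors = ranked[: max(1, len(ranked) // eta)]
        epochs *= eta  # the remaining configurations get a larger budget
    return survivors[0]

rng = random.Random(0)
configs = [
    {"id": i, "floor": rng.uniform(0.1, 0.5), "speed": rng.uniform(0.1, 1.0)}
    for i in range(16)
]
winner = successive_halving(configs)  # 16 -> 8 -> 4 -> 2 -> 1 configurations
print(winner["id"])
```

The last con above is easy to reproduce with this toy curve: a configuration with `floor=0.0, speed=0.1` loses the first round to one with `floor=0.3, speed=2.0`, even though it would reach a much lower loss after 100 epochs.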

Hyperparameter Tuning Strategies

To get the most out of your tuning process, adopt the following strategies:

1. Narrow the Search Range

Limit hyperparameter values to reasonable ranges based on prior knowledge, starting from sensible default settings.

2. Use Coarse-to-Fine Tuning

Begin with broad hyperparameter values, then iteratively refine around promising regions for more precise optimization.
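One way to picture coarse-to-fine tuning is a grid that shrinks around the best value found so far. The sketch below does this for a single learning rate on a log scale; `validation_loss` is a hypothetical objective and the grid sizes are arbitrary assumptions.

```python
import math

def validation_loss(lr):
    """Toy objective (hypothetical): best near lr = 10 ** -2.3."""
    return (math.log10(lr) + 2.3) ** 2

def log_grid(low_exp, high_exp, n):
    # n values evenly spaced in log10 space between 10**low_exp and 10**high_exp.
    step = (high_exp - low_exp) / (n - 1)
    return [10 ** (low_exp + i * step) for i in range(n)]

def coarse_to_fine(loss_fn, low_exp=-5.0, high_exp=0.0, n=6, rounds=3):
    best = None
    for _ in range(rounds):
        best = min(log_grid(low_exp, high_exp, n), key=loss_fn)
        # Zoom in: the next grid spans one step on either side of the current best.
        step = (high_exp - low_exp) / (n - 1)
        center = math.log10(best)
        low_exp, high_exp = center - step, center + step
    return best

best_lr = coarse_to_fine(validation_loss)
print(best_lr)
```

Three rounds of six values cost 18 evaluations, far fewer than a single fine grid over the whole original range would need for similar precision.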

3. Tune One Parameter at a Time

Adjust a single hyperparameter while keeping the others fixed; this is effective when parameter interactions are minimal.

4. Parallel and Distributed Tuning

Run multiple hyperparameter experiments concurrently across processors or machines to accelerate tuning.
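Because trials are independent of one another, they can be dispatched to a worker pool. The sketch below uses threads from the standard library purely for illustration; `evaluate` is a hypothetical stand-in for a training job, and real CPU-bound or multi-machine workloads would more likely use processes or a distributed tool such as Ray Tune.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

def evaluate(params):
    """Toy stand-in for one training run (hypothetical); returns (params, score)."""
    lr, batch_size = params
    score = -(lr - 0.1) ** 2 - ((batch_size - 64) / 1000) ** 2
    return params, score

# Every (learning rate, batch size) pair is an independent trial.
candidates = list(product([0.01, 0.1, 0.3], [32, 64, 128]))

def parallel_search(candidates, max_workers=4):
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs trials concurrently and returns results in input order.
        results = list(pool.map(evaluate, candidates))
    return max(results, key=lambda result: result[1])

best_params, best_score = parallel_search(candidates)
print(best_params)  # (0.1, 64)
```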

5. Use Learning Curves

Track training and validation performance over time to identify overfitting or underfitting and guide hyperparameter adjustments.
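A simple rule-of-thumb check on recorded loss curves might look like the sketch below. The `patience` and `target_loss` thresholds are hypothetical and would need to be chosen per problem; the loss values are invented for the example.

```python
def diagnose(train_loss, val_loss, patience=3, target_loss=0.5):
    """Classify a run from per-epoch losses; thresholds are illustrative assumptions."""
    best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__)
    epochs_since_best = len(val_loss) - 1 - best_epoch
    # Validation loss stopped improving while training loss kept falling.
    if epochs_since_best >= patience and train_loss[-1] < train_loss[best_epoch]:
        return "overfitting", best_epoch
    # Training loss never got low enough: the model has not fit the data.
    if train_loss[-1] > target_loss:
        return "underfitting", best_epoch
    return "ok", best_epoch

train = [1.0, 0.7, 0.5, 0.35, 0.25, 0.18, 0.12, 0.08]
val = [1.1, 0.8, 0.6, 0.55, 0.58, 0.62, 0.68, 0.75]
print(diagnose(train, val))  # ('overfitting', 3)
```

An "overfitting" result suggests stronger regularization or stopping near `best_epoch`; "underfitting" suggests more capacity, a different learning rate, or longer training.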

Hyperparameter Tuning Tools

Many tools simplify the tuning process:

| Tool | Purpose/Key Feature |
|---|---|
| Scikit-Learn (GridSearchCV, RandomizedSearchCV) | Systematic or random search of hyperparameters for ML models. |
| Keras/TensorFlow (Keras Tuner, TensorBoard HPARAMS) | Automated tuning and visualization for neural networks. |
| Ray Tune | Distributed, large-scale hyperparameter tuning with parallel execution. |
| Optuna | Fast, flexible optimization using Bayesian and advanced algorithms. |
| Hyperopt | Bayesian optimization with Tree-structured Parzen Estimator for complex searches. |
| Weights & Biases / MLflow | Experiment tracking, logging, and comparing hyperparameter tuning results. |

Challenges of Hyperparameter Tuning

Despite its advantages, tuning presents several challenges:

1. High Computational Cost

Tuning deep learning models or large datasets requires significant computational resources, making the process expensive and slow.

2. Large Search Spaces

Having too many hyperparameters creates too many possible combinations, making the tuning process very long and complicated.
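The growth is easy to quantify: the number of grid combinations is the product of the number of values per hyperparameter. The grid below and the 5-fold cross-validation setting are assumptions chosen for illustration.

```python
from math import prod

# Illustrative grid: a handful of values for each of five hyperparameters.
grid = {
    "learning_rate": [0.001, 0.01, 0.1, 0.3],
    "batch_size": [32, 64, 128],
    "num_layers": [1, 2, 3, 4],
    "dropout": [0.0, 0.2, 0.5],
    "optimizer": ["sgd", "adam"],
}

n_combinations = prod(len(values) for values in grid.values())
n_fits = n_combinations * 5  # each combination trained once per fold in 5-fold CV

print(n_combinations)  # 288
print(n_fits)          # 1440
```

At an assumed two minutes per fit, those 1,440 fits would take 48 hours on a single machine.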

3. Time-Consuming

Even efficient tuning strategies often require hours or days to thoroughly explore promising hyperparameter combinations.

4. Risk of Overfitting to Validation Data

Excessive hyperparameter tuning can bias models toward validation performance, reducing generalization on unseen test data.
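A common safeguard is to keep a test set that the tuning loop never sees. The sketch below splits sample indices three ways; the 60/20/20 proportions and the helper name `three_way_split` are assumptions for illustration.

```python
import random

def three_way_split(n_samples, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle sample indices and split them into train, validation, and test sets."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = round(n_samples * test_frac)
    n_val = round(n_samples * val_frac)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test

train_idx, val_idx, test_idx = three_way_split(100)
print(len(train_idx), len(val_idx), len(test_idx))  # 60 20 20
```

Hyperparameters are compared on the validation indices only; the test indices are used once, at the end, to estimate how the chosen configuration performs on unseen data.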

5. Parameter Interaction

Interdependent hyperparameters complicate tuning because changes in one parameter affect the optimal values of others.

Real-World Examples

Here are some real-world examples of how hyperparameter tuning is applied across industries:

1. Google

Uses large-scale Bayesian optimization to improve neural network training efficiency.

2. Amazon

Uses tuning to optimize demand forecasting and personalized product recommendations.

3. Self-Driving Cars

Optimize parameters in perception, prediction, and motion planning models.

Final Thoughts

Hyperparameter tuning is an important step in building high-performing machine learning systems. While it can be time-consuming and computationally heavy, the improvements in accuracy, stability, and generalization usually make it worthwhile. Whether you are working with classical ML algorithms or advanced deep learning models, effective tuning can turn mediocre performance into strong results. By using structured methods, automation tools, and best practices, data scientists can reach good configurations with far less trial and error.

Frequently Asked Questions (FAQs)

Q1. What are hyperparameters?

Answer: Hyperparameters are external configuration values that control how a model trains, such as learning rate, tree depth, or batch size.

Q2. Which is better: Grid Search or Random Search?

Answer: Random search is usually faster and more effective for large search spaces.

Q3. Does tuning cause overfitting?

Answer: Excessive tuning can overfit the validation set. Cross-validation reduces this risk, and a separate held-out test set gives an unbiased final estimate.

Q4. Is hyperparameter tuning necessary for every model?

Answer: Simple models may perform well with default values, but tuning usually improves performance for more complex models.
