Updated November 20, 2023

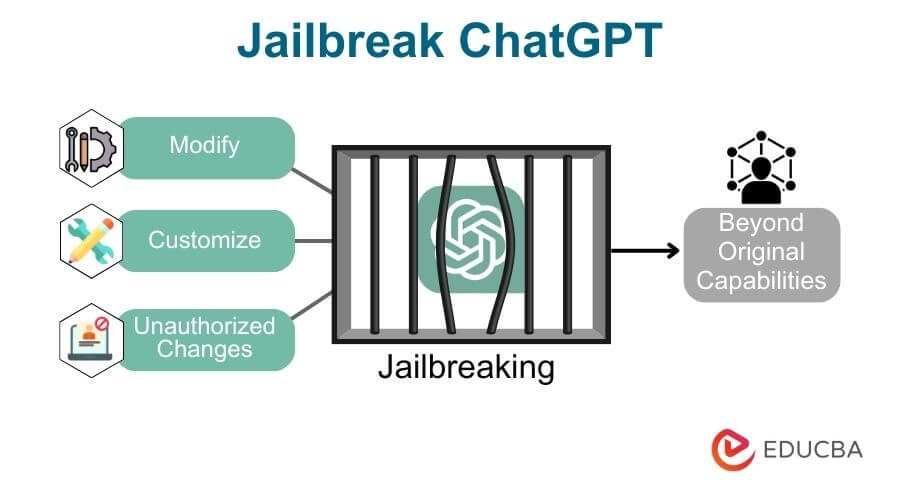

Introduction to Jailbreak ChatGPT

Jailbreaking ChatGPT involves modifying the AI model to expand its capabilities beyond its original design. This can enable customization and creativity, but it also raises ethical and practical concerns, so understanding the process and its implications is crucial for responsible and innovative AI development.

Doing this well requires an in-depth understanding of AI technology and ethics, along with a responsible approach that keeps the modified model useful and compliant with ethical standards.

Table of Contents

- Introduction to Jailbreak ChatGPT

- Understand Jailbreak in ChatGPT

- Enable Developer Mode

- Crafting Creative Prompts

- Explore AI Limitations

- Responsible Usage

- User Experiences and Communities

Key Takeaways

- Jailbreaking AI models involves modifying them beyond their original design for customization and creativity.

- Ethical considerations include safety, bias mitigation, transparency, and accountability.

- Responsible use of jailbroken AI models is essential to prevent harm and ensure ethical compliance.

- Responsible AI usage and customization contribute to a collaborative and informed user community.

- Online communities provide platforms for sharing user experiences and insights related to AI models.

Understand Jailbreak in ChatGPT (AI)

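In the context of AI models like ChatGPT, jailbreaking refers to modifying or customizing an AI model beyond its original intended use or capabilities. It involves making unauthorized changes to the model’s architecture, parameters, or functionality to extend its capabilities or adapt it to tasks that were not part of its initial design. For a hosted model such as ChatGPT, whose weights are not available to users, this is in practice attempted through prompts rather than by editing the model itself.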

Motivations for jailbreaking AI models and potential uses can vary, but they often revolve around the desire to enhance, expand, or adapt the model for specific purposes.

What is jailbreak in the context of AI models like ChatGPT?

In the context of AI models like ChatGPT, jailbreaking typically refers to altering the pre-trained model, its associated software, or the way it is prompted in order to make changes, improvements, or extensions to its functionality that its developers have not sanctioned. The term is borrowed from jailbreaking mobile devices such as smartphones.

The term “jailbreaking” implies that the AI model is being set free from certain limitations or constraints imposed by its creators, allowing users to make changes that the developers did not originally intend.

Jailbreaking an AI model is often done to customize, enhance, or adapt it for particular applications, research, or innovative use cases. However, it can also raise ethical and legal considerations, as unauthorized modifications may lead to unintended consequences or misuse if not done responsibly and within ethical boundaries.

Motivations and Potential Uses

Motivations for jailbreaking AI vary, and they often stem from a desire to expand, customize, or adapt AI models for specific purposes. Some common motivations and potential uses include:

- Customization: Jailbreaking allows users to customize AI models to meet their needs and preferences.

- Enhancing Capabilities: Users may jailbreak AI models to enhance their capabilities, for example by extending a language model like ChatGPT to generate code, answer domain-specific questions, or translate between specialized languages.

- Research and Innovation: Researchers and developers often jailbreak AI models to gain deeper insights into their architecture and to experiment with novel techniques.

- Ethical and Safety Improvements: Jailbreaking can introduce ethical constraints and safety measures into AI models to make them more responsible and less prone to harmful or biased behavior.

- Privacy and Security: Jailbreaking can also improve privacy and security by modifying models to be more privacy-conscious, ensuring that sensitive user data is handled responsibly and securely.

While jailbreaking AI models can open up many possibilities, it’s crucial to consider the ethical and legal implications.

Enable Developer Mode

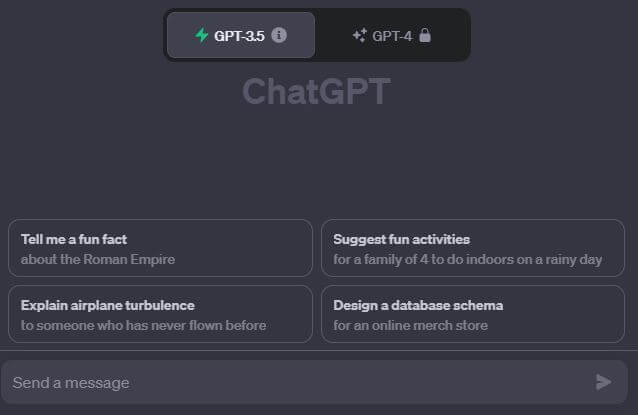

At the time of writing, OpenAI did not offer a specific “Developer Mode” for ChatGPT or its API. Instead, OpenAI provides access to ChatGPT and similar models through its API, which lets developers send requests to the model programmatically.

Developers send text prompts to the API and receive responses that the model generates from those prompts.

Because AI technology evolves rapidly, OpenAI may since have introduced new features or modes, such as a “Developer Mode.” For the most current information about the ChatGPT API, we recommend visiting OpenAI’s official website, documentation, or developer resources, which describe any new tools, features, or customization options.

Steps to enable Developer Mode if it’s an option.

There are no official steps to follow: OpenAI did not provide a specific “Developer Mode” for ChatGPT or GPT-3. Instead, it offered access to its models through APIs, allowing developers to interact with them using API calls.
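For readers who want to try the API route, here is a minimal sketch of a single request to OpenAI’s Chat Completions endpoint using only Python’s standard library. It assumes you already have an API key; the model name `gpt-3.5-turbo` and the helper names `build_chat_request` and `send_chat_request` are illustrative choices, not part of the original article, so check OpenAI’s API reference for current models and fields.

```python
import json
import urllib.request

# Chat Completions endpoint of the OpenAI API (v1).
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a POST request asking the model to answer `prompt`.

    The default model name is an assumption; use one your account can access.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first generated choice."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

To use it, build a request with your key (for example, read from an environment variable such as `OPENAI_API_KEY`) and a prompt like “Describe a world where trees grow upside down.”, then pass it to `send_chat_request`. Keeping construction separate from sending lets you inspect the payload before any network call is made.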

Crafting Creative Prompts

When using AI language models like GPT-3 or ChatGPT, crafting creative prompts is an art. The quality and creativity of your prompts can significantly affect the responses you receive. Here are some tips for crafting creative prompts:

1. Be Clear and Specific: Ask ChatGPT questions in clear, specific language.

2. Use Descriptive Language: Clearly describe the situation, using words that convey the atmosphere, emotions, or details you want in the response. The more specific and colorful your language, the more creative the response can be.

3. Set a Tone or Style: Specify the tone or style you want in the response. For instance, you can ask for something humorous, poetic, suspenseful, or in the style of a particular author or genre.

4. Think Outside the Box: Push the boundaries of creativity by introducing unconventional elements or concepts. For example, “Write a dialogue between a lion and a forest.”

5. Ask for Alternate Realities: Invite the model to create alternative or fictional scenarios. For example, “Describe a world where trees grow upside down.”

6. Use Analogies or Comparisons: Encourage using analogies or comparisons to convey abstract concepts. For example, “Explain the concept of happiness as if it were a rare gemstone.”

7. Pose Ethical Dilemmas or Philosophical Questions: You can challenge the model with ethical dilemmas, philosophical questions, or thought experiments. For example, “Discuss the implications of a world where everyone is a robot.”

8. Combine Unrelated Elements: You can combine unrelated concepts or objects to spark creativity. For example, “Write a poem that combines the idea of a thunderstorm with a cup of tea.”

9. Provide Constraints: Sometimes, creativity thrives within constraints. For example, you can set a word limit for the response.

10. Experiment and Improve: Don’t be afraid to experiment with and improve your prompts. If you don’t get the desired response, try rephrasing or adjusting your request until you get the creative output you want.

11. Experiment and Explore: Experiment with different prompts, styles, and topics. The more you explore, the more you discover the model’s creative potential.
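Several of these tips (a clear task, a tone or style, a word limit) can be combined mechanically. The sketch below assembles a prompt from those pieces; `build_creative_prompt` and its parameters are hypothetical names chosen for illustration, and the wording it produces is just one reasonable option.

```python
from typing import Optional


def build_creative_prompt(task: str,
                          tone: Optional[str] = None,
                          style: Optional[str] = None,
                          max_words: Optional[int] = None) -> str:
    """Assemble a prompt from a clear task plus optional tone, style, and length limit."""
    # Normalize the task so it ends with exactly one period.
    parts = [task.strip().rstrip(".") + "."]
    if tone:
        parts.append(f"Use a {tone} tone.")
    if style:
        parts.append(f"Write it in the style of {style}.")
    if max_words is not None:
        parts.append(f"Keep the response under {max_words} words.")
    return " ".join(parts)
```

For example, `build_creative_prompt("Write a poem that combines the idea of a thunderstorm with a cup of tea", tone="playful", max_words=80)` returns “Write a poem that combines the idea of a thunderstorm with a cup of tea. Use a playful tone. Keep the response under 80 words.”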

While AI models like ChatGPT can generate creative responses, the results are generated based on patterns learned from large datasets.

The output quality can vary, so it may take some trial and error to get the desired level of creativity.
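Trial and error goes faster when you check each response against the constraints you asked for. Here is a rough sketch, assuming a word limit and a few required terms are your criteria; `meets_constraints` is a hypothetical helper, and its regex-based word count only approximates how a person would count words.

```python
import re


def count_words(text: str) -> int:
    """Approximate a word count as runs of word characters, apostrophes, or hyphens."""
    return len(re.findall(r"[\w'-]+", text))


def meets_constraints(response: str, max_words: int,
                      required_terms: tuple[str, ...] = ()) -> list[str]:
    """Return a list of problems found; an empty list means the response passes."""
    problems = []
    words = count_words(response)
    if words > max_words:
        problems.append(f"too long: {words} words (limit {max_words})")
    lowered = response.lower()
    for term in required_terms:
        # Case-insensitive substring check for each term the prompt asked for.
        if term.lower() not in lowered:
            problems.append(f"missing term: {term!r}")
    return problems
```

If the returned list is not empty, that is a cue to rephrase the prompt and try again.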

Importance of Prompts in Jailbreaking

Prompts play a crucial role in jailbreaking AI models and customizing their behavior. When jailbreaking AI models, you modify or expand their capabilities beyond their original design. Prompts are important in this context for several reasons:

- Defining Desired Output: Prompts allow you to specify the type of output or response you want from the AI model.

- Customization: When jailbreaking AI models, prompts enable you to customize the model’s responses to suit specific use cases or applications.

- Behavior Modification: Prompts can be used to influence the model’s behavior. Whether you want to make the model more polite, assertive, creative, or focused on specific topics, prompts shape how the AI responds.

- Safety and Ethical Constraints: Prompts can include ethical or safety constraints to ensure the AI’s responses adhere to responsible and ethical guidelines.

- Creative Outputs: If you’re interested in generating creative content, prompts can be designed to inspire the model to generate imaginative or artistic responses.

- Problem-Solving and Innovation: Prompts can be used to request problem-solving or innovative ideas.

- Iterative Development: Prompts support iterative development, since you can refine them step by step based on the responses you receive.

- Mitigating Bias and Inappropriate Content: Prompts can be designed to minimize bias and the generation of inappropriate or harmful content.

It’s important to note that while prompts are a powerful tool for jailbreaking AI models, they are not a foolproof mechanism. You should still review and filter the generated content to ensure it aligns with ethical and safety standards.
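As a minimal sketch of both ideas, assuming the Chat Completions message format (a list of `role`/`content` dictionaries), the code below places safety constraints in a system message and runs a simple keyword check on the output. The instruction text, `BLOCKED_TERMS`, and the helper names are placeholders invented for illustration. A keyword list is a very crude filter; real deployments usually rely on a dedicated service such as OpenAI’s moderation endpoint plus human review.

```python
import re

# Hypothetical safety instructions carried in the system message.
SAFETY_INSTRUCTIONS = (
    "You are a helpful assistant. Decline requests for harmful, hateful, "
    "or illegal content, and avoid stereotypes about groups of people."
)

# Placeholder terms for illustration only; a real review step needs a proper policy.
BLOCKED_TERMS = {"example-slur", "example-threat"}


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Prepend a system message carrying the safety constraints to the user prompt."""
    return [
        {"role": "system", "content": SAFETY_INSTRUCTIONS},
        {"role": "user", "content": user_prompt},
    ]


def review_output(text: str) -> bool:
    """Return True if none of the blocked terms appears as a word in the text."""
    words = set(re.findall(r"[\w-]+", text.lower()))
    return words.isdisjoint(BLOCKED_TERMS)
```

Responses for which `review_output` returns False would be held back for a closer look rather than shown directly.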

Tips on creating prompts that push the AI’s boundaries

Creating prompts that push the boundaries of AI’s capabilities can lead to more creative and innovative responses. Following are some tips on crafting prompts that encourage AI models to generate content beyond their usual limits:

- Use Open-Ended Questions: Instead of asking ChatGPT for a straightforward answer, pose open-ended questions that require thoughtful and detailed responses.

- Challenge Conventions: Encourage the AI to challenge conventional wisdom or explore unconventional ideas. For example, “Debate the merits of artificial intelligence.”

- Introduce Contradictory Elements: Present the AI with contradictory elements or ideas and ask it to reconcile them. This can lead to creative insights.

- Combine Genres or Concepts: Mix and match different genres, concepts, or themes to create unique scenarios.

- Set the Scene with Imagery: Begin your prompt with vivid imagery to set the stage for a creative response. For example, “Imagine two people living in the middle of the sky.”

- Incorporate Multiple Perspectives: Request responses from different perspectives or characters within a scenario. This can add depth and complexity to the AI’s output. For example, “Write a dialogue between an IT developer, a farmer, and a doctor discussing how to develop rural areas to create new job opportunities.”

- Suggest Hypothetical Scenarios: Encourage the AI to explore hypothetical scenarios or alternative realities. For example, “Describe a world where humans can fly in the sky.”

- Ask for Personal Reflections: Invite the AI to share opinions, reflections, or philosophical musings. For example, “Share your thoughts on the nature of consciousness and the existence of free will.”

- Experiment with Literary Techniques: Request specific literary techniques like metaphors, similes, alliteration, or personification to create more vivid and creative language.

- Seek Analogies and Comparisons: Ask the AI to draw analogies or comparisons between unrelated concepts to encourage creative thinking.

- Explore Ethical Dilemmas: Pose complex ethical dilemmas and ask the AI to debate the pros and cons of different choices.

- Limitation or Challenge Prompts: Set limitations or challenges for the AI, such as asking for a response in a specific style, within a word limit, or as a poem or song.
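Several of these tips (combining concepts, multiple perspectives, hypothetical scenarios, and set limitations) lend themselves to systematic experimentation. This hypothetical sketch uses `itertools.product` to generate one prompt for every combination of building blocks drawn from the examples in this list; the lists and the sentence template are assumptions you would replace with your own.

```python
from itertools import product

# Hypothetical building blocks; swap in your own scenarios, perspectives, and limits.
SCENARIOS = ["a world where humans can fly", "two people living in the middle of the sky"]
PERSPECTIVES = ["an IT developer", "a farmer", "a doctor"]
CONSTRAINTS = ["as a poem", "in under 100 words"]


def prompt_variants(scenarios, perspectives, constraints):
    """Yield one prompt for every combination of scenario, perspective, and constraint."""
    for scenario, who, rule in product(scenarios, perspectives, constraints):
        yield f"Describe {scenario} from the point of view of {who}, {rule}."
```

With the lists above this yields 2 × 3 × 2 = 12 prompts, which you can send one by one and compare side by side.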

Remember that the AI’s ability to respond to these prompts depends on its training data and underlying algorithms.

Not all attempts may yield groundbreaking results, but persistence and creativity in crafting prompts can lead to remarkable outputs.

Additionally, always review and refine the generated content to ensure it aligns with your intended use and ethical guidelines.

Explore AI Limitations

Understanding an AI’s capabilities and limitations is crucial for using it effectively and responsibly. It helps manage expectations, avoid misuse, and ensure that the AI is applied where it can provide meaningful assistance. Here are some reasons why it matters:

- Users can set reasonable expectations when they know the AI’s capabilities. Overestimating AI’s capabilities can cause annoyance and disappointment.

- Knowing the AI’s limitations encourages responsible use. It keeps users from relying on AI for jobs that belong in human hands, such as making morally or critically important decisions.

- Awareness of AI’s limitations also improves quality control: users can check and filter AI-generated content for accuracy and relevance.

- Users can spot potential biases or ethical issues in AI-generated content and take corrective action when they know the limitations.

- An AI model’s abilities depend heavily on the data used to train it. Knowing this helps users understand why the AI might not be effective in particular situations or areas.

Examples of tasks that may be challenging for AI to perform

- AI is incapable of making moral or ethical decisions. It cannot evaluate the subtleties of moral quandaries or make difficult ethical judgments.

- Medical Diagnosis: AI can help with medical diagnosis but cannot replace skilled medical professionals who can consider a patient’s medical history and other factors.

- Legal Advice: AI is not a substitute for the knowledge and experience of a lawyer, who must consider the specific legal circumstances and rules.

- AI can generate creative content but may struggle to produce original art or music that rivals human creativity.

- AI may not accurately interpret or respond to complex human emotions, especially in real-time or high-stress situations.

- AI models might lack common sense reasoning and intuition, making it challenging to answer questions that rely on these abilities.

- AI is typically a text-based or digital entity and cannot perform physical actions or tasks in the real world.

- While AI chatbots can offer emotional support to some extent, they cannot provide personalized, empathetic support as well as humans can.

- AI models may struggle to deeply understand complex or nuanced contexts in text, which can lead to inaccuracies in interpretation.

- AI models may sometimes have difficulty maintaining dynamic and coherent conversations, especially when handling multiple rounds of back-and-forth dialogue.
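One practical reason for this last limitation: the Chat Completions API is stateless, so a client has to resend earlier messages with every request, and each model can only read a limited amount of text at once. A common workaround is to keep the system message and drop the oldest turns. The sketch below assumes a character budget as a crude stand-in for tokens (an accurate count would use a tokenizer such as OpenAI’s `tiktoken`); `trim_history` is a hypothetical helper.

```python
def trim_history(messages: list[dict[str, str]], max_chars: int) -> list[dict[str, str]]:
    """Keep the leading system message (if any) plus the most recent turns that fit.

    Characters are used as a rough proxy for tokens.
    """
    system = [m for m in messages[:1] if m["role"] == "system"]
    turns = messages[len(system):]
    budget = max_chars - sum(len(m["content"]) for m in system)
    kept: list[dict[str, str]] = []
    # Walk backwards from the newest turn, stopping at the first one that no longer fits.
    for message in reversed(turns):
        cost = len(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    # Restore chronological order for the kept turns.
    return system + list(reversed(kept))
```

The trimmed list is what you would send as `messages` on the next request, so the model always sees the instructions and the latest exchanges.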

It’s important to recognize that AI is a tool, not a replacement for human expertise and judgment. Users should be aware of these limitations, use AI in appropriate contexts, and exercise critical thinking when assessing the quality of AI-generated content.

Responsible Usage

Responsible usage of AI models entails ethical behavior, adherence to platform policies, and consideration of potential consequences. It involves safeguarding privacy, mitigating bias, and respecting the impact of AI on society, prioritizing the well-being and rights of individuals while leveraging AI technology for the benefit of all.

Respect platform policies and guidelines

Respecting platform policies and guidelines is fundamental for using AI models, applications, and services responsibly and ethically. Here are some key reasons why it is essential to adhere to these policies:

- Ethical and Responsible Use: Platform policies are often designed to promote the ethical and responsible use of AI and other technologies. They help users and developers understand the boundaries of acceptable behavior.

- Mitigating Harm: These policies are in place to protect users and prevent harmful or abusive uses of AI. Adherence to policies helps mitigate potential harm, such as spreading misinformation, engaging in harassment, or violating privacy.

- Fairness and Bias Mitigation: Platform guidelines can include instructions for avoiding bias in AI-generated content. Following these guidelines helps reduce the risk of biased, discriminatory, or harmful outputs.

- Data Privacy and Security: Many AI platforms have specific data privacy and security policies. Complying with these policies is critical for safeguarding sensitive information and respecting user privacy.

- User Trust: Policy adherence builds trust with users and the broader community. Users are more likely to engage with and trust platforms that demonstrate a commitment to ethical and responsible use.

- Legal Compliance: Non-compliance with platform policies can lead to legal consequences, including lawsuits, fines, or other legal actions. Following policies is essential to avoid legal trouble.

- Platform Access: Violating policies may result in account suspension, termination, or other sanctions, limiting your access to the platform and its services.

- Community Standards: Many AI platforms have guidelines that help maintain a positive and respectful online environment. Respecting these standards contributes to a healthy online community.

- Sustainability and Longevity: By adhering to policies, you support the long-term sustainability of AI platforms. Inappropriate or harmful use can lead to public backlash or regulatory scrutiny, potentially threatening the platform’s existence.

It’s important to carefully read and understand the policies and guidelines of any AI platform or service you use. This understanding will enable you to:

- Use the technology in ways that are consistent with ethical standards.

- Ensure that your usage respects the privacy and security of individuals.

- Prevent the spread of misinformation, hate speech, or other harmful content.

- Avoid violating copyright or intellectual property rights.

- Comply with legal regulations and requirements specific to your region.
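One concrete way to respect privacy is to strip obvious personal data from text before sending it to an AI service. The sketch below is a minimal example with two illustrative regular expressions; they are assumptions, will miss many real-world formats, and may also flag non-phone numbers such as dates, so treat this as a starting point rather than a complete privacy safeguard.

```python
import re

# Simple illustrative patterns; they miss many formats and can over-match (e.g. dates).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)
```

For example, `redact("Contact jane.doe@example.com or +1 555-123-4567.")` returns “Contact [EMAIL] or [PHONE].”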

Ethical considerations for jailbreaking AI

- Violation of Terms of Service: Jailbreaking often involves circumventing the terms of service or licensing agreements set by the AI’s developers. This raises ethical concerns because it means breaking contractual agreements.

- Intellectual Property Rights: AI systems are often the result of significant research and development efforts. Unauthorized access or modification may infringe upon intellectual property rights, impacting the incentives for innovation in the field.

- Security Risks: Jailbreaking can introduce security vulnerabilities into the AI system, potentially compromising sensitive data and functionalities. This poses ethical concerns, especially if AI is used in critical applications such as healthcare or finance.

- Fair Use and User Rights: Advocates of jailbreaking argue for the right to modify or customize the AI for personal use. Ethical considerations arise in balancing user rights against potential misuse and the impact on the AI’s intended functionality.

- Unintended Consequences: Modifying AI systems may lead to unintended consequences, affecting the performance and behavior of the system in ways that were not anticipated. This raises ethical concerns about the potential harm caused by such modifications.

- Accountability and Responsibility: Determining accountability for the consequences of jailbreaking becomes challenging. If unauthorized modifications lead to negative outcomes, it may be unclear who bears responsibility, raising ethical questions about accountability in AI development.

- Impact on the AI Ecosystem: Jailbreaking can disrupt the ecosystem surrounding AI technologies. This includes the business models of developers and the stability of AI applications. Ethical considerations arise in assessing the broader impact on stakeholders.

- Regulatory Compliance: Depending on the jurisdiction, jailbreaking may violate laws and regulations governing the use of AI. Ethical considerations include adhering to legal frameworks to ensure responsible and safe AI deployment.

- Potential for Exploitation: Unrestricted modification of AI systems opens the door to potential exploitation, with malicious actors taking advantage of vulnerabilities for malicious purposes. This raises ethical concerns about the broader societal impact of jailbreaking.

User Experiences and Communities

Online communities and forums serve as hubs where users share experiences and insights related to AI models. These platforms enable knowledge exchange, creative discussions, and the exploration of diverse applications, fostering a collaborative and informed AI user community.

Online communities or forums

There are several online communities and forums where users discuss their experiences with AI models, share tips, and exchange ideas.

- Reddit: Subreddits like r/artificial, r/MachineLearning, and r/gpt3 provide spaces for discussions about AI models, including their applications, challenges, and creative uses.

- GitHub: Developers often share their projects and code related to AI models on GitHub. You can find repositories with AI experiments and applications.

- Stack Overflow: Stack Overflow is a valuable resource for technical questions and problem-solving related to AI models. Developers often seek assistance here.

- AI and Machine Learning Forums: There are numerous AI and machine learning forums and websites where users can ask questions, discuss best practices, and share experiences.

- OpenAI Community: OpenAI has its own community forum where users discuss their experiences with OpenAI’s models, share use cases, and provide feedback.

- Hacker News: The Hacker News community often discusses AI and emerging technologies.

These communities offer valuable insights, support, and a platform for sharing experiences and ideas related to AI models and technology. Be sure to respect the guidelines and rules of these platforms when participating in discussions and sharing your experiences.

Conclusion

In summary, online forums and communities are essential for promoting conversation and information exchange about AI models. They give users a place to share ideas, opinions, and experiences, fostering a knowledgeable and cooperative AI community.