Generative AI Security in the Cloud: Overview

There is no security concern more elevated in the mind of enterprise users as they grapple with the rapid deployment of generative AI technologies to power innovation, automate business processes, and personalize customer interactions than their risk of exposure to attack. When working with sensitive data, proprietary models, and cloud-native infrastructure, generative AI security in the cloud becomes a strategic urgency.

When protecting your cloud-based generative AI initiatives, it is more than firewalls and checklists, or even fortifying compliance standards. It will require a layered approach, which involves not only how data is accessed but also considering the integrity of the model and the system’s architecture, as well as monitoring potential threats. In this blog, we will provide practical best practices for securing, building with, and deploying generative AI systems that are secure, resilient, and compliant in the cloud. For organizations that contract with leading vendors of Generative AI development services, this framework also provides a basis for considering security-first collaboration.

The Security Landscape of Generative AI in Cloud

Generative AI combined with cloud platforms such as AWS, Azure, or Google Cloud brings massive computational scalability and model deployment velocity. But it also poses several generative AI security risks:

- Data Leaks: Poorly treated training data or prompt logs could reveal private customer, financial, or company information.

- Model Poisoning: Adversaries can inject biased or harmful training data or weights to cause unsafe or untrustworthy outputs.

- Trigger Injection Attacks: AI results are manipulated by attackers by shaping inputs in a specific way, allowing access to back-end systems or providing unauthorized answers.

- Unauthorized Access to Models: Another significant risk is the potential for adversaries to steal or misuse research models without proper authentication and encryption.

- Compliance Breach: A breach in GDPR, HIPAA, or any other regulation in the sector for losing the data flow in motion.

Strategies to mitigate this dynamic threat model, combined with technical and operational best practices, become even more critical as your models scale and train within multi-tenant cloud environments.

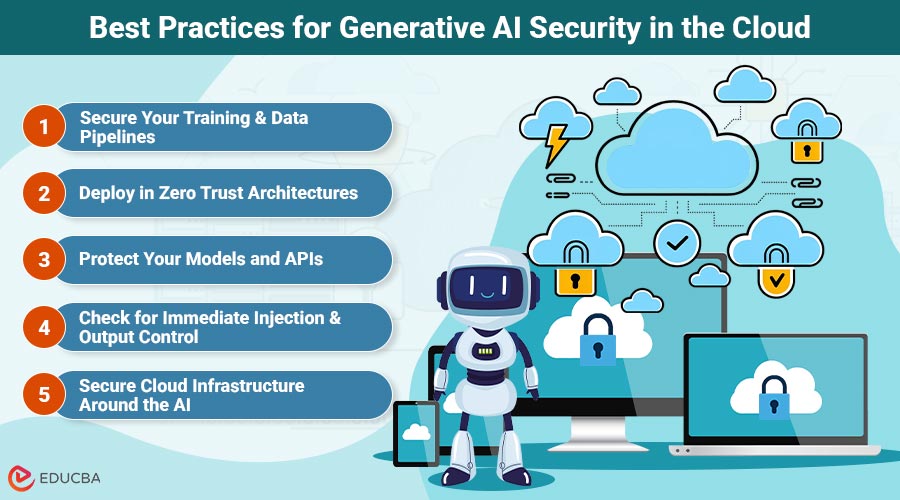

Best Practices for Generative AI Security in the Cloud

Here are some of the best practices for you to secure GenAI projects in the cloud:

1. Secure Your Training and Data Pipelines

The premise of a secure GenAI system begins with practicing data hygiene. Whether tuning LLMs or training diffusion models, the data used for training must be collected, processed, and stored securely.

- Encrypting data at rest and in transit with the help of TLS 1.2+ and AES-256.

- Use ACLs and RBAC with data storage systems (S3, Azure Blob).

- Sanitize all PII and confidential IP from training data.

- Record and monitor all data pipeline operations.

With historical training datasets and real-time prompt logs both securely stored, organizations minimize their risk of exposure to data leakage, a key threat to the security of generative AI.

2. Deploy in Zero Trust Architectures

Strengthen defenses inside and at the edges of your perimeter when moving GenAI workloads to the cloud.

- Adopt a zero-trust approach that presumes anything can compromise your systems.

- Mandate multi-factor authentication (MFA) for all users and administrators.

- Leverage single-tenant VPCs, private endpoints, and network firewalls for ingress and egress controls for your model APIs.

- Combine IAM policies with cloud native services like AWS KMS, Azure Key Vault, or GCP Secret Manager.

Integrating this approach with your Generative AI development services provider helps build layers of defense for both internal and external applications.

3. Protect Your Models and APIs

Your generative models, whether pre-trained, fine-tuned, or open-source, are high-value targets.

- Encrypt the weights of models in your cloud storage or containers.

- Limit the number of calls by a user, and rate-limit API access to prevent brute forcing (or inference pulling).

- Version and monitor your models with ML lifecycle tools like MLflow, SageMaker, or Vertex AI.

- If you are fine-tuning models on your data, use secure ephemeral compute and wipe it after deployment.

Restricting and streamlining them would help reduce the potential for someone to steal, flip, or tamper with them.

4. Check for Immediate Injection and Output Control

Application-level threat detection should be part of generative AI cloud security.

- Seriously, block those suckers using input validation and prompt sanitization.

- Introduce guardrails for identifying unsafe, biased, or manipulative outputs.

- Use either RLHF or RAG to repair such generations.

- Test and red-team your models against adversarial prompts repeatedly to ensure robustness.

For compliance and trust reasons, you also want to monitor the behavior of your model, in particular if it is operating in a regulated environment (healthcare, banking, government).

5. Secure Cloud Infrastructure Around the AI

Your GenAI app is only as secure as the company hosting it.

- You need to leverage containerization, including Docker and Kubernetes, by using hardened base images.

- Utilize least-privilege access to AI Orchestration environments.

- Additionally, enable continuous vulnerability scanning using Prisma Cloud, AWS Inspector, and other tools.

The most common culprits behind cloud breaches are misconfigured infrastructure, rather than the AI tools or APIs themselves.

6. Ensure Compliance and Governance from Day One

Security involves more than just preventing threats; it also means accountability.

- You ned to map how your data flows and track your CCAP, HIPAA, GDPR, and other industry-standard compliances.

- Track everything that appears with your API requests, including data uploads, audit logs, training logs, etc.

- Assess risk and conduct penetration testing by 3rd-party experts.

- Establish a thoughtful AI policy on bias, safety, fairness, and explainability.

It becomes easy to scale ethical and legal GenAI projects that are secure when your project has governance.

What Trusted Partners Do in GenAI Cloud Security?

Building secure generative AI systems in the cloud is not a one-person job. Businesses need to select technology vendors, cloud service providers, and AI developers who value security and transparency. That is why partnering with a dedicated team of a Generative AI development company becomes indispensable.

Look for partners who:

- Demonstrate experience in cloud-native AI security solutions.

- Adhere to best practices in DevSecOps and ML-Ops.

- Offer post-deployment monitoring and support.

- Can assist in compliance documentation and audits.

In high-stakes use cases such as finance, defense, or healthcare, entrusting your AI to vendors with a great security DNA may be the difference between success and misery.

Emerging Trends in Generative AI Security in the Cloud

With the advancements in generative AI, new trends are emerging to counter its threats. Keep an eye on:

- Privacy-preserving federated learning: Decentralized training methods to preserve data privacy and to ensure secure model updates without revealing sensitive information about data during the process.

- Differential privacy techniques: Convenient for any public-facing model that needs to be able to learn from user actions, yet still preserve the privacy of specific people.

- Blockchain-backed model provenance: Ensuring the experiences of trained models (training lineage and model history) cannot be tampered with.

Adopting these trends will help ensure that your generative AI applications more robustly adapt to these evolving threats.

Final Thoughts

Generative AI is powerful, but it also increases the attack surface. As organizations embrace it, they must also prioritize generative AI security in the cloud. By securing data pipelines, enforcing zero-trust principles, protecting models, monitoring outputs, and staying compliant, you can confidently deploy GenAI projects. And with the right partners and emerging tools, your AI innovations can scale securely, without compromise.

Author Bio: Patel Nasrullah

Patel Nasrullah is the co-founder of Peerbits, a global SaaS provider evolving into a scalable AI development company. With over a decade of experience in enterprise-grade digital products, he brings expertise in cloud architecture, intelligent automation, and scalable platforms. Patel leads Peerbits’ AI expansion, delivering custom AI and ML solutions for business value.

Recommended Articles

We hope this guide on generative AI security in the cloud helps you understand the risks and best practices for protecting AI systems. Explore these recommended articles to stay ahead in securing your cloud-based AI workflows.