Updated March 13, 2023

Introduction to TensorFlow Transformer

TensorFlow Transformer refers to a library, or set of libraries, used mainly for Natural Language Understanding (NLU) and Natural Language Generation (NLG). It provides general-purpose architectures such as GPT-2, BERT, XLM, RoBERTa, XLNet, DistilBERT, and many others, with more than 32 pretrained models covering more than 100 languages. Besides TensorFlow, the transformers also interoperate with PyTorch and JAX.

This article looks at TensorFlow transformers: what they are, how to use them, how to find a model, the preprocessing steps, and the model architecture.

What is a TensorFlow transformer?

TensorFlow transformers are pretrained libraries and models that can be used in TensorFlow machine learning workflows, for example to translate text from one language to another. The core idea behind the transformer is self-attention: the representation of a sequence is computed by attending to different positions of that same sequence. Internally, the transformer builds a stack of self-attention layers. Instead of relying on CNNs or RNNs, it uses this stack to handle inputs of variable size. This layered architecture has several advantages:

- No assumptions are made about the temporal or spatial relationships in the data, which suits processing a set of objects, for example StarCraft units.

- Outputs can be computed in parallel, rather than serially as in an RNN.

- Distant items can affect each other's outputs without passing through many convolution layers or RNN steps, as in the Scene Memory Transformer.

- Long-range dependencies, which are a challenge in many sequence tasks, can be learned.
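The self-attention idea described above can be illustrated with a minimal sketch in plain Python. It is not the TensorFlow implementation: the function names are made up for this example, and it assumes the queries, keys, and values are the input vectors themselves, whereas a real transformer first passes them through learned projections. Note that it works for a sequence of any length.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Scaled dot-product self-attention over a list of equal-length vectors.

    Assumption: queries, keys, and values are the input vectors themselves.
    A real transformer obtains them from learned projections.
    """
    depth = len(sequence[0])
    outputs = []
    for query in sequence:
        # Score this position against every position in the same sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(depth)
            for key in sequence
        ]
        weights = softmax(scores)
        # The output is a weighted average of all value vectors.
        outputs.append([
            sum(w * value[i] for w, value in zip(weights, sequence))
            for i in range(depth)
        ])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
attended = self_attention(tokens)
for row in attended:
    print([round(x, 3) for x in row])
```

Each output row depends only on the input sequence and not on the other output rows, which is why the rows can be computed in parallel.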

How to use a TensorFlow transformer?

To set up TensorFlow Transformer, first install the TensorFlow Datasets package with the following command:

pip install tensorflow_datasets

Next, install the TensorFlow Text package by running the command below:

pip install -U tensorflow-text

Running the above command gives the following output:

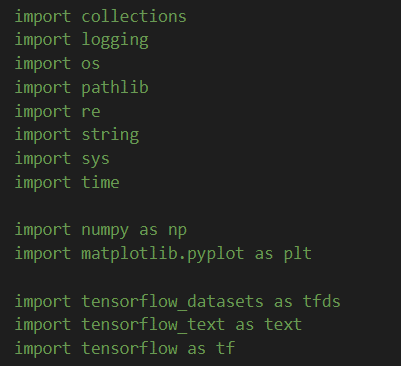

You can then start using the TensorFlow transformer by importing the packages into your program.
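As a rough sketch of what those imports might look like, the snippet below first checks that the packages are installed and only then imports them. The helper names `missing_packages` and `import_transformer_packages` are hypothetical, and the aliases `tf`, `tfds`, and `text` are a common convention rather than a requirement.

```python
import importlib.util

# Import names of the packages installed in the steps above, plus TensorFlow itself.
REQUIRED = ["tensorflow", "tensorflow_datasets", "tensorflow_text"]


def missing_packages(names):
    """Return the packages from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def import_transformer_packages():
    """Import the packages under their conventional aliases."""
    import tensorflow as tf
    import tensorflow_datasets as tfds
    import tensorflow_text as text
    return tf, tfds, text


missing = missing_packages(REQUIRED)
if missing:
    print("Install these packages first:", ", ".join(missing))
else:
    tf, tfds, text = import_transformer_packages()
    print("TensorFlow version:", tf.__version__)
```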

After that, work through the following steps to use the transformer:

- Tokenize the text into token IDs.

- Set up the input pipeline.

- Add positional encoding for the attention layers.

- Create the transformer using the Transformer class.

- Set the hyperparameters.

- Configure the optimizer.

- Configure the loss and metrics.

- Run inference.

- Plot the attention weights.

- Export the model.
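To make the positional encoding step more concrete, here is a minimal sketch in plain Python of the sinusoidal scheme from the original transformer paper, in which even dimensions use a sine and odd dimensions a cosine. The function name and arguments are chosen for this example, and implementations differ in detail (some concatenate the sines and cosines instead of interleaving them), so treat this as an illustration rather than the exact code of any library.

```python
import math


def positional_encoding(length, d_model):
    """Return a length x d_model table of sinusoidal position encodings."""
    table = []
    for pos in range(length):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 form a sine/cosine pair sharing one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


encoding = positional_encoding(length=4, d_model=6)
print(encoding[0])  # position 0: every sine is 0.0 and every cosine is 1.0
```

The table is added to the token embeddings so that the attention layers, which otherwise treat the input as an unordered set, can take the order of the tokens into account.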

Finding Models

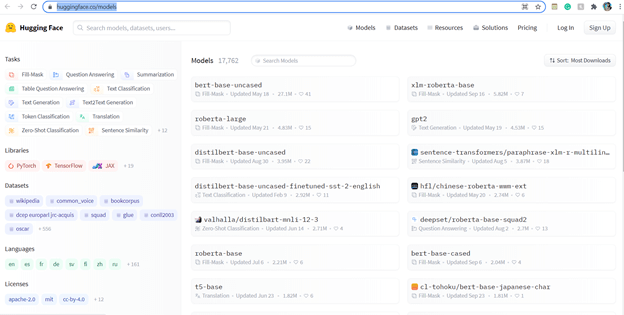

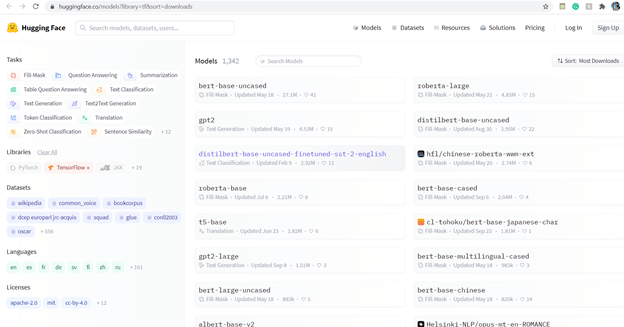

Hugging Face is the company that creates and maintains the Transformers library. Its ecosystem offers a large list of models and ready-to-use packages, which makes it easy to find the model you need. Models built by Hugging Face and by the community are both listed at https://huggingface.co/models. The screenshots above show the models page of this website.

You can filter the search results using the dropdowns provided. Models can be filtered by the framework used to build them, such as TensorFlow or PyTorch, and by task or use case, such as classification or question answering.

To list the models built with TensorFlow, click TensorFlow in the Libraries section; the results then show the most popular TensorFlow models. To tell whether a model distinguishes between uppercase and lowercase letters, look for the Cased or Uncased remark in the model name. This distinction plays a key role in understanding text sentiment.
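The difference between cased and uncased models can be illustrated with a toy vocabulary. The `build_vocab` helper below is hypothetical and far simpler than a real tokenizer; it only shows that lowercasing the text first makes "Apple" and "apple" share one ID, while a cased vocabulary keeps them apart.

```python
def build_vocab(words, lowercase):
    """Assign an integer ID to each distinct word, optionally lowercasing first."""
    vocab = {}
    for word in words:
        key = word.lower() if lowercase else word
        vocab.setdefault(key, len(vocab))
    return vocab


words = ["Apple", "apple", "US", "us"]
uncased = build_vocab(words, lowercase=True)
cased = build_vocab(words, lowercase=False)
print(len(uncased), len(cased))  # prints: 2 4
```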

Preprocessing steps

The steps required to use the TensorFlow transformer are as follows:

- Preprocess the data.

- Initialize the Hugging Face model and tokenizer.

- Encode the input data to get the input IDs and attention masks.

- Build the complete model architecture by integrating the Hugging Face model.

- Set the metrics, loss, and optimizer.

- Train the model.
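The encoding step in the list above produces two aligned arrays: input IDs and attention masks. The sketch below shows the idea with a toy whitespace tokenizer in plain Python. The `encode_batch` function, the vocabulary, and the reserved IDs are all hypothetical; a Hugging Face tokenizer uses a learned subword vocabulary and its own special tokens.

```python
PAD_ID = 0  # hypothetical ID reserved for padding
UNK_ID = 1  # hypothetical ID for words missing from the vocabulary


def encode_batch(sentences, vocab, max_length):
    """Convert sentences to fixed-length input IDs plus attention masks."""
    input_ids, attention_mask = [], []
    for sentence in sentences:
        ids = [vocab.get(word, UNK_ID) for word in sentence.lower().split()]
        ids = ids[:max_length]  # truncate sentences that are too long
        padding = max_length - len(ids)
        input_ids.append(ids + [PAD_ID] * padding)
        # 1 marks a real token, 0 marks padding the model should ignore.
        attention_mask.append([1] * len(ids) + [0] * padding)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


vocab = {"the": 2, "movie": 3, "was": 4, "great": 5}
batch = encode_batch(["The movie was great", "Great movie"], vocab, max_length=5)
print(batch["input_ids"])       # [[2, 3, 4, 5, 0], [5, 3, 0, 0, 0]]
print(batch["attention_mask"])  # [[1, 1, 1, 1, 0], [1, 1, 0, 0, 0]]
```

Padding every sentence to the same length lets the batch be stacked into one tensor, and the mask tells the attention layers which positions hold real tokens.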

How the input data is preprocessed for the transformer model depends on the use case and on the data you have. With the TensorFlow Transform library (tf.Transform), string values can be converted to integers by building a vocabulary over all the input values. Float values can also be converted to integers, for example by bucketing them, and inputs can be normalized using their mean and standard deviation. The preprocessed data can then be used to train the model.

Apache Beam can also be run directly by supplying the raw data, the metadata of the raw data, and a function that transforms the raw data into a dataset that can be fed to the model.
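The two transformations mentioned above, building a vocabulary for strings and normalizing numbers with the mean and standard deviation, can be sketched in plain Python. The helper names are made up for this illustration and do not call any TensorFlow API. The sketch assigns IDs in order of first appearance and assumes the numeric values are not all identical, since the standard deviation would otherwise be zero.

```python
import statistics


def apply_vocabulary(values):
    """Map each distinct string to an integer ID, in order of first appearance."""
    vocab = {}
    ids = [vocab.setdefault(value, len(vocab)) for value in values]
    return ids, vocab


def scale_to_z_score(values):
    """Normalize numbers to zero mean and unit standard deviation."""
    mean = statistics.mean(values)
    std = statistics.pstdev(values)  # population standard deviation
    return [(value - mean) / std for value in values]


colors = ["red", "green", "red", "blue"]
heights = [150.0, 160.0, 170.0, 180.0]

color_ids, color_vocab = apply_vocabulary(colors)
scaled_heights = scale_to_z_score(heights)
print(color_ids)  # [0, 1, 0, 2]
print([round(h, 3) for h in scaled_heights])
```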

Model Architecture

The model architecture of the TensorFlow transformer is shown in the figure below:

<image>

The model takes the inputs and adds positional encodings to them. Multi-head attention layers, each followed by an add-and-norm step, then process these encoded inputs to generate the output.
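The add-and-norm step can be sketched in a few lines of plain Python: the input of a sublayer is added to that sublayer's output (a residual connection), and the sum is then layer-normalized. This is a simplified illustration with made-up function names. It omits the learned scale and shift parameters that real layer normalization includes, and the attention output here is just a stand-in list of numbers.

```python
import math


def layer_norm(vector, epsilon=1e-6):
    """Normalize one vector to zero mean and approximately unit variance."""
    mean = sum(vector) / len(vector)
    variance = sum((x - mean) ** 2 for x in vector) / len(vector)
    return [(x - mean) / math.sqrt(variance + epsilon) for x in vector]


def add_and_norm(inputs, sublayer_outputs):
    """Add the residual connection, then layer-normalize each position."""
    return [
        layer_norm([x + y for x, y in zip(inp, out)])
        for inp, out in zip(inputs, sublayer_outputs)
    ]


inputs = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
attention_out = [[0.5, 0.0, -0.5], [0.1, 0.2, 0.3]]  # stand-in for an attention layer's output
normalized = add_and_norm(inputs, attention_out)
print([[round(x, 3) for x in row] for row in normalized])
```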

Conclusion

The TensorFlow transformer is used for building and applying Natural Language Understanding and Natural Language Generation models, along with the other applications discussed in this article. Finding and using a transformer model is easy, since models are available on the official Hugging Face site.
