Updated March 15, 2023

Introduction to Keras LSTM

LSTM stands for Long Short-Term Memory, a recurrent layer introduced by Sepp Hochreiter and Jürgen Schmidhuber in 1997. Based on the available runtime hardware and the layer's constraints, the Keras LSTM layer chooses between different implementations (cuDNN-based or pure TensorFlow) to get the best performance. If a GPU is available and all the arguments to the layer meet the requirements of the cuDNN kernel, the layer uses the much faster cuDNN implementation.
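Whether a layer qualifies for the cuDNN kernel can be checked in advance. The helper below is a hypothetical sketch, not part of Keras: it takes a layer configuration dictionary (for example, the one returned by get_config() on a tf.keras.layers.LSTM instance, assuming activations are serialized by name such as "tanh") and checks the criteria listed in the TensorFlow 2.x LSTM documentation: tanh activation, sigmoid recurrent activation, no recurrent dropout, no unrolling, and a bias vector. The remaining documented conditions (strictly right-padded masks, eager execution in the outermost context) depend on the data and runtime, so the sketch does not cover them.

```python
def meets_cudnn_requirements(config):
    """Hypothetical helper: check an LSTM config dict against the documented
    cuDNN criteria that can be read from the layer's own arguments.
    Missing keys fall back to the Keras LSTM defaults."""
    problems = []
    if config.get("activation", "tanh") != "tanh":
        problems.append("activation must be 'tanh'")
    if config.get("recurrent_activation", "sigmoid") != "sigmoid":
        problems.append("recurrent_activation must be 'sigmoid'")
    if config.get("recurrent_dropout", 0.0) != 0:
        problems.append("recurrent_dropout must be 0")
    if config.get("unroll", False):
        problems.append("unroll must be False")
    if not config.get("use_bias", True):
        problems.append("use_bias must be True")
    return len(problems) == 0, problems


# The default LSTM arguments are eligible for the fast cuDNN kernel
print(meets_cudnn_requirements({"units": 10}))
# -> (True, [])

# Recurrent dropout forces the generic (non-cuDNN) implementation
print(meets_cudnn_requirements({"units": 10, "recurrent_dropout": 0.2}))
# -> (False, ['recurrent_dropout must be 0'])
```

The practical takeaway is that keeping the default activations and avoiding recurrent_dropout and unroll is what lets the layer use the GPU-optimized path.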

In this article, we will study Keras LSTM and related topics: what it is, how to create a Keras LSTM model, LSTM networks and their architecture, the Keras LSTM layer class, an example, and finally, our conclusion.

What is Keras LSTM?

Recurrent neural networks (RNNs) can keep track of and remember features of previous inputs and outputs. However, there are limits to what they can do and how long they can remember. In some cases, the immediately preceding output is not enough to predict what comes next, so the network has to depend on information from outputs further back.

Let us consider an example. Take the sentence "I live in India, and I can speak Hindi" and the phrase "the green grass." To predict the word "grass" in the phrase, the RNN only needs the immediately preceding word, "green." To predict "Hindi," however, the network has to look back to "India," which appears much earlier in the sentence. This is called a long-term dependency. As the gap between the relevant words grows, it becomes almost impossible for a simple RNN to connect the information and learn from it.

In such cases, LSTM (long short-term memory) networks help avoid the long-term dependency problem. Each repeating module of an LSTM contains four interacting neural network layers, and the same module is applied repeatedly, once per time step, to carry information across long distances.
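To make the four layers concrete, here is a minimal NumPy sketch of a single LSTM time step in the widely used formulation with a forget gate, which matches the Keras LSTM layer's default activation (tanh) and recurrent_activation (sigmoid). The weight names W, U, b and the dictionary layout are illustrative assumptions, not the way Keras stores its weights internally.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    x_t: input at this step, shape (input_dim,)
    h_prev, c_prev: previous hidden state and cell state, shape (units,)
    W: dict of input weights, each (input_dim, units)
    U: dict of recurrent weights, each (units, units)
    b: dict of biases, each (units,)
    Keys 'f', 'i', 'c', 'o' name the four layers inside the module.
    """
    f = sigmoid(x_t @ W["f"] + h_prev @ U["f"] + b["f"])      # forget gate: what to keep from c_prev
    i = sigmoid(x_t @ W["i"] + h_prev @ U["i"] + b["i"])      # input gate: how much new info to write
    c_hat = np.tanh(x_t @ W["c"] + h_prev @ U["c"] + b["c"])  # candidate values to write
    o = sigmoid(x_t @ W["o"] + h_prev @ U["o"] + b["o"])      # output gate: what to expose as h_t
    c_t = f * c_prev + i * c_hat   # cell state: the long-term memory
    h_t = o * np.tanh(c_t)         # hidden state: the output at this step
    return h_t, c_t

# The same module (same weights) is applied repeatedly, once per time step
rng = np.random.default_rng(0)
input_dim, units = 3, 4
W = {k: rng.normal(size=(input_dim, units)) for k in "fico"}
U = {k: rng.normal(size=(units, units)) for k in "fico"}
b = {k: np.zeros(units) for k in "fico"}

h, c = np.zeros(units), np.zeros(units)
sequence = rng.normal(size=(5, input_dim))  # 5 time steps
for x_t in sequence:
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape, c.shape)  # (4,) (4,)
```

Because the forget gate scales the old cell state instead of overwriting it, information can survive in c_t across many steps, which is what lets an LSTM bridge gaps like the one between "India" and "Hindi."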

How to Create Keras LSTM?

To create an LSTM model, we follow the steps below –

- Defining the network

We define the network by creating a Sequential model and adding layers to it: LSTM() builds the recurrent part of the network, and Dense() produces the prediction. Our code snippet would be similar to the one shown below –

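```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Activation

sampleEducbaModel = Sequential()
sampleEducbaModel.add(LSTM(2))                # recurrent layer with 2 units
sampleEducbaModel.add(Dense(1))               # fully connected layer producing one output
sampleEducbaModel.add(Activation('sigmoid'))  # squashes the output into the range (0, 1)
print("Model Created Successfully!")
```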

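Alternatively, we can define the layers in a list and pass that list to the Sequential constructor –

```python
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import LSTM, Dense, Activation
layersToBeIncluded = [LSTM(2), Dense(1), Activation('sigmoid')]
sampleEducbaModel = Sequential(layersToBeIncluded)
```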

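Both snippets build the same model; the first one also prints "Model Created Successfully!".

Depending on the requirement, the same structure can serve different prediction problems, such as regression, binary classification, or multiclass classification. What changes is mainly the final Dense layer, its activation, and the loss function used at compilation.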
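As a rough guide to what changes between regression, binary classification, and multiclass classification, the sketch below maps each task to a typical choice of final Dense layer size, activation, and loss. The helper name output_head_for is hypothetical, and the pairings are common conventions rather than rules enforced by Keras; the activation and loss strings are standard Keras names.

```python
def output_head_for(task, num_classes=None):
    """Hypothetical helper: return (dense_units, activation, loss) commonly
    used for the final layer of a Keras model for the given task."""
    if task == "regression":
        return 1, "linear", "mean_squared_error"
    if task == "binary":
        return 1, "sigmoid", "binary_crossentropy"
    if task == "multiclass":
        if not num_classes or num_classes < 2:
            raise ValueError("multiclass needs num_classes >= 2")
        # 'sparse_categorical_crossentropy' is the usual choice if labels are integers
        return num_classes, "softmax", "categorical_crossentropy"
    raise ValueError(f"unknown task: {task!r}")


units, activation, loss = output_head_for("multiclass", num_classes=5)
print(units, activation, loss)  # 5 softmax categorical_crossentropy
```

In a Keras model, these values would be used as Dense(units, activation=activation) for the last layer and compile(loss=loss, ...) when compiling.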

- Compilation of the created network

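Compiling configures the optimizer, the loss function, and the metrics to track. For compiling, we will write the following code snippet –

```python
from tensorflow.keras.optimizers import SGD

# Stochastic gradient descent with momentum; metrics belong to compile(), not to the optimizer
educbaAlgo = SGD(learning_rate=0.1, momentum=0.3)
sampleEducbaModel.compile(loss='mean_squared_error', optimizer=educbaAlgo, metrics=['accuracy'])
print("Compilation done!")
```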

This snippet prints "Compilation done!".
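To see what learning_rate=0.1 and momentum=0.3 do, here is a plain-Python sketch of the (non-Nesterov) momentum update rule described in the Keras SGD documentation, applied to a single weight. The toy loss f(w) = (w - 3)^2 and the function name sgd_momentum are assumptions chosen only for illustration.

```python
def sgd_momentum(grad_fn, w, learning_rate=0.1, momentum=0.3, steps=50):
    """Plain SGD with momentum on a single scalar weight."""
    velocity = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        # velocity keeps a decaying memory of previous steps
        velocity = momentum * velocity - learning_rate * g
        w = w + velocity
    return w


# Toy loss f(w) = (w - 3)**2 has gradient 2 * (w - 3) and its minimum at w = 3
final_w = sgd_momentum(lambda w: 2 * (w - 3), w=0.0)
print(round(final_w, 4))  # 3.0
```

A larger momentum lets past gradients keep pushing the weight in a consistent direction, while momentum=0 reduces this to plain gradient descent.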

- Fitting the network

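To fit (train) the LSTM network we have created, we use the following snippet, where X holds the input sequences and y the target values –

```python
# X: inputs shaped (samples, timesteps, features); y: one target per sample
maintainHistory = sampleEducbaModel.fit(X, y, batch_size=10, epochs=100, verbose=0)  # returns a History object
print("Mechanism created for maintaining history.")
```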

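Once training finishes, this snippet prints "Mechanism created for maintaining history.". The returned History object stores the loss and metric values recorded after each epoch.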
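Together, batch_size and epochs determine how many weight updates fit() performs: each epoch passes over all samples once, in batches of batch_size, and the last batch may be smaller. The short calculation below illustrates this. The 95-sample dataset is hypothetical; the 9-sample case matches the full example later in this article.

```python
import math

def updates_per_training_run(num_samples, batch_size, epochs):
    """Gradient updates performed by fit(): ceil(samples / batch_size) per epoch."""
    batches_per_epoch = math.ceil(num_samples / batch_size)
    return batches_per_epoch, batches_per_epoch * epochs


# Hypothetical dataset of 95 samples with the settings above (batch_size=10, epochs=100)
print(updates_per_training_run(95, batch_size=10, epochs=100))  # (10, 1000)

# The full example: 9 samples, batch_size=len(X)=9, epochs=1000
print(updates_per_training_run(9, batch_size=9, epochs=1000))   # (1, 1000)
```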

- Network evaluation

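We can evaluate the network with the following snippet. Because the model was compiled with metrics=['accuracy'], evaluate() returns the loss followed by the accuracy –

```python
# Returns [loss, accuracy] because the model was compiled with metrics=['accuracy']
acquired_loss, achieved_accuracy = sampleEducbaModel.evaluate(X, y, verbose=0)
print("Evaluation of model completed")
```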

This snippet prints "Evaluation of model completed".
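The first value evaluate() returns is the loss the model was compiled with, here mean squared error. As a sketch, when each sample has a single target value, this loss is the average of the squared differences between targets and predictions over all samples. The function below and its input numbers are made up for illustration.

```python
def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions,
    for one target value per sample."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Squared errors are 0, 1, 0 and 4, so the mean is 5 / 4
print(mean_squared_error([1, 2, 3, 4], [1, 3, 3, 2]))  # 1.25
```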

- Making predictions

Lastly, we use the trained network to make predictions with the following code snippet –

```python
# One prediction per input sample; with Dense(1) the result has shape (samples, 1)
resultant_predictions = sampleEducbaModel.predict(X, verbose=0)
print("Made the use of model for prediction!")
```

This snippet prints "Made the use of model for prediction!".

Keras LSTM networks

LSTM, which stands for long short-term memory network, is a special kind of RNN that can learn long-term dependencies, which are a problem for simple RNNs. LSTM was introduced in 1997 by Sepp Hochreiter and Jürgen Schmidhuber and soon became widely used because of its strong performance on many sequence-learning tasks.

LSTMs are designed to remember information for long periods; this is their default built-in behavior, so no additional effort is needed to enable it.

Recurrent neural networks (RNNs) have the form of a chain of repeating neural network modules. In a simple RNN, each module contains a single layer, such as a tanh layer; in an LSTM, the repeating module contains four interacting layers.
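One way to see the four layers inside each LSTM module is to count trainable parameters. A simple RNN layer has one set of input weights, recurrent weights, and biases; an LSTM layer has four such sets, one per internal layer. The functions below are a sketch of that counting for the standard Keras layers, assuming use_bias=True. For the network in the example later in this article (LSTM(10) on one input feature, followed by Dense(1)), model.summary() should report the same 480 and 11 parameters.

```python
def simple_rnn_params(input_dim, units):
    # input weights + recurrent weights + bias of a single layer
    return units * (input_dim + units + 1)

def lstm_params(input_dim, units):
    # four internal layers (forget, input, candidate, output), each with its own weights
    return 4 * simple_rnn_params(input_dim, units)

def dense_params(input_dim, units):
    # weights + bias
    return units * (input_dim + 1)


print(simple_rnn_params(1, 10))                  # 120
print(lstm_params(1, 10))                        # 480
print(lstm_params(1, 10) + dense_params(10, 1))  # 491
```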

Keras LSTM model

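tf.keras.layers.LSTM is the class that helps us create LSTM layers. Its constructor arguments define the layer's behavior; only units is required, and all the others have defaults –

- units: positive integer, the dimensionality of the output space (the number of LSTM units)

- activation: activation function to use; default tanh

- recurrent_activation: activation function to use for the recurrent step; default sigmoid

- use_bias: whether the layer uses a bias vector; default True

- kernel_initializer: initializer for the kernel weights matrix, used for the linear transformation of the inputs; default glorot_uniform

- recurrent_initializer: initializer for the recurrent_kernel weights matrix, used for the linear transformation of the recurrent state; default orthogonal

- bias_initializer: initializer for the bias vector; default zeros

- unit_forget_bias: if True (the default), adds 1 to the bias of the forget gate at initialization, which also forces bias_initializer to zeros

- kernel_regularizer: regularizer function applied to the kernel weights matrix

- recurrent_regularizer: regularizer function applied to the recurrent_kernel weights matrix

- bias_regularizer: regularizer function applied to the bias vector

- activity_regularizer: regularizer function applied to the output of the layer

- kernel_constraint: constraint function applied to the kernel weights matrix

- recurrent_constraint: constraint function applied to the recurrent_kernel weights matrix

- bias_constraint: constraint function applied to the bias vector

- dropout: float between 0 and 1, the fraction of units to drop for the linear transformation of the inputs; default 0

- recurrent_dropout: float between 0 and 1, the fraction of units to drop for the linear transformation of the recurrent state; default 0

- return_sequences: whether to return only the last output or the full output sequence; default False

- return_state: whether to return the last state in addition to the output; default False

- go_backwards: if True, processes the input sequence backwards and returns the reversed sequence; default False

- stateful: if True, the last state for each sample at index i in a batch is used as the initial state for the sample at index i in the following batch; default False

- time_major: if True, inputs and outputs have shape (timesteps, batch, feature) instead of (batch, timesteps, feature); default False

- unroll: if True, the network is unrolled, otherwise a symbolic loop is used; unrolling can speed up an RNN but uses more memory and suits only short sequences; default False

When the layer is called on data, it also accepts these call arguments –

- inputs: a 3D tensor with shape (batch, timesteps, feature)

- mask: optional binary tensor of shape (samples, timesteps) indicating whether a given time step should be masked

- training: Python boolean indicating whether the layer should behave in training or inference mode; only relevant when dropout or recurrent_dropout is used

- initial_state: optional list of initial state tensors to pass to the first call of the cell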
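The return_sequences and return_state arguments change what the layer returns, which is easiest to see from the output shapes. This sketch assumes TensorFlow 2.x is installed and feeds random inputs of shape (batch, timesteps, features) = (4, 5, 3); the sizes are arbitrary.

```python
import numpy as np
import tensorflow as tf

inputs = np.random.random((4, 5, 3)).astype("float32")  # (batch, timesteps, features)

# Default: only the output of the last time step
last_output = tf.keras.layers.LSTM(10)(inputs)
print(last_output.shape)  # (4, 10)

# return_sequences=True: the output at every time step
# return_state=True: additionally the final hidden state and the final cell state
lstm = tf.keras.layers.LSTM(10, return_sequences=True, return_state=True)
whole_seq_output, final_memory_state, final_carry_state = lstm(inputs)
print(whole_seq_output.shape)    # (4, 5, 10)
print(final_memory_state.shape)  # (4, 10), equals the last step of whole_seq_output
print(final_carry_state.shape)   # (4, 10), the cell state c
```

Setting return_sequences=True is what allows LSTM layers to be stacked, since the next recurrent layer again needs a 3D (batch, timesteps, features) input.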

Examples

Let us take one example that creates a Keras LSTM network, trains it, and uses it for predictions. The network learns to predict the next value of the sequence 0.0, 0.1, ..., 0.9 from the current value –

```python
# Importing the required objects from libraries for learning the sampleEducbaSequence
from pandas import DataFrame
from pandas import concat
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
from tensorflow.keras.layers import LSTM

# sampleEducbaSequence creation: 0.0, 0.1, ..., 0.9
totalLength = 10
sampleEducbaSequence = [i/float(totalLength) for i in range(totalLength)]
print(sampleEducbaSequence)

# x - y pairs are created: each value (x) is paired with the value that follows it (y)
sampleDataFrameObj = DataFrame(sampleEducbaSequence)
sampleDataFrameObj = concat([sampleDataFrameObj.shift(1), sampleDataFrameObj], axis=1)
sampleDataFrameObj.dropna(inplace=True)

# conversion to the LSTM-friendly 3D structure [samples, timesteps, features]
inputSampleValues = sampleDataFrameObj.values
X, y = inputSampleValues[:, 0], inputSampleValues[:, 1]
X = X.reshape(len(X), 1, 1)

# 1. network definition
sampleEducbaModel = Sequential()
sampleEducbaModel.add(LSTM(10, input_shape=(1, 1)))
sampleEducbaModel.add(Dense(1))

# 2. network is compiled here
sampleEducbaModel.compile(optimizer='adam', loss='mean_squared_error')

# 3. we will need to fit the created network
maintainHistoryObj = sampleEducbaModel.fit(X, y, epochs=1000, batch_size=len(X), verbose=0)

# 4. network evaluation needs to be done
calculatedLoss = sampleEducbaModel.evaluate(X, y, verbose=0)
print(calculatedLoss)

# 5. we can make the required achievedPredictions by using the created network
achievedPredictions = sampleEducbaModel.predict(X, verbose=0)
print(achievedPredictions[:, 0])
```

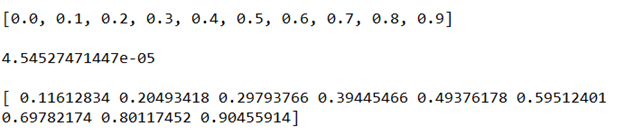

The output of the execution of the above code is as shown in the below image –
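The pandas shift/concat step in the example pairs each value with the one after it, and the reshape then produces the 3D [samples, timesteps, features] layout that LSTM layers expect. The NumPy sketch below does the same framing and generalizes it to n_steps previous values per sample. The helper make_windows is hypothetical; with n_steps=1 it reproduces the X and y of the example.

```python
import numpy as np

def make_windows(sequence, n_steps):
    """Hypothetical helper: turn a 1D sequence into LSTM-ready samples.

    Each sample uses n_steps consecutive values as input and the value
    right after them as the target. Returns X with shape
    (samples, n_steps, 1) and y with shape (samples,).
    """
    seq = np.asarray(sequence, dtype="float32")
    num_samples = len(seq) - n_steps
    X = np.stack([seq[i:i + n_steps] for i in range(num_samples)])
    y = seq[n_steps:]
    return X.reshape(num_samples, n_steps, 1), y


totalLength = 10
sequence = [i / float(totalLength) for i in range(totalLength)]

X1, y1 = make_windows(sequence, n_steps=1)
print(X1.shape, y1.shape)  # (9, 1, 1) (9,)

X3, y3 = make_windows(sequence, n_steps=3)
print(X3.shape, y3.shape)  # (7, 3, 1) (7,)
print(X3[0, :, 0], y3[0])  # first window of three values and its target
```

With n_steps greater than 1, the first LSTM layer would be declared with input_shape=(n_steps, 1) instead of (1, 1).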

Conclusion

The Keras LSTM layer is used as an alternative to a simple RNN because it can retain information over long sequences, which resolves the long-term dependency problem that simple RNNs struggle with.
