What is Few-Shot Learning?

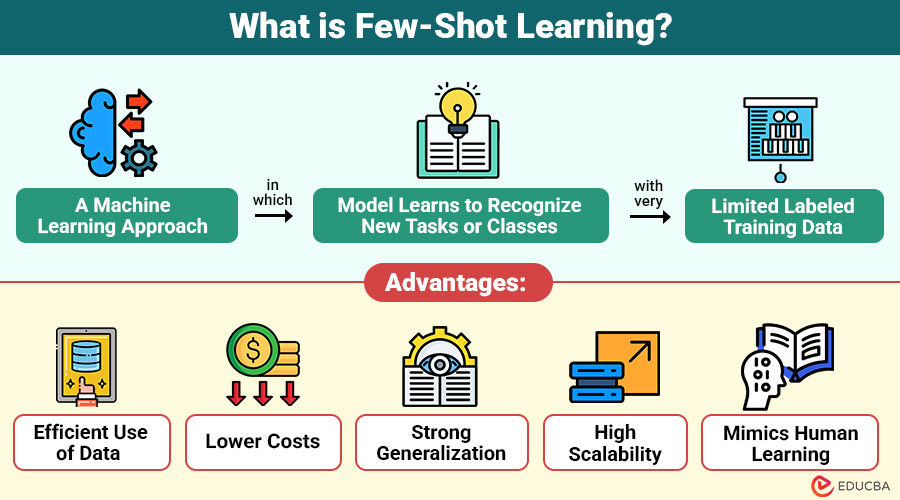

Few-shot learning (FSL) is a machine learning approach in which a model learns to recognize new tasks or classes from very limited labeled training data. Instead of requiring thousands of examples, FSL enables the model to generalize from a few samples by leveraging prior knowledge acquired on related tasks.

Few-shot learning settings include:

- One-Shot Learning: Learning from just one example.

- Few-Shot Learning: Learning from a handful of examples per class, typically 2–10.

- Zero-Shot Learning: Making predictions without any labeled samples of the target class, using auxiliary information such as class descriptions instead.

Table of Contents:

- Meaning

- Why Does Few-Shot Learning Matter?

- Working

- Types

- Few-Shot Learning vs Traditional Machine Learning

- Advantages

- Disadvantages

- Real-World Examples

Key Takeaways:

- Few-shot learning enables models to rapidly learn unfamiliar categories from minimal examples, improving adaptability across diverse tasks.

- Meta-learning foundations allow few-shot models to extract transferable knowledge, supporting efficient learning in dynamic real-world environments.

- Generative and metric-based approaches strengthen few-shot performance by enhancing representation quality even with scarce training data.

- Few-shot techniques let AI learn from just a few examples, so you don’t need huge labeled datasets. This makes it faster and easier to deploy AI, especially when data, time, or resources are limited.

Why Does Few-Shot Learning Matter?

Few-shot learning addresses several major limitations of traditional ML models:

1. Reduces Data Dependency

It lowers the need for large labeled datasets, making model training cheaper, faster, and more efficient across domains.

2. Enables Rapid Adaptation

Models quickly learn new classes or tasks with minimal examples, allowing fast updates in industries where conditions and requirements constantly change.

3. Supports Rare or Novel Events

It helps identify rare patterns or anomalies where data is scarce, improving accuracy in fields such as healthcare, fraud detection, and security.

4. Boosts Personalization

AI systems produce personalized predictions using small amounts of user-specific data, enabling more tailored experiences without the need for massive datasets.

How Does Few-Shot Learning Work?

Few-shot learning is commonly implemented through a learning paradigm called meta-learning, or “learning to learn.” Instead of training on a single large task, the model trains on many small tasks, which lets it learn a prior that generalizes to new tasks.

Process:

1. Meta-Training

- Train on many “episodes”

- Each episode contains a few labeled examples (the support set) and a set of query examples.

- The model learns patterns that generalize well.

2. Meta-Testing

- A new class appears with only a few labeled samples.

- The model adapts quickly using what it learned during meta-training.

Key Components:

- Support Set: Small labeled examples (e.g., 1–5 images/class)

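- Query Set: Held-out samples from the same classes as the support set; the model predicts their labels, and the error on those predictions is used to train and evaluate it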

- Embedding Network: Extracts features and representations

- Similarity Metric or Adaptation Mechanism: Helps classify new tasks
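The episode structure described above can be illustrated with a minimal sketch. It assumes a toy dataset stored as a dictionary from class label to a list of examples; the function name `sample_episode` and the placeholder strings such as `img_0_1` are hypothetical and only stand in for real data.

```python
import random

def sample_episode(dataset, n_way, k_shot, n_query, rng):
    """Sample one N-way K-shot episode from a dict {class_label: [examples]}."""
    # Pick which classes take part in this episode.
    classes = rng.sample(sorted(dataset), n_way)
    support, query = [], []
    for label in classes:
        # Draw K support and n_query query examples without overlap.
        picked = rng.sample(dataset[label], k_shot + n_query)
        support += [(x, label) for x in picked[:k_shot]]
        query += [(x, label) for x in picked[k_shot:]]
    return support, query

# Toy dataset (assumption): 5 classes with 10 placeholder examples each.
toy_dataset = {f"class_{c}": [f"img_{c}_{i}" for i in range(10)] for c in range(5)}
rng = random.Random(0)
support_set, query_set = sample_episode(toy_dataset, n_way=3, k_shot=2, n_query=4, rng=rng)
print(len(support_set), len(query_set))  # 6 12
```

During meta-training, a loop would draw many such episodes, fit or adapt the model on each support set, and update it based on its predictions for the matching query set.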

Types of Few-Shot Learning Approaches

Here are the major types of few-shot learning that enable effective learning from minimal examples:

1. Metric-Based Methods

Learn a discriminative embedding space to compare support and query samples using similarity metrics, enabling effective classification with minimal labeled examples.
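As a rough illustration of the metric-based idea, the sketch below averages the support embeddings of each class into a prototype and assigns a query to the nearest prototype, which is the comparison step used by prototype-style methods. It assumes the embeddings have already been produced by some embedding network; the two-dimensional vectors and the labels are made up for the example.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(dim) / n for dim in zip(*vectors)]

def euclidean(a, b):
    """Euclidean distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def build_prototypes(support):
    """support: list of (embedding, label). Returns {label: prototype}."""
    by_label = {}
    for emb, label in support:
        by_label.setdefault(label, []).append(emb)
    return {label: mean_vector(embs) for label, embs in by_label.items()}

def classify(query_emb, prototypes):
    """Assign the label of the nearest prototype."""
    return min(prototypes, key=lambda label: euclidean(query_emb, prototypes[label]))

# Hypothetical 2-shot support set with hand-written embeddings.
support = [
    ([0.9, 0.1], "cat"), ([1.1, -0.1], "cat"),
    ([-1.0, 0.2], "dog"), ([-0.8, -0.2], "dog"),
]
prototypes = build_prototypes(support)
print(classify([0.7, 0.0], prototypes))   # cat
print(classify([-0.5, 0.1], prototypes))  # dog
```

Adding a new class only requires computing one more prototype from its few support embeddings; no retraining of the embedding network is involved in this sketch.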

2. Optimization-Based Methods

Train models that rapidly adapt to new tasks through meta-learned initialization or update rules, enabling fast learning from extremely small datasets.
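One way to picture a meta-learned initialization is the toy sketch below. It uses a Reptile-style first-order update, chosen here for simplicity rather than because the article prescribes it, and it assumes each task is a one-parameter regression `y = slope * x` with slopes drawn from a made-up range. All names and hyperparameters are illustrative.

```python
import random

def inner_adapt(w, xs, ys, lr=0.1, steps=5):
    """A few gradient steps on mean squared error for the model y = w * x."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w

def reptile(num_tasks=2000, meta_lr=0.05, seed=0):
    """Learn an initial weight that adapts quickly across related tasks."""
    rng = random.Random(seed)
    w_init = 0.0
    for _ in range(num_tasks):
        slope = rng.uniform(2.0, 4.0)                    # each task: y = slope * x
        xs = [rng.uniform(-1.0, 1.0) for _ in range(5)]  # 5-shot support set
        ys = [slope * x for x in xs]
        w_task = inner_adapt(w_init, xs, ys)
        # Reptile-style update: move the initialization toward the adapted weight.
        w_init += meta_lr * (w_task - w_init)
    return w_init

w0 = reptile()

# New task (assumption): y = 3.5 * x, with only three labeled points.
new_xs, new_ys = [0.5, -1.0, 0.8], [1.75, -3.5, 2.8]
from_meta = inner_adapt(w0, new_xs, new_ys)
from_zero = inner_adapt(0.0, new_xs, new_ys)
print(abs(from_meta - 3.5) < abs(from_zero - 3.5))  # True
```

With the same five gradient steps, starting from the meta-learned weight lands closer to the new task's true slope than starting from zero, which is the effect a learned initialization is meant to provide.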

3. Memory-Based Methods

Use external memory modules to store and retrieve past experiences, helping models generalize from few examples in sequential or reasoning tasks.
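The store-and-retrieve idea can be sketched with a very simple key-value memory. This is a deliberate simplification: memory-based models typically learn how to read and write, whereas the hypothetical `EpisodicMemory` class below just appends entries and retrieves them by cosine similarity. The embeddings and labels are invented for the example.

```python
import math
from collections import Counter

class EpisodicMemory:
    """Stores (key embedding, label) pairs and answers queries by similarity lookup."""

    def __init__(self):
        self.keys = []
        self.labels = []

    def write(self, embedding, label):
        """Append one experience to memory."""
        self.keys.append(embedding)
        self.labels.append(label)

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def read(self, query, top_k=3):
        """Return the majority label among the top_k most similar stored keys."""
        ranked = sorted(
            range(len(self.keys)),
            key=lambda i: self._cosine(query, self.keys[i]),
            reverse=True,
        )
        votes = Counter(self.labels[i] for i in ranked[:top_k])
        return votes.most_common(1)[0][0]

memory = EpisodicMemory()
memory.write([1.0, 0.1], "normal")
memory.write([0.9, 0.2], "normal")
memory.write([0.1, 1.0], "fraud")
print(memory.read([0.2, 0.9], top_k=1))  # fraud
print(memory.read([1.0, 0.0], top_k=3))  # normal
```

A single stored example of a new class is immediately available for retrieval, which is why memory-style designs suit settings where examples arrive one at a time.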

4. Data Augmentation & Generative Methods

Use augmentation or generative models to synthesize realistic additional examples, enlarging small datasets and helping models learn better, especially for categories with very few real samples.
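As a minimal stand-in for this family of methods, the sketch below enlarges a tiny class by adding Gaussian noise to its feature vectors. Noise jitter is a much simpler technique than a trained generative model and is used here only to show the data flow; the function name, feature values, and noise level are assumptions.

```python
import random

def augment_with_noise(examples, copies_per_example, noise_std, rng):
    """Return the original feature vectors plus noisy copies of each one."""
    augmented = [list(x) for x in examples]
    for x in examples:
        for _ in range(copies_per_example):
            # Perturb every feature slightly to create a plausible variant.
            augmented.append([v + rng.gauss(0.0, noise_std) for v in x])
    return augmented

rng = random.Random(42)
rare_class = [[0.2, 1.5, -0.3], [0.1, 1.7, -0.4]]  # only two real samples
expanded = augment_with_noise(rare_class, copies_per_example=4, noise_std=0.05, rng=rng)
print(len(expanded))  # 10
```

The noise level matters: too little adds no new information, while too much can push synthetic samples across class boundaries.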

Few-Shot Learning vs Traditional Machine Learning

Here is a quick comparison highlighting how few-shot learning differs from traditional machine learning across key aspects:

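| Aspect | Few-Shot Learning | Traditional Machine Learning |
| --- | --- | --- |
| Data Requirement | A few labeled examples per new class | Large labeled datasets |
| Training Time | Low for adapting to a new task, after costly meta-training | High; typically retrained for each new task |
| Generalization | High on unseen classes similar to the meta-training tasks | Limited to the classes seen in training |
| Use Case | Rare, novel, or fast-adaptation tasks | Common tasks with abundant data |
| Dependency on Prior Knowledge | Very high | Low |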

Advantages of Few-Shot Learning

Here are the advantages that make few-shot learning powerful for low-data, fast-adaptation machine learning scenarios:

1. Efficient Use of Data

Learns meaningful patterns from very small datasets, enabling accurate predictions even when only a few labeled examples are available for training.

2. Lower Costs

Minimizes the need for expensive data collection and manual labeling, significantly reducing overall development costs for machine learning projects and applications.

3. Strong Generalization

Adapts quickly to unseen classes or tasks by leveraging meta-learned knowledge, ensuring robust performance even in unpredictable or evolving environments.

4. High Scalability

It adapts to new use cases without extensive retraining, which suits fast-growing industries and changing business needs.

5. Mimics Human Learning

Enables AI systems to learn new concepts from minimal exposure, closely resembling human cognitive learning abilities and improving adaptability across tasks.

Disadvantages of Few-Shot Learning

Here are the main disadvantages that can affect its reliability and practicality in real-world use:

1. Unfamiliar Classes

Accuracy degrades when new tasks differ greatly from training data, making generalization difficult for highly unfamiliar categories.

2. Sensitive to Noise

Because each class has so few examples, even minor label noise can significantly harm accuracy and destabilize the model’s performance.

3. Heavy Meta-Training

Meta-training across many tasks demands substantial computational resources, thereby increasing training time and hardware requirements.

4. Limited Applicability

Certain domains inherently require large datasets, making few-shot learning unsuitable for tasks that demand accuracy achievable only with abundant data.

Real-World Examples

Here are some real-world examples that demonstrate how few-shot learning delivers strong performance even with minimal data:

1. FaceNet and Siamese Networks for Face Recognition

Siamese networks and FaceNet-style embeddings let a system verify a person’s identity from just one reference picture, which is useful for unlocking devices, checking identity for security, and surveillance, without needing many training images of each person.

2. Prototypical Networks in Industrial Defect Detection

Manufacturers train models to detect new defect types from only a few example images per type, improving quality control and reducing manual inspection effort.

3. Rare Species Recognition in Wildlife Projects

Few-shot models identify rare animals from limited photos, supporting biodiversity research, conservation monitoring, and automated analysis of wildlife camera trap images.

Final Thoughts

Few-shot learning changes how machines learn: instead of depending on massive datasets, FSL enables models to generalize from a handful of examples, bringing AI training closer to the way humans learn. Its applications span healthcare, finance, retail, robotics, and natural language processing. With rapid advances in meta-learning and generative models, few-shot methods are likely to become more adaptable and faster to deploy.

Frequently Asked Questions (FAQs)

Q1. Is Few-Shot Learning used in large language models?

Answer: Yes. GPT models support few-shot prompting: a handful of worked examples are placed in the prompt, and the model performs the new task without retraining or weight updates.
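A few-shot prompt is ordinary text, so it can be assembled with plain string formatting. The sketch below shows one possible layout; the `Review:`/`Sentiment:` labels, the example reviews, and the function name are assumptions, and no particular model API is called.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Format labeled examples and a new input into a single prompt string."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    # Leave the final label blank for the model to complete.
    lines += [f"Review: {new_input}", "Sentiment:"]
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Great battery life.", "positive"), ("Stopped working after a week.", "negative")],
    "The screen is sharp and bright.",
)
print(prompt)
```

The resulting string would be sent to a language model as its input; the two labeled reviews play the role of the support set.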

Q2. Can Few-Shot Learning be applied to NLP tasks?

Answer: Yes. Few-shot learning is widely used in NLP tasks such as text classification, sentiment analysis, named entity recognition, and question answering, using models like GPT and BERT.

Q3. Does Few-Shot Learning work well with highly imbalanced datasets?

Answer: Partly. FSL can help with the minority classes of an imbalanced dataset because it learns from very few labeled samples, but it remains sensitive to label noise and to classes that differ greatly from the training data.

Q4. Is Few-Shot Learning suitable for real-time applications?

Answer: Yes. Once trained, FSL models adapt rapidly to new classes, making them effective for real-time applications such as surveillance, robotics, and anomaly detection.
