What is an AI Context Window?

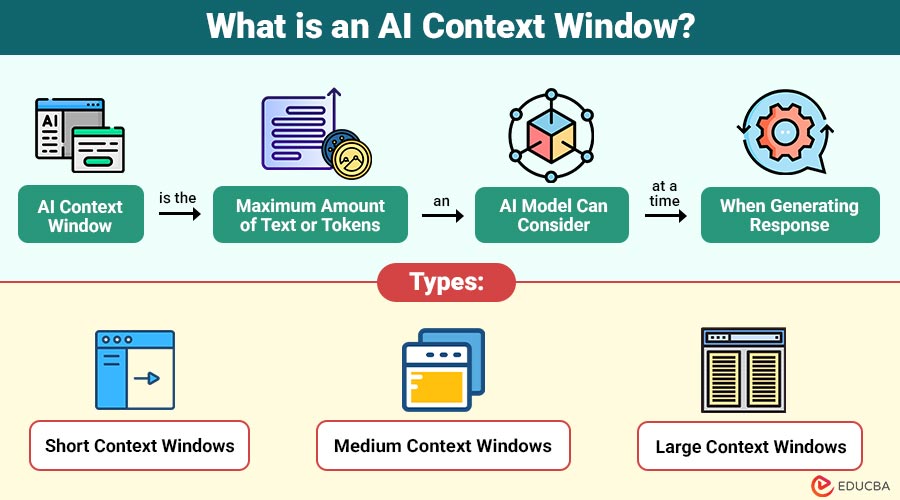

An AI context window is the maximum amount of text, measured in tokens, that an AI model can consider at one time when generating a response. It defines how much prior information (such as user instructions, conversation history, or documents) the model can “remember” during a single interaction.

Table of Contents:

- Meaning

- Why Does AI Context Window Matter?

- Working

- Types

- Benefits

- Limitations

- Use Cases

- Best Practices

Key Takeaways:

- AI context windows determine how much information models consider, directly influencing coherence, accuracy, and continuity.

- Larger context windows improve performance on long-form tasks and instruction-following but significantly increase computational cost and latency.

- Effective prompt structuring and summarization help users get the most value from a limited context window.

- A context window is temporary working memory, not true understanding, so best practices are needed to avoid information loss.

Why Does AI Context Window Matter?

The AI context window plays a pivotal role in determining how effectively an AI can process, understand, and respond to complex instructions.

1. Conversation Continuity

A larger context window allows AI systems to remember prior inputs, maintain a consistent tone, and provide coherent responses. This is especially critical in:

- Customer support chatbots

- Personal AI assistants

- Long-form content generation

2. Long-Form Content Generation

AI writers and content creation tools benefit significantly from a broader context window. With more context available:

- Logical flow is maintained across sections

- Repetition and contradictions are reduced

- Extended narratives or research papers can be generated

3. Code and Technical Analysis

For AI-assisted programming, a larger context window allows:

- Understanding of large portions of a codebase, or all of it when it fits within the window

- Analysis of dependencies and relationships between modules

- Debugging assistance for complex programs

How Does an AI Context Window Work?

Below are the key steps that explain how an AI context window operates in a typical interaction.

Step 1: Input Collection

The AI collects:

- System messages

- User prompts

- Conversation history

Step 2: Tokenization

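The system converts all input into tokens: small units of text such as whole words, word fragments, or punctuation marks. The context limit is counted in these tokens, not in characters or words.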
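As a rough illustration of this step, the sketch below splits text into word and punctuation pieces and counts them. The `rough_tokenize` and `count_tokens` functions are hypothetical stand-ins written for this example. Real models use learned sub-word tokenizers, so their token counts will differ from this approximation.

```python
import re


def rough_tokenize(text: str) -> list[str]:
    """Split text into words and single punctuation marks (approximation only)."""
    # \w+ matches a run of letters/digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


def count_tokens(text: str) -> int:
    """Approximate token count based on the rough tokenizer above."""
    return len(rough_tokenize(text))


sample = "Hello, world! How are you?"
print(rough_tokenize(sample))
print(count_tokens(sample))  # 8 pieces under this approximation
```

Even this crude count shows why punctuation-heavy or code-heavy input can use more of the window than its word count suggests.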

Step 3: Context Limit Check

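If the total token count exceeds the context window size, the application typically does the following:

- It drops or truncates the oldest content.

- It prioritizes recent messages.

Some APIs instead reject an over-limit request with an error and leave truncation to the caller.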
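The limit check can be sketched as a small function that always keeps the system message and then keeps as many of the most recent messages as fit. This is a minimal sketch under stated assumptions, not how any particular product works: `fit_to_window` is a hypothetical helper, and whitespace-separated words stand in for real tokens.

```python
def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate tokens.
    return len(text.split())


def fit_to_window(system: str, history: list[str], max_tokens: int) -> list[str]:
    """Keep the system message plus the newest history messages that fit."""
    budget = max_tokens - count_tokens(system)
    kept: list[str] = []
    # Walk from newest to oldest and stop at the first message that does not fit.
    for message in reversed(history):
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    kept.reverse()  # Restore chronological order.
    return [system] + kept


history = ["one two three", "four five", "six"]
print(fit_to_window("be brief", history, max_tokens=5))
# ['be brief', 'four five', 'six']
```

Keeping the system message regardless of budget is a design choice in this sketch. It reflects the idea that core instructions should survive even when older conversation turns are dropped.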

Step 4: Response Generation

The AI generates output using only the tokens within the active context window.

Step 5: New Context Formation

The response itself becomes part of the context for the next interaction.
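The way each response feeds back into the context can be sketched with a simple message list. Here `echo_model` is a hypothetical placeholder for a real model call, and the role/content dictionary layout is an assumption chosen for illustration.

```python
def echo_model(context: list[dict]) -> str:
    # Hypothetical stand-in for a real model: it only sees what is in `context`.
    last_user = context[-1]["content"]
    return f"You said: {last_user} (context holds {len(context)} messages)"


def chat_turn(context: list[dict], user_text: str) -> str:
    """Add the user message, generate a reply, and store the reply in the context."""
    context.append({"role": "user", "content": user_text})
    reply = echo_model(context)
    # The reply becomes part of the context for the next turn.
    context.append({"role": "assistant", "content": reply})
    return reply


context = [{"role": "system", "content": "You are a helpful assistant."}]
chat_turn(context, "Hi")
chat_turn(context, "What did I say first?")
print(len(context))  # 5: one system message plus two user/assistant pairs
```

Because every turn adds two messages, the context grows steadily, which is why the limit check in Step 3 eventually comes into play.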

Types of AI Context Windows

Below are the main types, categorized by capacity and typical use cases.

1. Short Context Windows

- Suitable for simple Q&A

- Fast and cost-efficient

- Limited understanding of long conversations

2. Medium Context Windows

- Ideal for blogs, documentation, and analysis

- Balanced performance and cost

3. Large Context Windows

- Used for legal, research, and enterprise workloads

- Can process entire books or datasets

- Higher computational cost

Benefits of AI Context Window

Below are the benefits of using a large AI context window in advanced, long-form use cases.

1. Improved Coherence

The AI maintains logical flow and narrative consistency across long responses, conversations, or complex documents.

2. Better Instruction Following

The system retains multiple rules, constraints, and objectives, enabling it to adhere to them accurately throughout extended prompts.

3. Reduced Hallucinations

Access to a broader context reduces guesswork, which can lower the chances of incorrect facts or fabricated details.

4. Enhanced User Experience

Users repeat instructions less often and enjoy smoother, more natural interactions with AI.

Limitations of AI Context Windows

Despite their advantages, context windows have several limitations.

1. Token Constraints

Even expanded context windows remain finite, preventing unlimited memory and eventually forcing older information to be truncated.

2. Performance and Cost

Larger context windows require more computation, increase response latency, and significantly raise overall infrastructure and operational costs.
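One reason cost grows quickly is that standard full self-attention compares every token with every other token, so the number of comparisons grows with the square of the context length. The sketch below illustrates that relationship only. The function names are hypothetical, the figures are not measurements, and production models often use optimizations that change the real cost.

```python
def attention_pairs(num_tokens: int) -> int:
    """Token-to-token comparisons in full self-attention (n * n)."""
    return num_tokens * num_tokens


def relative_cost(num_tokens: int, baseline_tokens: int) -> float:
    """How many times more comparisons than the baseline length needs."""
    return attention_pairs(num_tokens) / attention_pairs(baseline_tokens)


for n in (1_000, 8_000, 32_000):
    print(n, relative_cost(n, baseline_tokens=1_000))
# 1000 1.0
# 8000 64.0
# 32000 1024.0
```

Under this assumption, an 8x longer context needs 64x the attention comparisons, which is why very large windows are noticeably slower and more expensive.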

3. Information Dilution

Excessive context may dilute the model's attention, reducing its focus on critical details and producing broader, less precise responses.

4. No True Understanding

AI lacks genuine comprehension, relying on statistical pattern prediction rather than human-like understanding of meaning or intent.

AI Context Window in Real-World Use Cases

Below are practical use cases that show how context windows deliver value across industries.

1. Content Marketing

Large context windows help marketers create long-form content with consistent messaging, keywords, tone, and strategic alignment.

2. Customer Support

Chatbots leverage large context windows to track issues, recall history, and deliver personalized customer support experiences.

3. Software Development

Developers use large context windows to review code, refactor systems, and debug across multiple files efficiently.

4. Research and Education

AI uses large context windows to summarize research, review the literature, and create structured educational materials.

Best Practices for Using AI Context Windows

Below are best practices for maximizing accuracy, efficiency, and output quality when working within AI context windows.

1. Be Clear and Structured

Clear headings, bullet points, and numbered instructions help AI interpret prompts accurately, efficiently, and consistently.

2. Avoid Unnecessary Information

Removing irrelevant text preserves token space, improves focus, and prevents dilution of important context details.

3. Summarize When Needed

Request summaries of prior content to retain essential information while freeing valuable context space.
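A minimal sketch of this idea replaces older messages with a single summary and keeps the most recent ones verbatim. The `naive_summarize` function is a hypothetical placeholder that simply keeps the first few words. In practice, the summary would come from asking the model itself.

```python
def naive_summarize(messages: list[str], max_words: int = 12) -> str:
    # Hypothetical placeholder: a real system would request a model-written summary.
    words = " ".join(messages).split()
    return "Summary: " + " ".join(words[:max_words])


def compact_history(history: list[str], keep_recent: int = 2) -> list[str]:
    """Replace all but the last `keep_recent` messages with one summary line."""
    if len(history) <= keep_recent:
        return list(history)
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [naive_summarize(older)] + recent


history = ["a b", "c d", "e f", "g h"]
print(compact_history(history, keep_recent=2))
# ['Summary: a b c d', 'e f', 'g h']
```

The trade-off is that detail from older messages is lost, so anything that must survive should be stated explicitly in the summary.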

4. Chunk Large Tasks

Divide long documents into smaller sections to improve processing accuracy and manage context limits effectively.
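Chunking can be sketched as splitting a document into pieces that each stay under a size budget. In this hypothetical `chunk_words` helper, words stand in for tokens. A real pipeline would count tokens with the model's own tokenizer and would usually split on paragraph or section boundaries.

```python
def chunk_words(text: str, max_words: int) -> list[str]:
    """Split text into consecutive chunks of at most `max_words` words."""
    if max_words < 1:
        raise ValueError("max_words must be at least 1")
    words = text.split()
    return [
        " ".join(words[i:i + max_words])
        for i in range(0, len(words), max_words)
    ]


print(chunk_words("a b c d e", max_words=2))
# ['a b', 'c d', 'e']
```

Each chunk can then be processed in its own request, with the partial results combined afterward.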

5. Restate Key Instructions

Repeat critical constraints in long conversations to ensure compliance despite context window limitations and truncation.
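One simple way to apply this is to append a short reminder to every Nth user message so the constraint stays within the recent part of the context. The `with_reminders` helper and its reminder format are assumptions made for illustration.

```python
def with_reminders(user_messages: list[str], reminder: str, every: int) -> list[str]:
    """Append `reminder` to every `every`-th user message."""
    if every < 1:
        raise ValueError("every must be at least 1")
    result = []
    for position, message in enumerate(user_messages, start=1):
        if position % every == 0:
            result.append(f"{message}\n\nReminder: {reminder}")
        else:
            result.append(message)
    return result


messages = ["Draft the intro", "Now the summary"]
print(with_reminders(messages, "Keep it under 100 words.", every=2))
```

A suitable interval depends on how long the messages are. Shorter intervals use more tokens but make it less likely that the constraint is truncated.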

Final Thoughts

The AI context window defines how much information a model can process, retain, and respond to during a task or conversation. Its size directly affects performance across content creation, customer support, analytics, and software development. Understanding how it works, along with its benefits and limitations, enables better prompting, fewer errors, and greater value. Future advances are likely to bring smarter, more efficient context handling for AI systems.

Frequently Asked Questions (FAQs)

Q1. What happens when an AI context window is exceeded?

Answer: Older information is dropped, and the AI no longer considers it when generating responses.

Q2. Is a larger context window always better?

Answer: Not always. Larger windows improve memory but increase cost and processing time.

Q3. Can users control the context window size?

Answer: Typically not. The maximum size is determined by the AI model, though prompt design can optimize how that space is used.

Q4. Does the context window store personal data?

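Answer: No. The context window itself only processes information temporarily during a session. Whether a service separately logs or retains conversations depends on the provider.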

Recommended Articles

We hope that this EDUCBA information on “AI Context Window” was beneficial to you. You can view EDUCBA’s recommended articles for more information.